Jenkins version: Jenkins 2.309

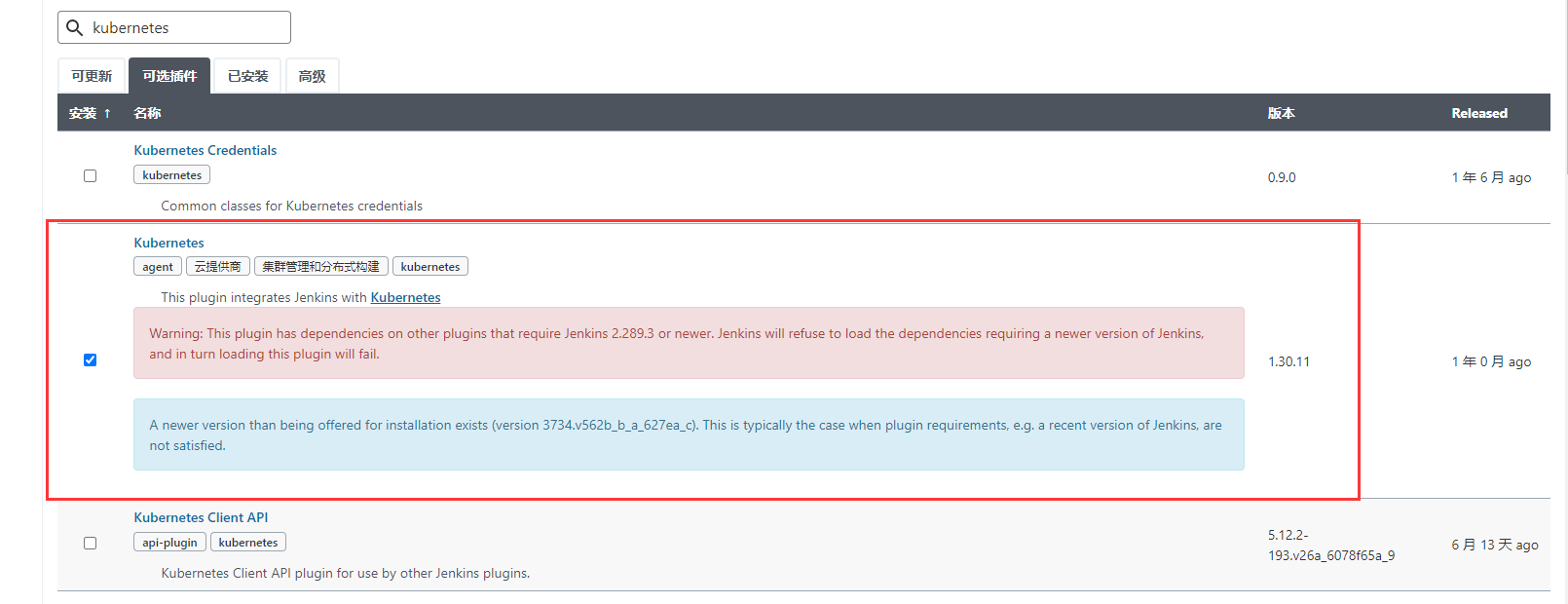

1. Install the Kubernetes plugin

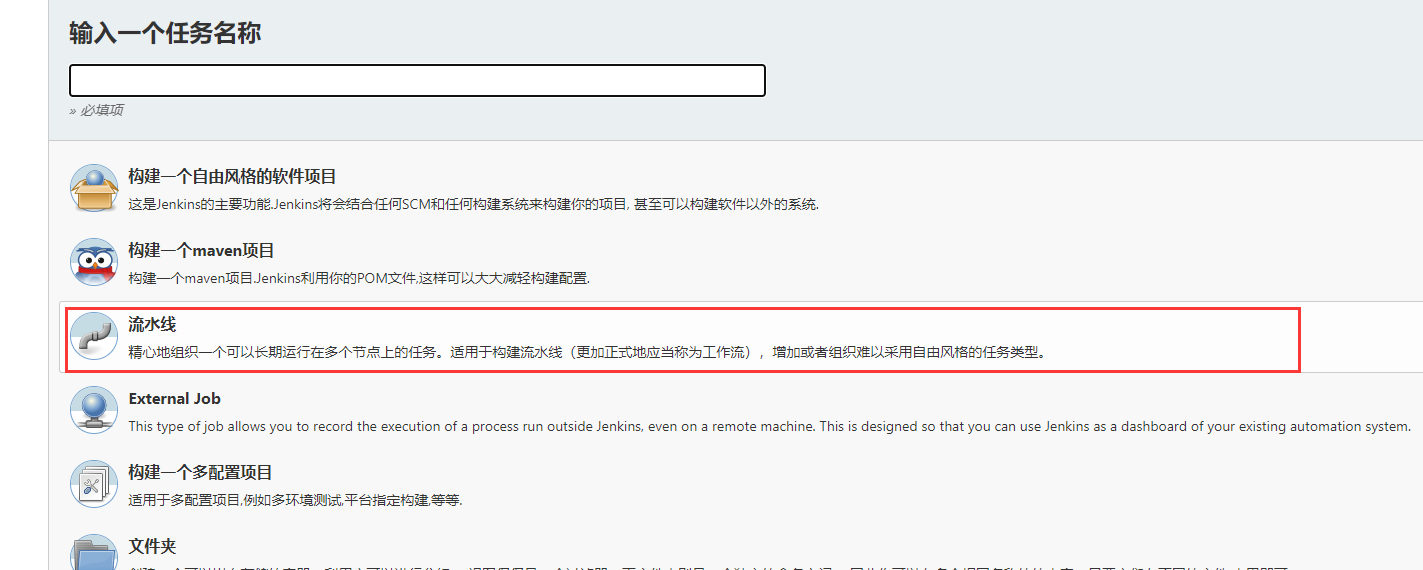

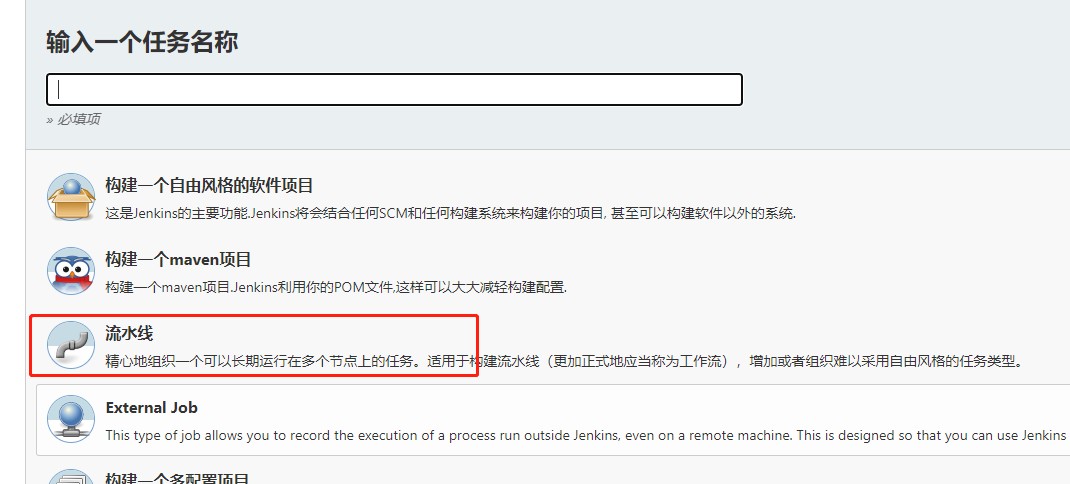

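2. Create a new pipeline project: enter a name (prometheus-test-demo), select Pipeline, and click OK

3. Connect to the K8S cluster

3.1 Obtain K8S credentials

Because the Jenkins server runs outside the Kubernetes cluster, we need to prepare the following files in order to connect to the cluster from outside.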

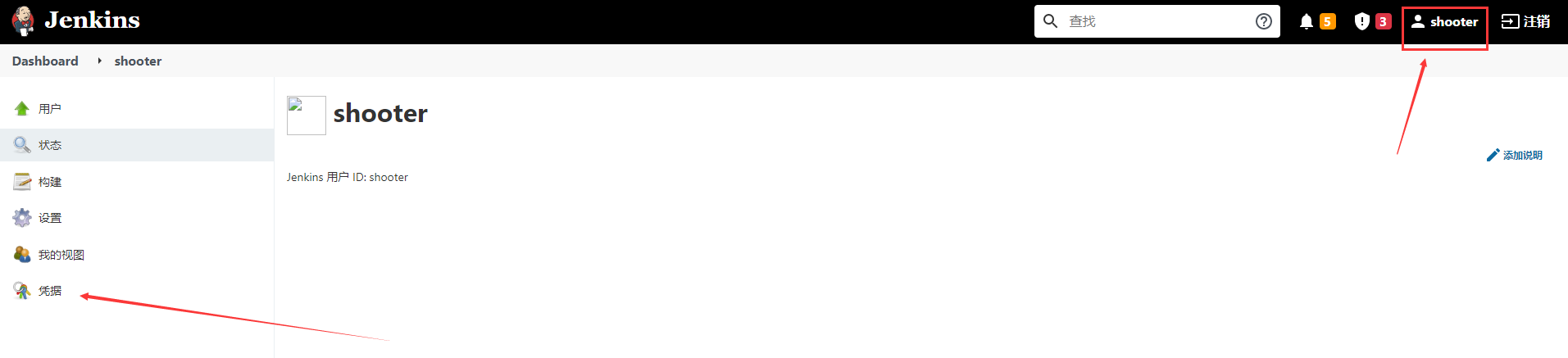

3.2 Click the user menu in the top-right corner → "Credentials" in the lower-left:

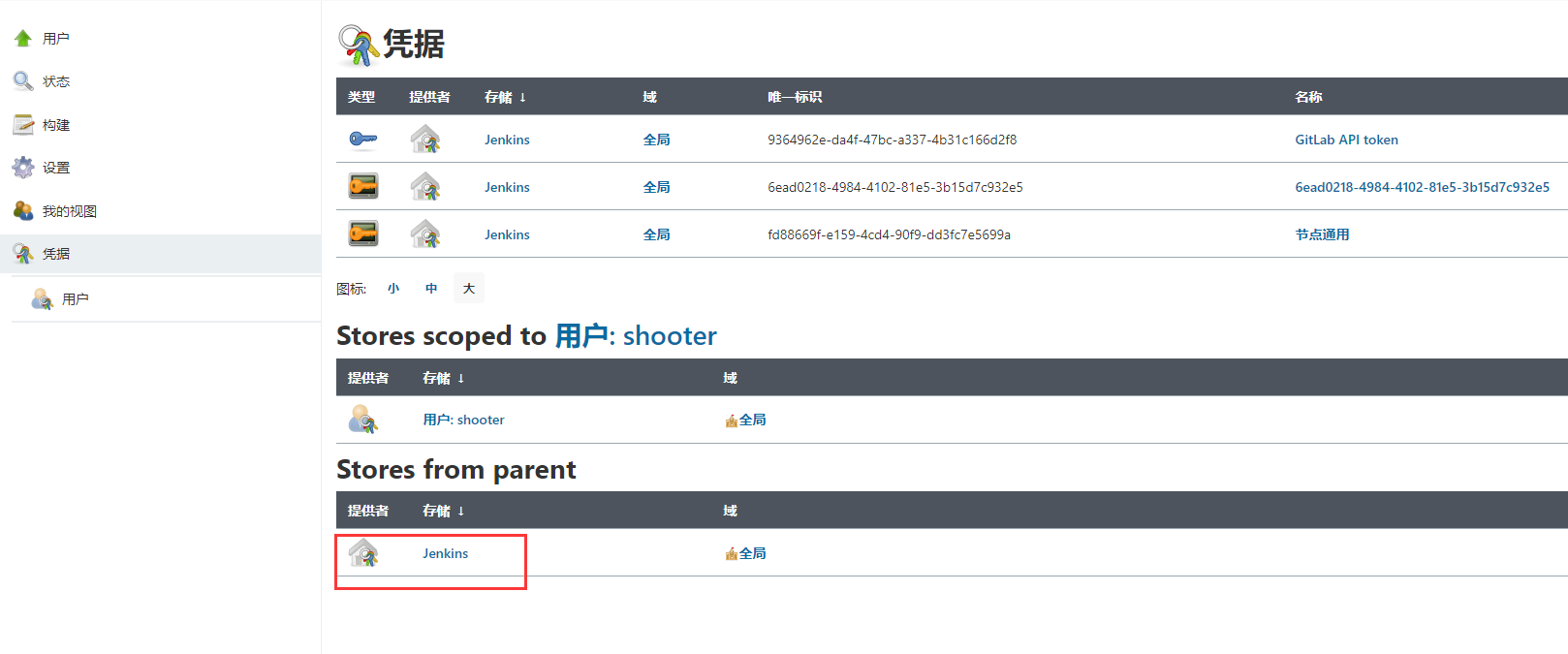

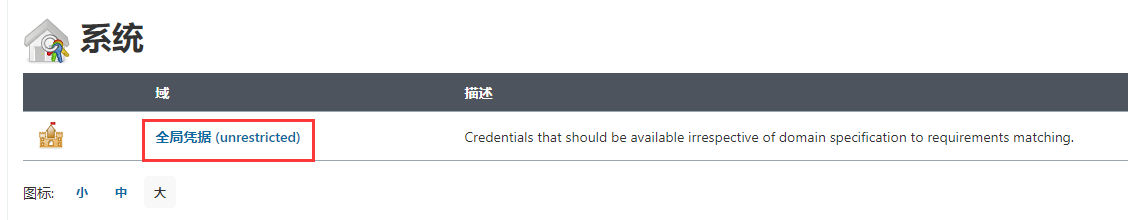

3.3 Then click Jenkins and select Global credentials (unrestricted)

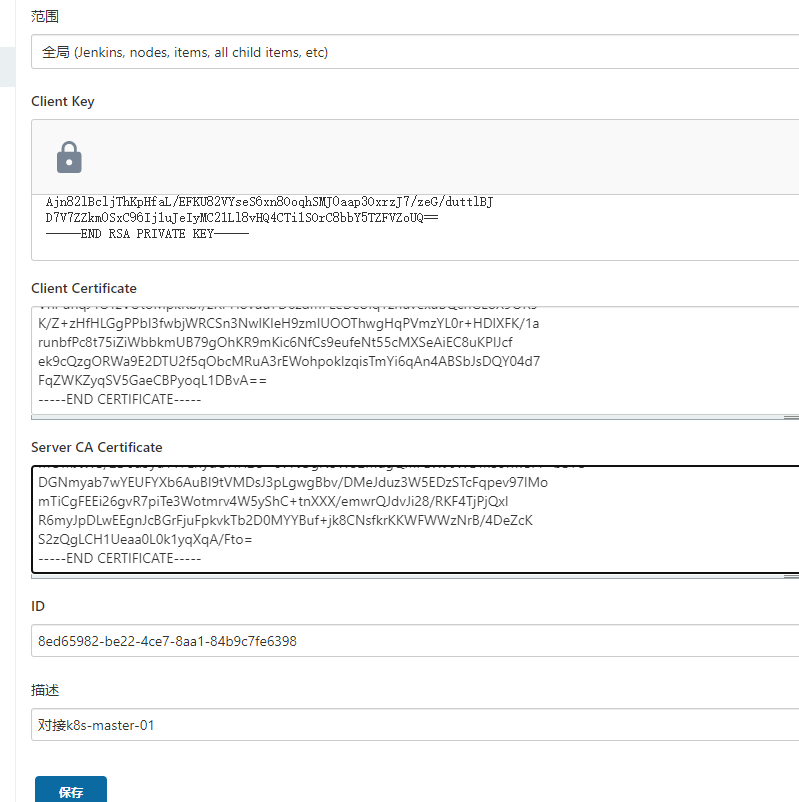

Add a credential and choose the type X.509 Client Certificate (if this option is not available, install the Docker Pipeline plugin).

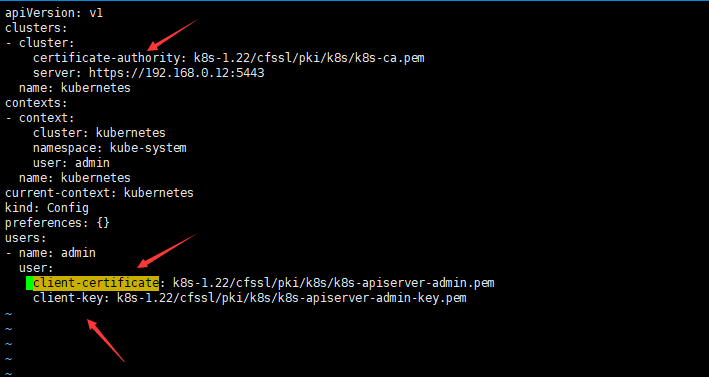

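- Client Key: the key file referenced by client-key in the .kube/config file

- Client Certificate: the crt or pem file referenced by client-certificate in the .kube/config file

- Server CA Certificate: the crt or pem file referenced by certificate-authority in the .kube/config file; this is the cluster's top-level (CA) certificate

- ID: optional; a random string is generated by default, or you can set your own

- Description: what this credential is for, for example "Credential for connecting to the K8S cluster"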

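Note! For the key and certificate fields, simply locate the corresponding certificate files (as shown in the figure). The certificate data embedded directly in .kube/config cannot be pasted as-is, because it is base64-encoded (encoded, not encrypted). Either regenerate the kubeconfig so that it references file paths, or find the corresponding pem files as shown in the figure above.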
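If only the embedded form is available, the data can be decoded rather than regenerated. The following is a minimal sketch, assuming the kubeconfig uses the standard `certificate-authority-data`, `client-certificate-data`, and `client-key-data` fields; the helper name `decode_kube_field` and the output file names are hypothetical.

```shell
#!/bin/sh
# decode_kube_field KUBECONFIG FIELD OUTFILE
# Finds the first "FIELD: <base64>" line in the kubeconfig and writes the
# decoded content (normally PEM text) to OUTFILE.
decode_kube_field() {
    kubeconfig=$1
    field=$2
    outfile=$3
    awk -v key="${field}:" '$1 == key { print $2; exit }' "$kubeconfig" \
        | base64 -d > "$outfile"
    # Fail if nothing was decoded (field missing, or stored as a file path instead).
    [ -s "$outfile" ]
}

# Example usage (hypothetical output file names):
#   decode_kube_field ~/.kube/config certificate-authority-data ca.crt
#   decode_kube_field ~/.kube/config client-certificate-data client.crt
#   decode_kube_field ~/.kube/config client-key-data client.key
```

The decoded files can then be pasted into the Client Key, Client Certificate, and Server CA Certificate fields described above.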

After filling in the fields, click OK.

3.4 Configure the connection to the K8S cluster

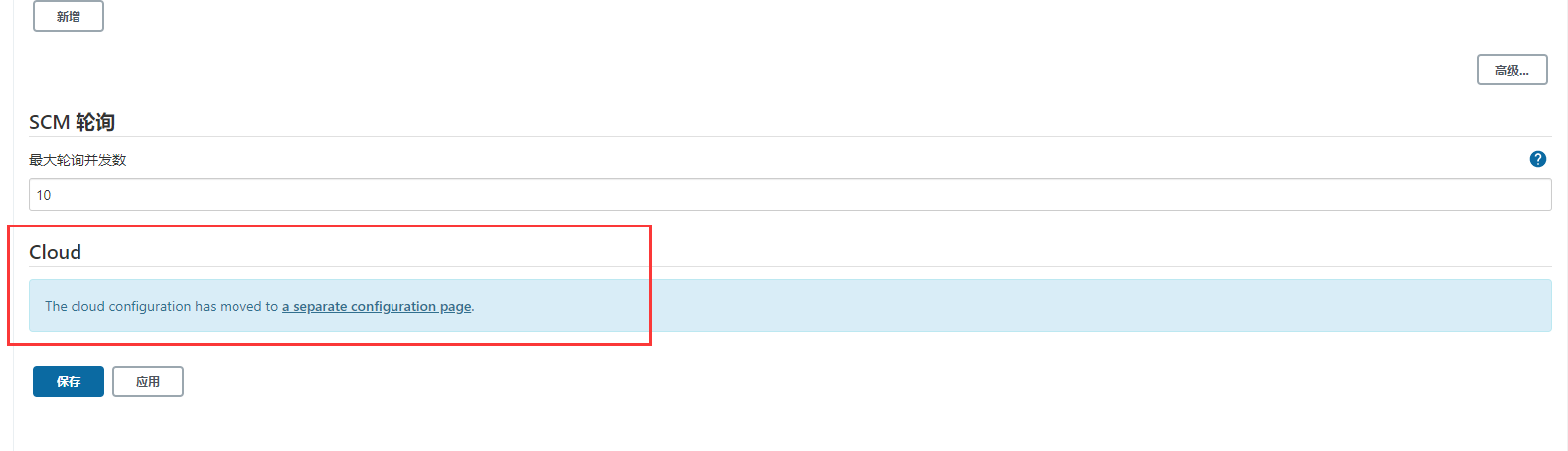

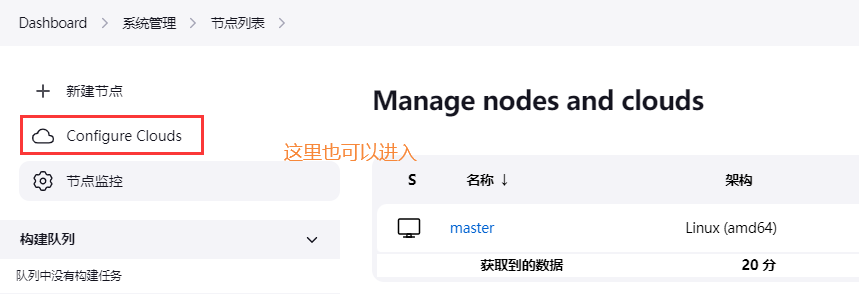

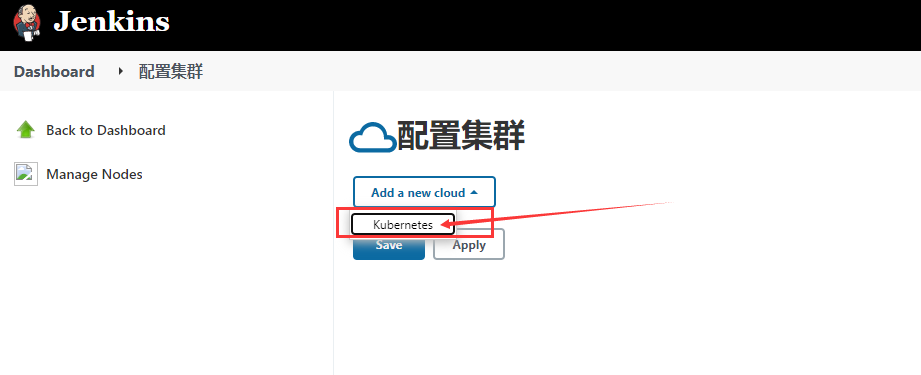

Go to Manage Jenkins → Configure System and scroll to the bottom of the page, or open Manage Nodes.

Click "a separate configuration page".

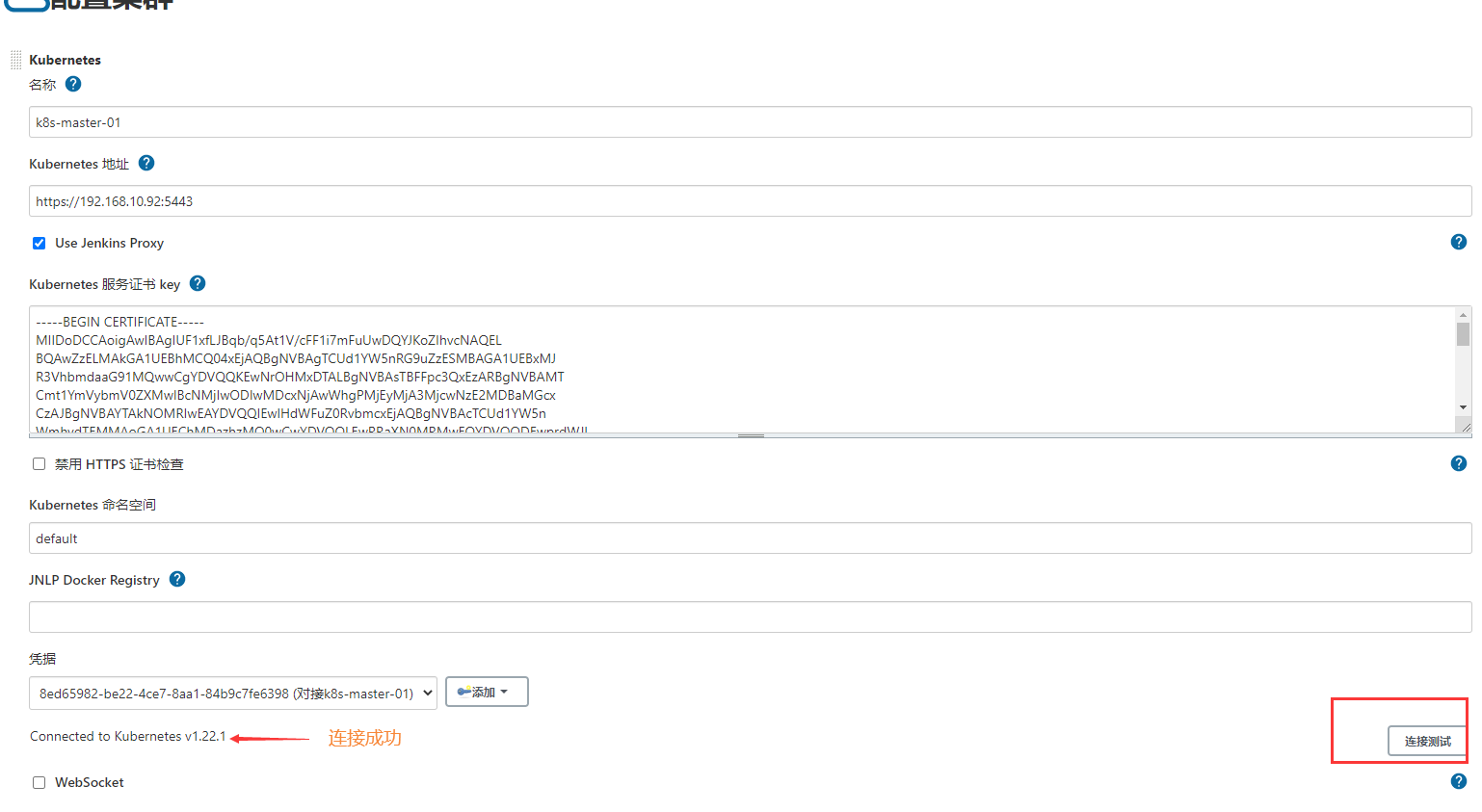

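- Kubernetes URL: the address of the Kubernetes service, that is, the apiserver address; usually the master node's IP plus port 6443

- Kubernetes server certificate key: the contents of the kube-ca.crt/pem file

- Credentials: the certificate credential created earlier

- Jenkins URL: the address the agent uses to connect to the Jenkins master

Leave everything else at its default. Click Test Connection; once the test succeeds, click Save.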
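Before using the connection test in the UI, the same certificate files can be checked from the Jenkins host with curl. This is only a sketch: the function name `check_apiserver` and the file paths in the usage line are hypothetical, and it assumes the apiserver listens on port 6443 as described above.

```shell
#!/bin/sh
# check_apiserver HOST CA_FILE CERT_FILE KEY_FILE
# Requests /version from the apiserver using client-certificate authentication.
# Returns 2 if any of the certificate files is missing or unreadable.
check_apiserver() {
    host=$1
    ca=$2
    cert=$3
    key=$4
    for f in "$ca" "$cert" "$key"; do
        if [ ! -r "$f" ]; then
            echo "missing or unreadable file: $f" >&2
            return 2
        fi
    done
    curl --silent --show-error --max-time 10 \
        --cacert "$ca" --cert "$cert" --key "$key" \
        "https://${host}:6443/version"
}

# Example usage (hypothetical IP and paths):
#   check_apiserver 192.168.10.90 ca.crt client.crt client.key
```

A JSON response with the cluster version suggests that the address and the three files are consistent; a TLS error usually points at a mismatched CA or client certificate.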

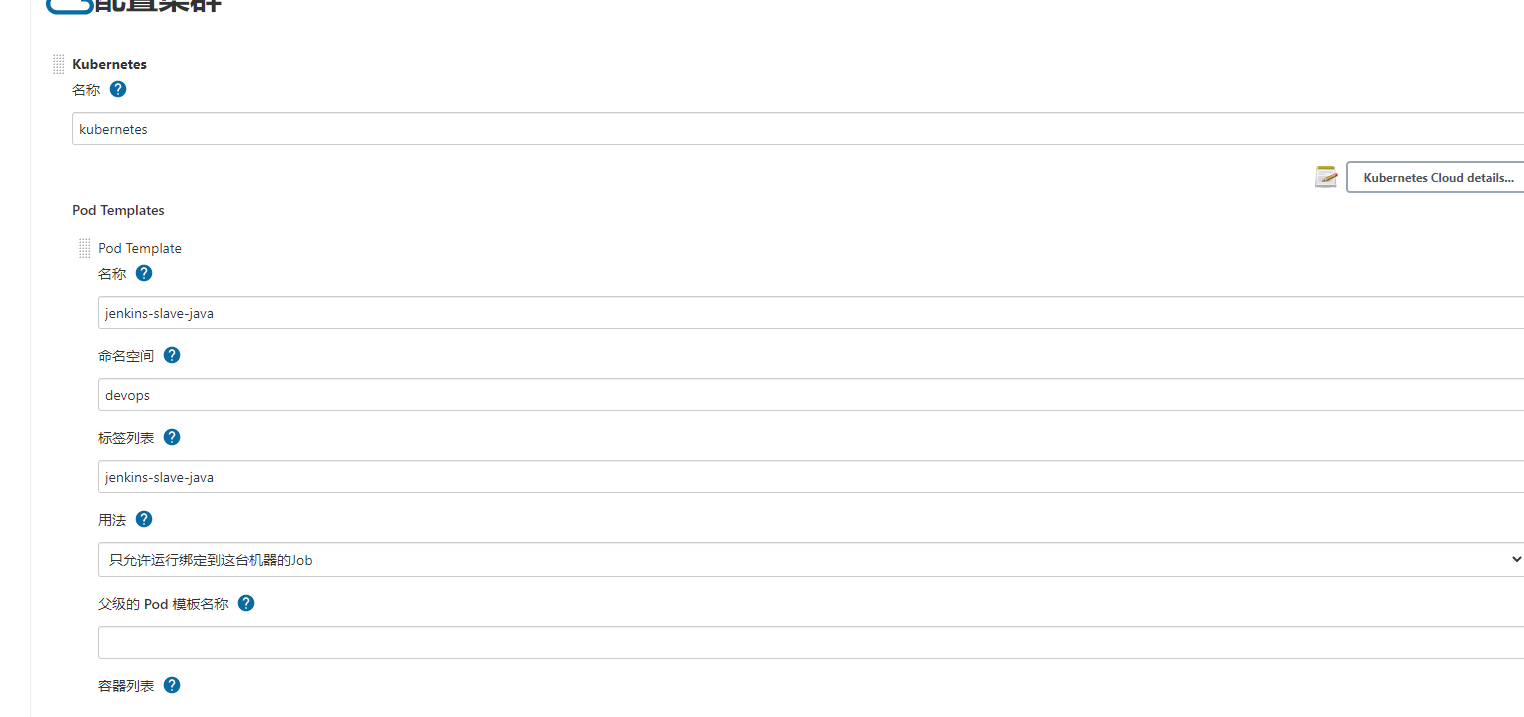

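3.5 K8S pod template configuration

When a build runs, the Jenkins kubernetes-plugin automatically creates a Pod in the Kubernetes cluster and starts a container named jnlp inside it. That container connects to Jenkins and runs the agent program, forming a Jenkins master/slave architecture; the slave then executes the build script. If the build has to produce a Docker image, a Docker-in-Docker approach (running Docker inside Docker) is required. If the build deploys into the cluster, kubectl can be used to run the commands, which means the installation of kubectl and its permissions have to be taken care of.

For convenience, configure a Pod Template below the Kubernetes connection settings. A template is only a template (like a class, it still has to be instantiated when used). Do not mistake the name and label list for the Pod's name and labels: they are only used by Jenkins to find and select the template. The name of a Pod that Jenkins creates automatically is the project name plus random letters. Here we enter jenkins-slave as the name and the corresponding namespace as the namespace.

Note that several containers also have to be added to the pod.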

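3.6 Add the jenkins-slave communication container [jnlp]

Container name: jnlp. Docker image: jenkins/jnlp-slave:4.13.2-1-jdk11 (note that the JDK version of this image must match the JDK version of the Jenkins environment, for example both on JDK 11 or later; otherwise the slave will not start). The remaining fields are shown in the screenshots below.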

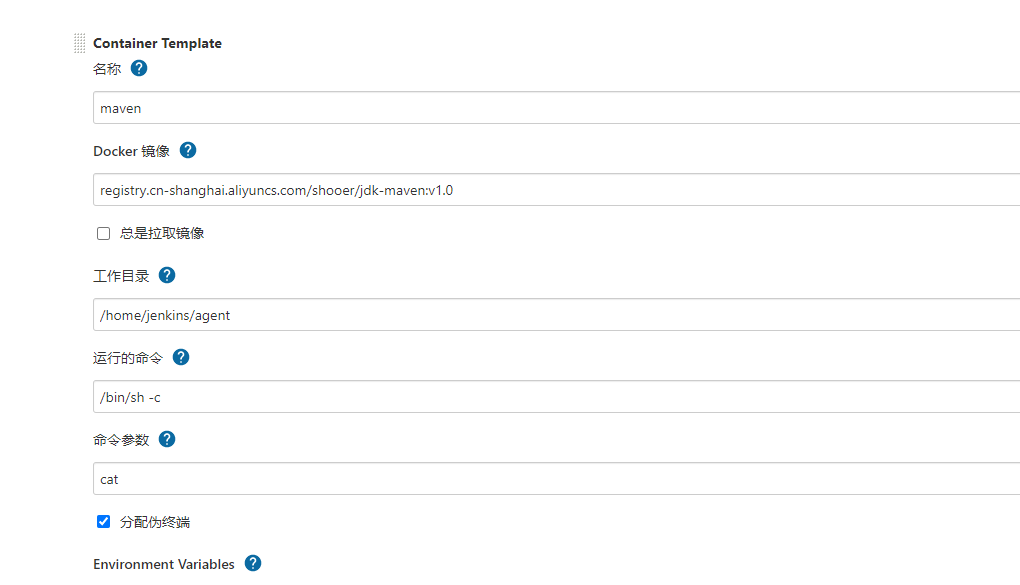

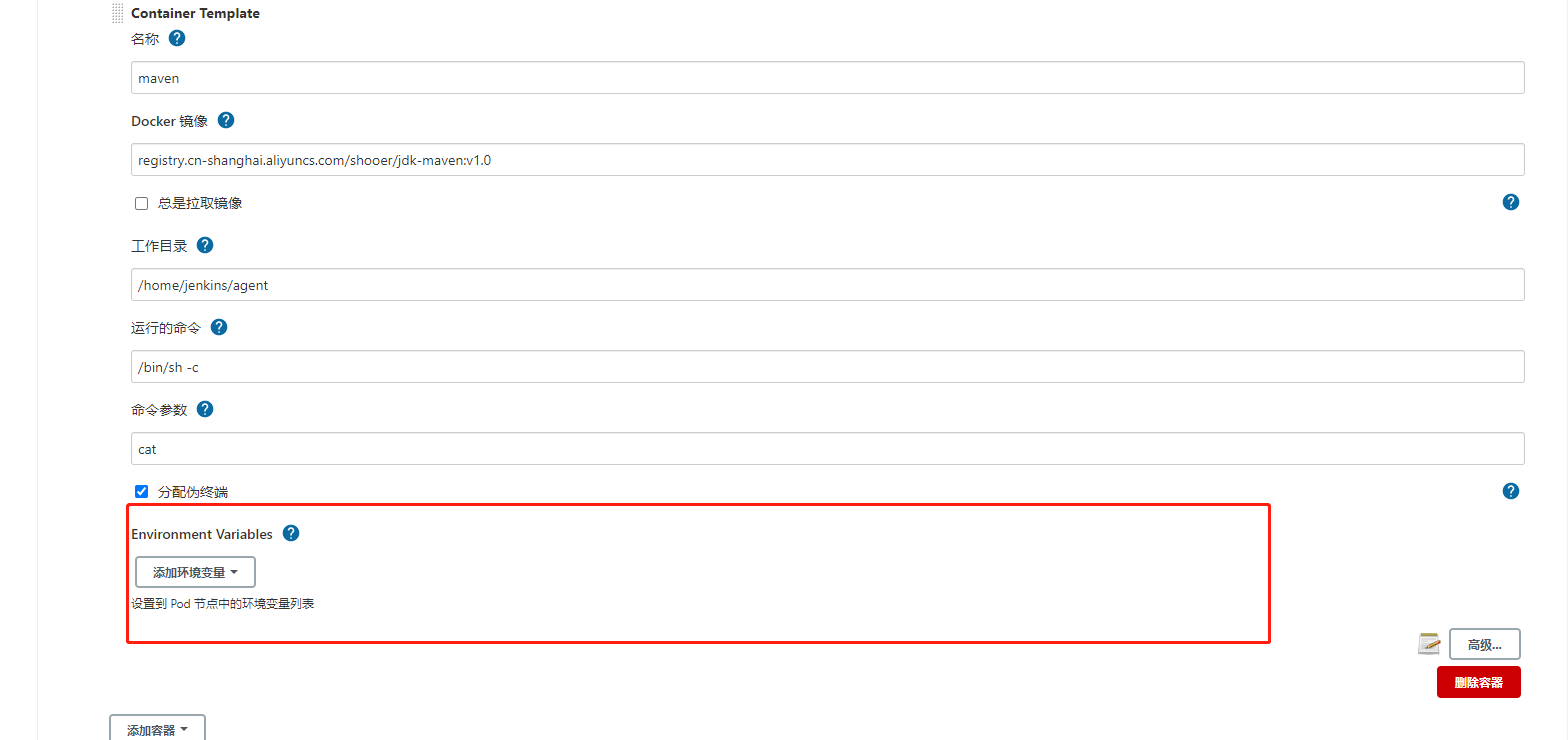

3.7 Then add the build container [maven]

Container name: maven. Docker image: registry.cn-shanghai.aliyuncs.com/shooer/jdk-maven:v1.0

Build the image yourself (see https://blog.csdn.net/cloud_engineer/article/details/126690346). The Dockerfile I use:

```dockerfile
FROM centos:7.9.2009
LABEL maintainer="shooter<tuobalongshen@126.com>"
ADD jdk-8u231-linux-x64.tar.gz /usr/local
ADD apache-maven-3.8.6-bin.tar.gz /usr/local
ENV JAVA_HOME=/usr/local/jdk1.8.0_231
ENV MAVEN_HOME=/usr/local/apache-maven-3.8.6
ENV CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar
ENV PATH=$JAVA_HOME/bin:$MAVEN_HOME/bin:$PATH
RUN ln -s $JAVA_HOME/bin/java /usr/bin/java
RUN ln -s $MAVEN_HOME/bin/mvn /usr/bin/mvn
# Create the directory used as the Maven local repository
RUN mkdir -p $MAVEN_HOME/maven-repertory
WORKDIR /root
```

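Add environment variables as needed. Be sure to disable the global java and mvn environment variables in Jenkins; otherwise the container picks up the Jenkins global variables instead of the ones defined in the image, and the build fails.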

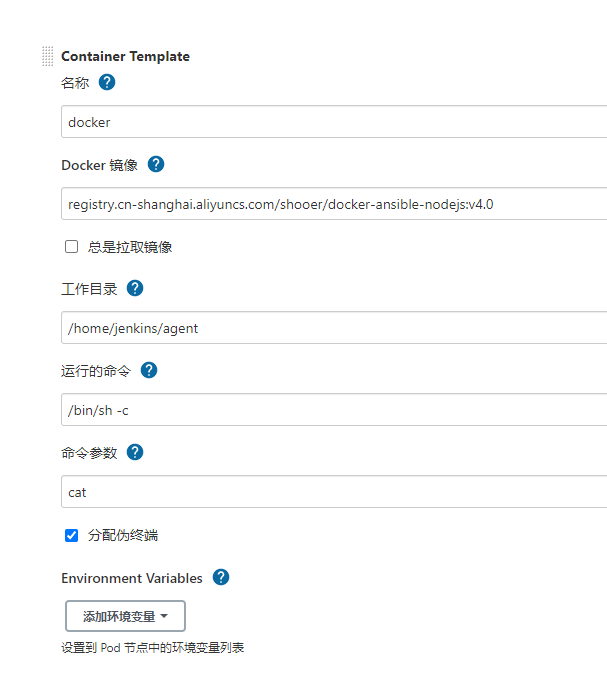

3.8 Add the image build container [docker]

Container name: docker. Docker image: registry.cn-shanghai.aliyuncs.com/shooer/docker-ansible-nodejs:v4.0

This image, which I use myself, also contains ansible and nodejs. Build the image yourself; the Dockerfile below can serve as a reference:

```dockerfile
FROM centos:7.9.2009
LABEL maintainer="shooter<tuobalongshen@126.com>"
RUN yum install -y yum-utils device-mapper-persistent-data lvm2
RUN yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
RUN yum install -y docker-ce-20.10.9-3.el7
COPY ./docker-compose /usr/local/bin
RUN chmod +x /usr/local/bin/docker-compose
RUN systemctl enable docker
WORKDIR /root
```

Example:

```shell
docker build -t docker:v20.10.9 .
docker run -it -d -v /var/run/docker.sock:/var/run/docker.sock --name ddd docker:v20.10.9
```

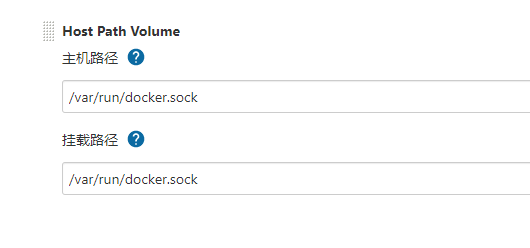

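When starting the container, remember to map the host's Docker socket [/var/run/docker.sock] into it.

Mapping the host's /var/run/docker.sock into the container has two effects:

- Inside the container, sending HTTP requests to /var/run/docker.sock is enough to talk to the Docker daemon. The official API documentation describes what is available in detail, including listing images and containers.

- If the container has the docker binary, running commands such as docker ps or docker-compose inside the container has the same effect as running them on the host. The docker binaries in the container and on the host are different files, but their requests go to the same Docker daemon.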
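The first point can be tried directly with curl. The sketch below assumes curl with Unix-socket support is installed in the container; the helper name `docker_api_get` and the `DOCKER_SOCK` override are hypothetical, while `/version`, `/containers/json`, and `/images/json` are endpoints of the Docker Engine API.

```shell
#!/bin/sh
# docker_api_get PATH
# Sends a GET request for PATH to the Docker daemon over the mounted socket.
docker_api_get() {
    sock=${DOCKER_SOCK:-/var/run/docker.sock}
    path=$1
    if [ ! -S "$sock" ]; then
        echo "no Docker socket at $sock" >&2
        return 1
    fi
    curl --silent --unix-socket "$sock" "http://localhost${path}"
}

# Example usage inside the docker container:
#   docker_api_get /version
#   docker_api_get /containers/json
#   docker_api_get /images/json
```

If the function reports that the socket is missing, the Host Path Volume for /var/run/docker.sock is probably not mounted into that container.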

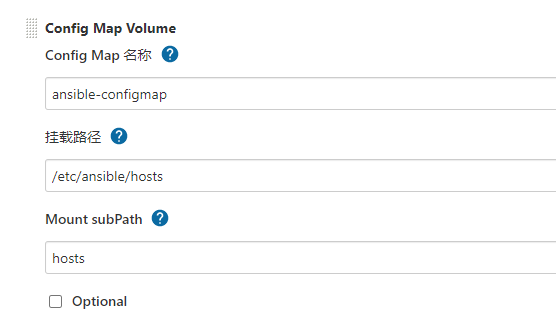

Mounting the Ansible ConfigMap

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: maven-configmap
  namespace: devops
data:
  hosts: |-
    [dev]
    192.168.0.206 ansible_ssh_user=root ansible_ssh_pass=456123
    [fat]
    192.168.0.205 ansible_ssh_user=root ansible_ssh_pass=123456
```

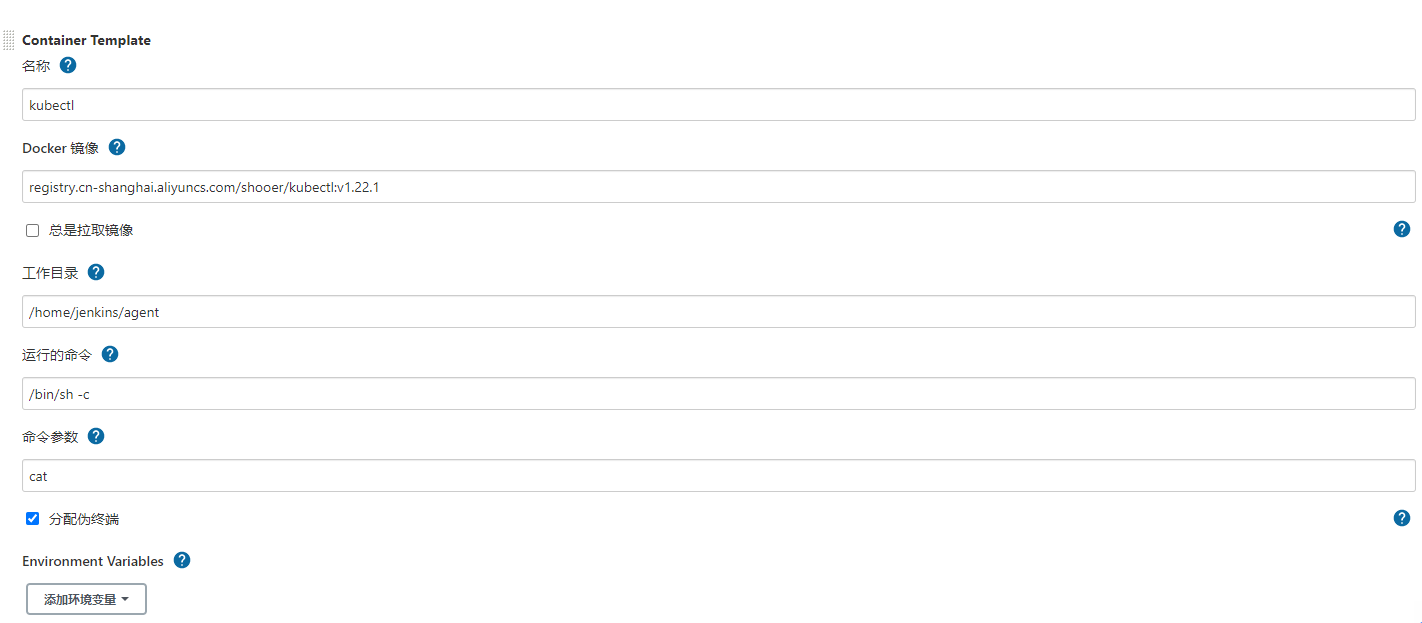

3.9 Add the kubectl container [kubectl]

Finally, add one more container named kubectl, which provides the kubectl command. Image: registry.cn-shanghai.aliyuncs.com/shooer/kubectl:v1.22.1 (you need to build the image yourself; see https://blog.csdn.net/A3536232/article/details/120270463).

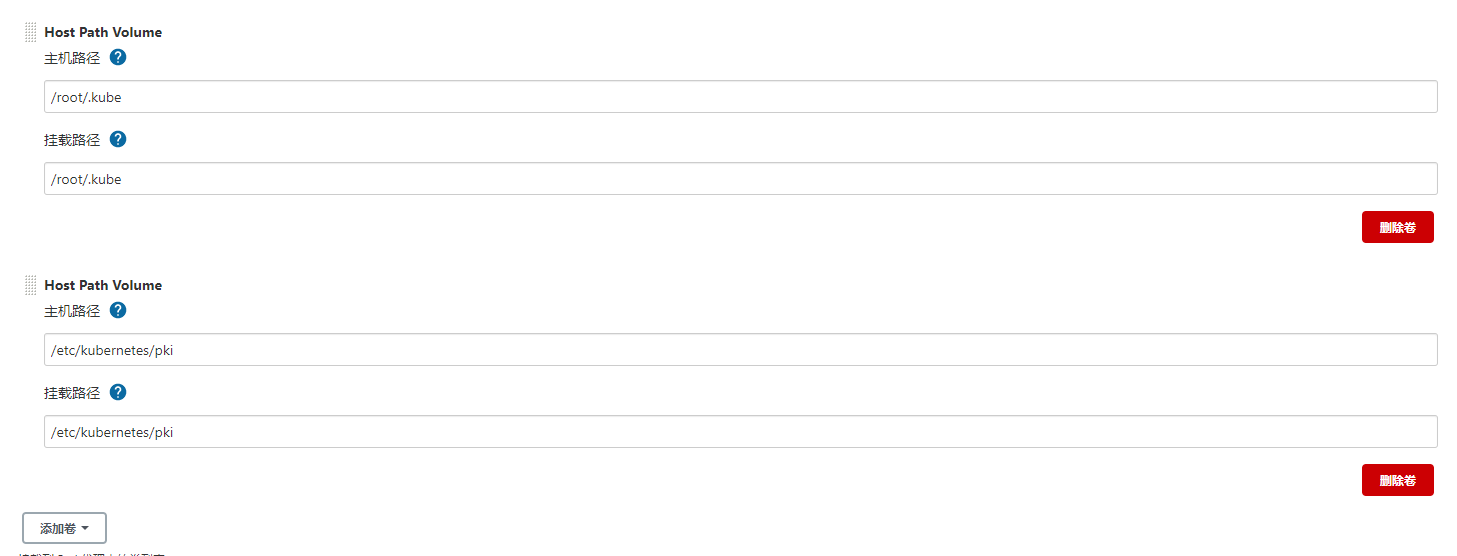

Next, add the Host Path Volumes:

```text
/var/run/docker.sock
/root/.kube/
/etc/kubernetes/pki
```

These volumes give the jenkins-slave pod the permissions it needs to run docker and to deploy to the K8S cluster with kubectl. Because the jenkins-slave pod may be scheduled onto any worker node, every worker node must have /root/.kube/ and /etc/kubernetes/pki. After configuring, click Save.

Copy the files and the certificate directory to each worker node; the container mounts above rely on them:

```shell
scp -r .kube root@192.168.10.95:/root/
scp -r .kube root@192.168.10.98:/root/
scp -r .kube root@192.168.10.99:/root/

scp -r pki root@192.168.10.95:/etc/kubernetes/
scp -r pki root@192.168.10.98:/etc/kubernetes/
scp -r pki root@192.168.10.99:/etc/kubernetes/
```
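With more worker nodes, the copy step is easier to maintain as a loop. This is a sketch under stated assumptions: the source directories are taken to be /root/.kube and /etc/kubernetes/pki on the master, and the `WORKERS` variable, the `distribute` function, and the `DRY_RUN` switch are hypothetical names.

```shell
#!/bin/sh
# Worker node IPs (the three addresses used in this article).
WORKERS="192.168.10.95 192.168.10.98 192.168.10.99"

# distribute
# Prints the scp commands by default; set DRY_RUN=0 to actually run them.
distribute() {
    for host in $WORKERS; do
        # Each pair is "source directory:destination directory".
        for pair in "/root/.kube:/root/" "/etc/kubernetes/pki:/etc/kubernetes/"; do
            src=${pair%%:*}
            dest=${pair#*:}
            if [ "${DRY_RUN:-1}" = "0" ]; then
                scp -r "$src" "root@${host}:${dest}"
            else
                echo "scp -r $src root@${host}:${dest}"
            fi
        done
    done
}

# Example usage:
#   distribute              # review the commands first
#   DRY_RUN=0 distribute    # then copy for real
```

Printing the commands first makes it easy to confirm the host list before anything is copied.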

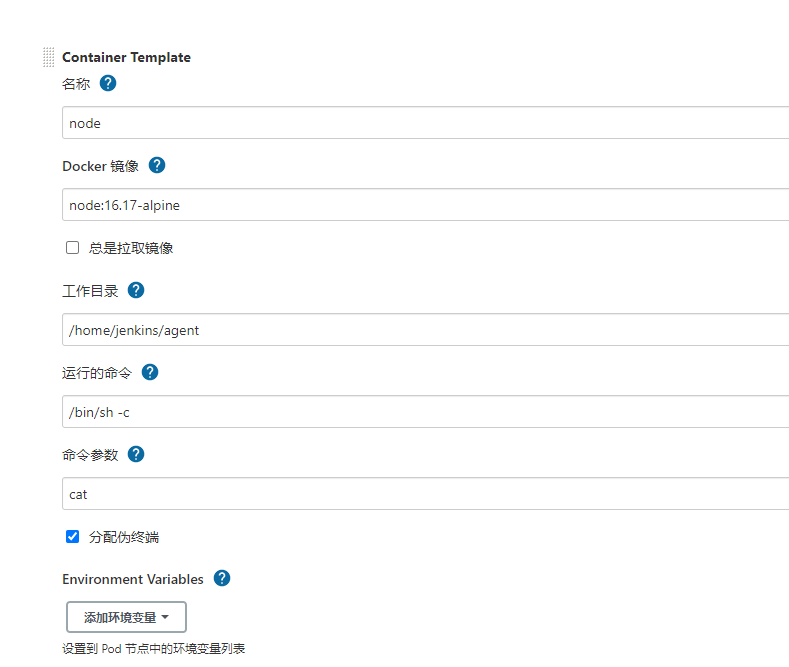

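To build front-end projects, you can either add a separate nodejs container or, as above, bundle nodejs into the docker container image.

To use a separate container, add one more container named node, which provides the nodejs commands. Image: node:16.17-alpine. It is used when building web projects.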

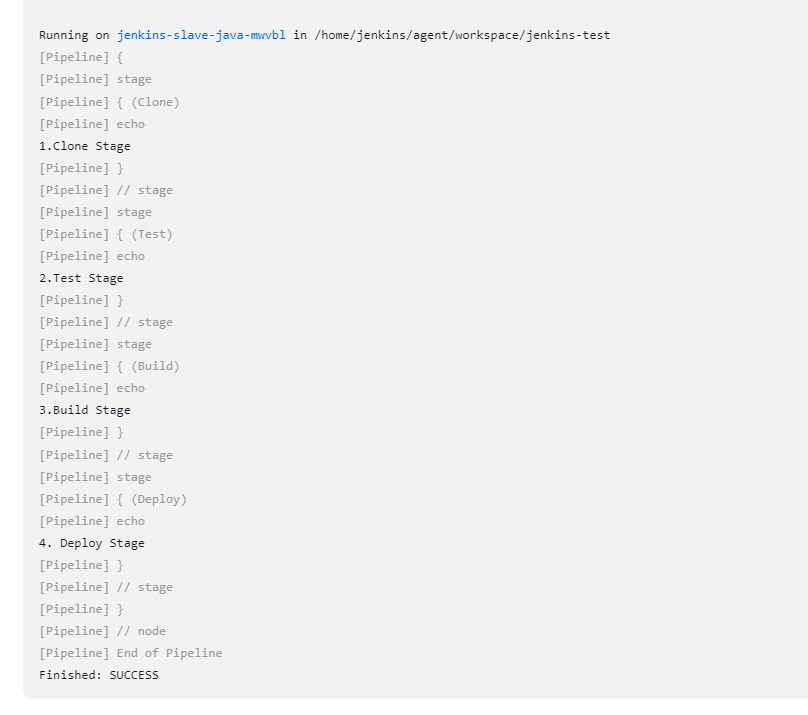

4. Create a pipeline project and run it immediately

```groovy
pipeline {
  agent {
    node {
      label 'jenkins-slave-java'
    }
  }
  stages {
    stage('Init') {
      steps{
        script{
          println "welcome to Nick learn"
        }
      }
    }
    stage('maven') {
      steps{
        script{
          container('maven') {
            sh 'java -version'
            sh 'mvn -v'
          }
        }
      }
    }
    stage('docker') {
      steps{
        script{
          container('docker') {
            sh 'docker-compose -v'
          }
        }
      }
    }
    stage('kubectl') {
      steps{
        script{
          container('kubectl') {
            sh 'pwd'
            sh 'kubectl get nodes'
          }
        }
      }
    }
  }
}
```

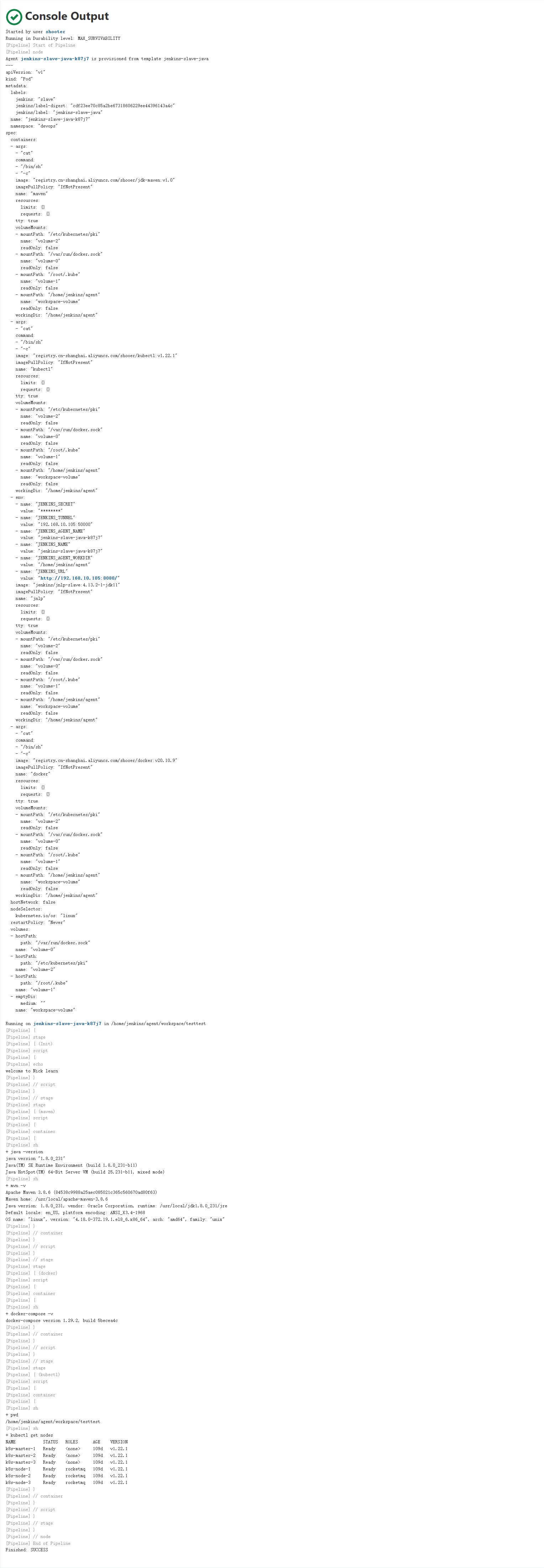

4.1 Execution result:

5. Common errors:

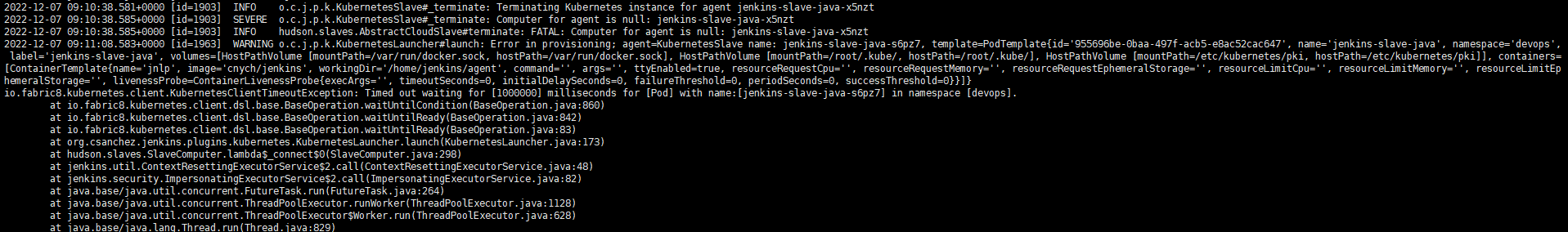

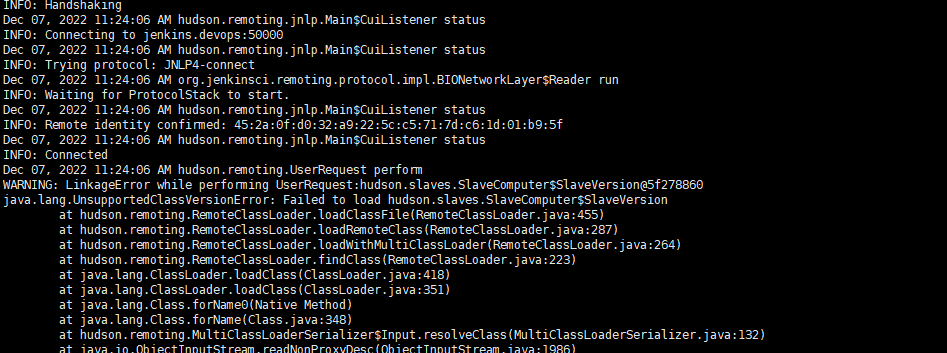

5.1 java.lang.UnsupportedClassVersionError: Failed to load hudson.slaves.SlaveComputer$SlaveVersion

5.2 Check the error in the slave container

```text
WARNING: LinkageError while performing UserRequest:hudson.slaves.SlaveComputer$SlaveVersion@5f278860
java.lang.UnsupportedClassVersionError: Failed to load hudson.slaves.SlaveComputer$SlaveVersion
```

The Jenkins service runs on JDK 11, so the jenkins/jnlp-slave image must also use JDK 11; the two have to match. Otherwise, when K8S schedules the jnlp-slave pod, the JDK mismatch produces the error above. The full log:

```text
INFO: Connected
Dec 07, 2022 11:24:06 AM hudson.remoting.UserRequest perform
WARNING: LinkageError while performing UserRequest:hudson.slaves.SlaveComputer$SlaveVersion@5f278860
java.lang.UnsupportedClassVersionError: Failed to load hudson.slaves.SlaveComputer$SlaveVersion
	at hudson.remoting.RemoteClassLoader.loadClassFile(RemoteClassLoader.java:455)
	at hudson.remoting.RemoteClassLoader.loadRemoteClass(RemoteClassLoader.java:287)
	at hudson.remoting.RemoteClassLoader.loadWithMultiClassLoader(RemoteClassLoader.java:264)
	at hudson.remoting.RemoteClassLoader.findClass(RemoteClassLoader.java:223)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
	at java.lang.Class.forName0(Native Method)
	at java.lang.Class.forName(Class.java:348)
	at hudson.remoting.MultiClassLoaderSerializer$Input.resolveClass(MultiClassLoaderSerializer.java:132)
	at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1986)
	at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1850)
	at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2160)
	at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1667)
	at java.io.ObjectInputStream.readObject(ObjectInputStream.java:503)
	at java.io.ObjectInputStream.readObject(ObjectInputStream.java:461)
	at hudson.remoting.UserRequest.deserialize(UserRequest.java:289)
	at hudson.remoting.UserRequest.perform(UserRequest.java:189)
	at hudson.remoting.UserRequest.perform(UserRequest.java:54)
	at hudson.remoting.Request$2.run(Request.java:376)
	at hudson.remoting.InterceptingExecutorService.lambda$wrap$0(InterceptingExecutorService.java:78)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at hudson.remoting.Engine$1.lambda$newThread$0(Engine.java:122)
	at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.UnsupportedClassVersionError: hudson/slaves/SlaveComputer$SlaveVersion has been compiled by a more recent version of the Java Runtime (class file version 55.0), this version of the Java Runtime only recognizes class file versions up to 52.0
	at java.lang.ClassLoader.defineClass1(Native Method)
	at java.lang.ClassLoader.defineClass(ClassLoader.java:756)
	at java.lang.ClassLoader.defineClass(ClassLoader.java:635)
	at hudson.remoting.RemoteClassLoader.loadClassFile(RemoteClassLoader.java:453)
	... 24 more
```

Error reference: https://developer.aliyun.com/article/1005052
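The class file versions in the "Caused by" line identify the two JDKs involved: for Java 5 and later, the Java release number is the class file major version minus 44, so 55.0 is Java 11 (the Jenkins side) and 52.0 is Java 8 (the agent side). A small helper to do the conversion, with the hypothetical name `class_version_to_java`:

```shell
#!/bin/sh
# class_version_to_java VERSION
# Converts a class file version such as "55.0" to the Java release number.
# Valid for Java 5 and later (class file major version 49 and above).
class_version_to_java() {
    major=${1%%.*}          # drop the minor part, e.g. "55.0" -> "55"
    echo $((major - 44))
}

# Example usage:
#   class_version_to_java 55.0
#   class_version_to_java 52.0
```

In this log the agent needs an image built on JDK 11 or later, which is why the jdk11 tag of the jnlp image is used.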

5.3 Now look at the following pipeline

Here the agent is jenkins/jnlp-slave:4.13.2-1-jdk11, the slave image officially provided by Jenkins. The stages are defined on top of this image, and the sh step runs in that container.

```groovy
pipeline {
  agent {
    kubernetes {
      yaml """
apiVersion: v1
kind: Pod
metadata:
  labels:
    agent: test
spec:
  containers:
  - name: jnlp
    image: jenkins/jnlp-slave:4.13.2-1-jdk11
    imagePullPolicy: IfNotPresent
    tty: true
"""
    }
  }
  stages {
    stage('Run maven') {
      steps {
        container('jnlp') {
          sh 'java -version'
        }
      }
    }
  }
}
```

For publishing front-end projects, see: https://zhuanlan.zhihu.com/p/408701835

5.4 Pipeline execution completes

5.5 mvn reports that JAVA_HOME is not set correctly

```text
+ mvn
The JAVA_HOME environment variable is not defined correctly
This environment variable is needed to run this program
NB: JAVA_HOME should point to a JDK not a JRE
```

|

1 2 3 4 5 6 7 |

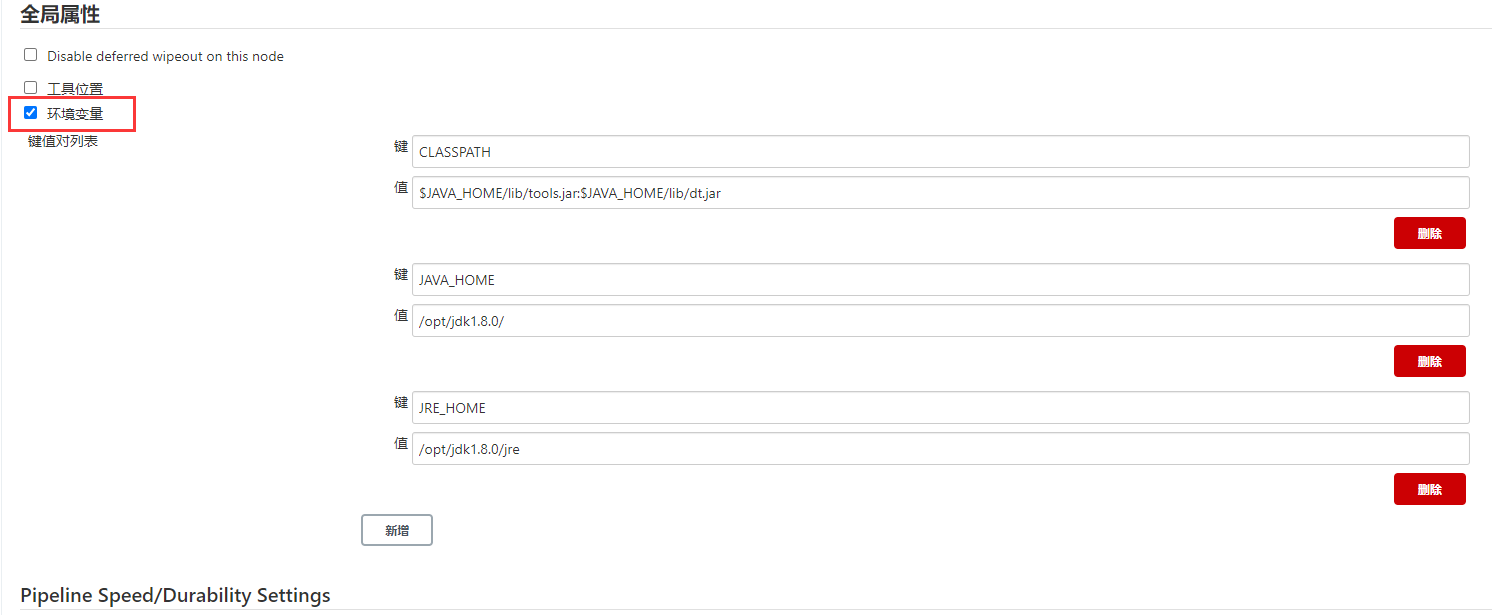

这个错误是因为JAVA_HOME环境变量的设定问题: jenkins环境变量优先级为: 1. 全局环境变量 -> Container Template 局部环境变量 -> Dockerfile最低 如果你使用maven镜像启动pod会首先使用jenkins全局环境变量, 这时候如果你镜像内的环境目录和jenkins全局环境变量设置的目录不一致,则会出现这种情况。 如果jenkins使用k8s作为动态slave建议关闭jenkins全局环境变量。否则Dockerfile里设定的环境变量会被全局覆盖掉,然后报错。 |

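Solution:

Check whether the global environment variables (Configure System -> Global properties -> Environment variables, see the figure below) and the local environment variables are set, and disable them. Using the environment variables built into the image by default is enough.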
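To see which JAVA_HOME the container actually ends up with, a quick check can be run from an `sh` step inside the maven container. This is a sketch of a sanity check, not the exact test the mvn launcher performs; the function name `check_java_home` is hypothetical.

```shell
#!/bin/sh
# check_java_home
# Reports whether the current JAVA_HOME contains an executable bin/java.
check_java_home() {
    if [ -z "${JAVA_HOME:-}" ]; then
        echo "JAVA_HOME is not set"
        return 1
    fi
    if [ ! -x "$JAVA_HOME/bin/java" ]; then
        echo "JAVA_HOME=$JAVA_HOME has no executable bin/java"
        return 1
    fi
    echo "JAVA_HOME=$JAVA_HOME looks usable"
}

# Example usage inside the maven container:
#   check_java_home
```

If the reported path is the one from the Jenkins global properties rather than /usr/local/jdk1.8.0_231 from the image, the global variable is still overriding the image's own setting.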

Notes on volume mounts and configuration file mounts

Global:

Local:

6. Mount the configuration file and the local repository for Maven

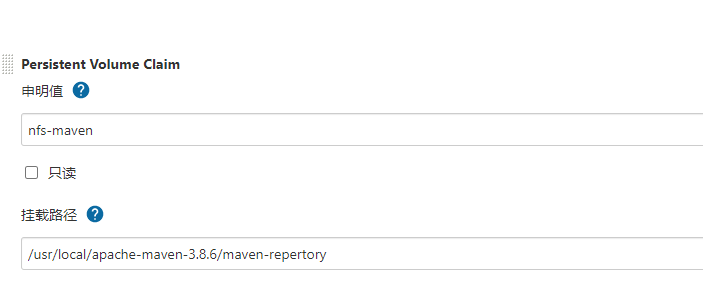

6.1 Create a PVC and mount it as the local repository

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-maven
  namespace: devops
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-csi
  resources:
    requests:
      storage: 20Gi
```

PVC name: nfs-maven

Mount path: /usr/local/apache-maven-3.8.6/maven-repertory
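Before referencing the claim in the Pod Template, it can be worth confirming that it has been bound. The sketch below polls the claim's phase with kubectl; the function names and the `KUBECTL` override are hypothetical. Note that with a storage class that binds only when a pod first uses the claim, the phase may stay Pending until the first build runs.

```shell
#!/bin/sh
# KUBECTL can be overridden, for example to point at a wrapper script.
KUBECTL=${KUBECTL:-kubectl}

# pvc_phase NAME NAMESPACE
# Prints the phase of a PVC, for example Pending or Bound.
pvc_phase() {
    $KUBECTL get pvc "$1" -n "$2" -o jsonpath='{.status.phase}'
}

# wait_for_pvc NAME NAMESPACE [TRIES]
# Polls every 2 seconds until the PVC is Bound or the tries run out.
wait_for_pvc() {
    name=$1
    namespace=$2
    tries=${3:-30}
    while [ "$tries" -gt 0 ]; do
        if [ "$(pvc_phase "$name" "$namespace")" = "Bound" ]; then
            echo "PVC $name is Bound"
            return 0
        fi
        tries=$((tries - 1))
        sleep 2
    done
    echo "PVC $name did not become Bound" >&2
    return 1
}

# Example usage:
#   wait_for_pvc nfs-maven devops
```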

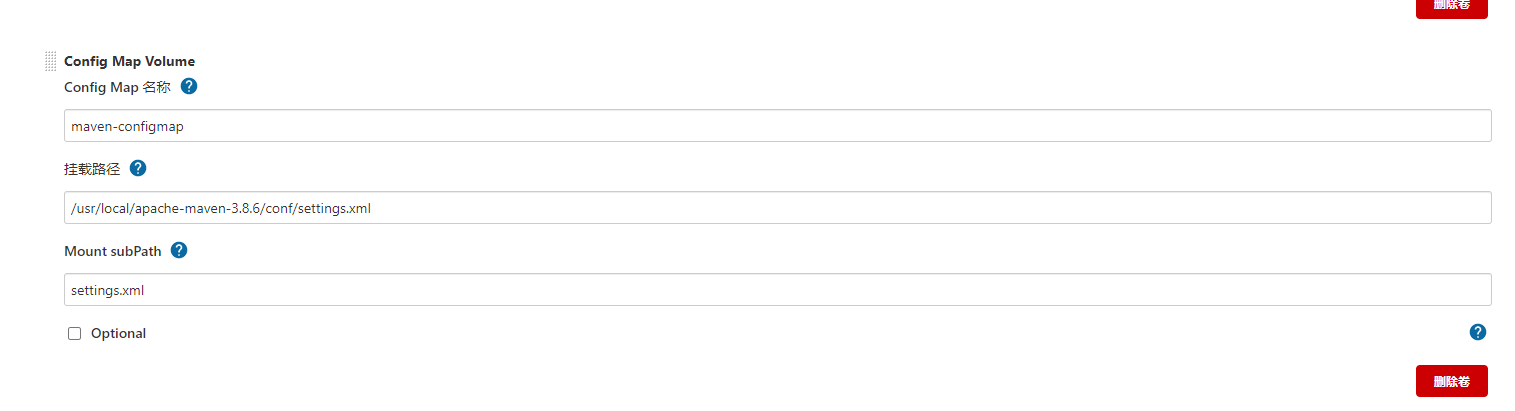

6.2 Create the ConfigMap for the configuration file (remember to adjust the repository path, the private repository address, and the private repository account and password in the file)

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: maven-configmap
  namespace: devops
data:
  settings.xml: |-
    <?xml version="1.0" encoding="UTF-8"?>
    <settings xmlns="http://maven.apache.org/SETTINGS/1.2.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.2.0 http://maven.apache.org/xsd/settings-1.2.0.xsd">
      <localRepository>/usr/local/apache-maven-3.8.6/maven-repertory</localRepository>
      <pluginGroups>
        <pluginGroup>com.spotify</pluginGroup>
      </pluginGroups>
      <proxies>
      </proxies>
      <servers>
        <server>
          <id>maven-public</id>
          <username>admin</username>
          <password>123456</password>
        </server>
        <server>
          <id>releases</id>
          <username>admin</username>
          <password>123456</password>
        </server>
        <server>
          <id>snapshots</id>
          <username>admin</username>
          <password>123456</password>
        </server>
      </servers>
      <mirrors>
        <mirror>
          <id>maven-public</id>
          <name>maven-public</name>
          <mirrorOf>*</mirrorOf>
          <url>http://192.168.10.102:8081/repository/maven-public/</url>
        </mirror>
      </mirrors>
      <profiles>
      </profiles>
    </settings>
```

If subPath is not set, /usr/local/apache-maven-3.8.6/conf/settings.xml is treated as a directory. Following Linux mount semantics, the mount then hides every file that was already under the mount point.
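A quick way to confirm the mount behaves as intended is to check, from inside the maven container, whether settings.xml is a regular file or a directory. This is a sketch; the function name `check_settings_mount` is hypothetical and the default path is the one used in this article.

```shell
#!/bin/sh
# check_settings_mount [PATH]
# Prints "file", "directory", or "missing" for the given settings.xml path.
check_settings_mount() {
    path=${1:-/usr/local/apache-maven-3.8.6/conf/settings.xml}
    if [ -f "$path" ]; then
        echo "file"
    elif [ -d "$path" ]; then
        echo "directory"
    else
        echo "missing"
    fi
}

# Example usage inside the maven container:
#   check_settings_mount
```

A result of "directory" suggests the ConfigMap was mounted without subPath; "file" is what Maven needs.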

7. The Jenkinsfile I use for my own pipeline

```groovy
pipeline {
  agent {
    node {
      label 'jenkins-slave-java'
    }
  }
  options {
    timestamps()
    skipDefaultCheckout()
    disableConcurrentBuilds()
    timeout(time: 1, unit: 'HOURS')
    //retry(3)
  }
  // PRO, JVMOPS, rand and projectname are expected to be supplied as job parameters.
  environment{
    CPORT='80'
    RNAME='local'
    JVMOPS="'${JVMOPS}'"
    BUILD_RELY_SHELL='mvn clean deploy -U'
    BUILD_SHELL='mvn clean package -U -DskipTests'
    TEST_SHELL='mvn test'
    MIRRORING='192.168.0.211:5000'
    CACHE_DIR='./$CI_PROJECT_NAME-server/target'
    CI_PROJECT_NAME="${JOB_NAME}"
    CI_COMMIT_SHORT_SHA="${rand}"
    HARBOR_CI_REGISTRY_USER='xiaozi'
    HARBOR_CI_REGISTRY_PASSWORD='CKIDx3245'
    HARBOR_CI_REGISTRY='xzharbor.xiaozikeji.cn'
    PINPOINT='192.168.0.197'
    ENVS="${PRO}"
  }
  stages {
    stage('Init') {
      steps{
        echo "Project directory: ${WORKSPACE}"
        echo "Build branch: ${GIT_BRANCH}"
        echo "JOB_NAME: ${JOB_NAME}"
        echo "JOB_BASE_NAME: ${JOB_BASE_NAME}"
        echo "BUILD_ID: ${BUILD_ID}"
        echo "BUILD_NUMBER: ${BUILD_NUMBER}"
        echo "Target environment: ${PRO}"
        echo "xmx: ${JVMOPS}"
        script{
          // Abort early if the requested environment is not a known one.
          array=["uat","fat","dev","sim","app1","app2"]
          if ("$array[*]" =~ "${PRO}"){
            echo "Deploying to environment ${PRO}"
          }else{
            echo "Unknown environment ${PRO}, please check..."
            sh "exit 1"
          }
          // Production-like environments (app*) use larger JVM settings and the Harbor registry.
          if ("$PRO" =~ "app"){
            JVMOPS='"-Xms1024m -Xmx1024m -XX:MetaspaceSize=512m -XX:MaxMetaspaceSize=512m"'
            PINPOINT="172.30.48.46"
            MIRRORING="${HARBOR_CI_REGISTRY}"
            RNAME="$PRO"
          }
          // Derive the project repository URL and project name from the CI repository URL.
          git_url = scm.userRemoteConfigs[0].url
          repositoryUrl = sh (script:"echo ${git_url} | sed 's/xiaozi-cicd/${projectname}/g'", returnStdout:true).trim()
          CI_PROJECT_NAME = sh (script:"echo ${repositoryUrl} | awk -F '/' '{print \$NF}' | awk -F '.' '{print \$1}'", returnStdout:true).trim()
          echo "CI_PROJECT_NAME: ${CI_PROJECT_NAME}"
          if("${CI_PROJECT_NAME}"=="xiaozi-sso"){
            CI_PROJECT_NAME="xiaozi-sso-auth"
          }
          if("${CI_PROJECT_NAME}"=="xiaozi-maintain-org"){
            CI_PROJECT_NAME="xiaozi-xmo"
          }
          if("${CI_PROJECT_NAME}"=="xiaozi-maintain-business"){
            CI_PROJECT_NAME="xiaozi-xmb"
          }
        }
        echo "Current project: ${CI_PROJECT_NAME}"
        echo "Current git url: ${repositoryUrl}"
        git branch: "${GIT_BRANCH}", credentialsId: '6ead0218-4984-4102-81e5-3b15d7c932e5', url: "${repositoryUrl}"
        script{
          container('maven') {
            // Each sh step starts a new shell, so cd and the build command share one step.
            if( "${CI_PROJECT_NAME}"=="xiaozi-gateway" || "${CI_PROJECT_NAME}"=="xiaozi-open-gateway" || "${CI_PROJECT_NAME}"=="xiaozi-maintain-gateway" || "${CI_PROJECT_NAME}"=="xiaozi-seller-gateway" ) {
              sh "cd ${WORKSPACE} && ${BUILD_SHELL}"
            }else if("${CI_PROJECT_NAME}"=="xxl-job-executor" || "${CI_PROJECT_NAME}"=="xxl-job-admin"){
              sh "cd ${WORKSPACE} && ${BUILD_SHELL}"
            }else if("${CI_PROJECT_NAME}"=="xiaozi-sso-auth"){
              sh "cd ${WORKSPACE}/${CI_PROJECT_NAME}-server && ${BUILD_SHELL}"
            }else{
              sh "cd ${WORKSPACE}/${CI_PROJECT_NAME}-client && ${BUILD_RELY_SHELL}"
              sh "cd ${WORKSPACE}/${CI_PROJECT_NAME}-dto && ${BUILD_RELY_SHELL}"
              sh "cd ${WORKSPACE}/${CI_PROJECT_NAME}-server && ${BUILD_SHELL}"
            }
          }
        }
      }
    }
    stage('Test'){
      steps {
        script{
          sh "echo ${CI_PROJECT_NAME}"
          container('maven') {
            if( "${CI_PROJECT_NAME}"=="xiaozi-gateway" || "${CI_PROJECT_NAME}"=="xiaozi-open-gateway" || "${CI_PROJECT_NAME}"=="xiaozi-maintain-gateway" || "${CI_PROJECT_NAME}"=="xiaozi-seller-gateway" ) {
              sh "cd ${WORKSPACE} && ${TEST_SHELL}"
            }else if("${CI_PROJECT_NAME}"=="xxl-job-executor" || "${CI_PROJECT_NAME}"=="xxl-job-admin"){
              sh "cd ${WORKSPACE} && ${TEST_SHELL}"
            }else if("${CI_PROJECT_NAME}"=="xiaozi-sso"){
              sh "cd ${WORKSPACE}/${CI_PROJECT_NAME}-server && ${TEST_SHELL}"
            }else{
              sh "cd ${WORKSPACE}/${CI_PROJECT_NAME}-server && ${TEST_SHELL}"
            }
          }
        }
      }
    }
    stage('Maven') {
      steps{
        script{
          container('maven') {
            sh 'java -version'
            sh 'ls /usr/local/apache-maven-3.8.6/conf/'
            sh 'mvn -v'
          }
        }
      }
    }
    stage('Docker') {
      steps{
        script{
          if ("$PRO" =~ "app*"){
            MIRRORING="${HARBOR_CI_REGISTRY}"
            ENVS="pro"
          }
          echo "Current environment: $ENVS"
          echo "Project name: ${CI_PROJECT_NAME}"
          // Lower-case the random string and drop its first four characters to form the image tag.
          CI_COMMIT_SHORT_SHA = sh (script:"echo ${CI_COMMIT_SHORT_SHA} | tr 'A-Z' 'a-z' | cut -c5-", returnStdout:true).trim()
          container('docker') {
            sh """
            ls ${WORKSPACE}/${CI_PROJECT_NAME}-server/
            ls ${WORKSPACE}/${CI_PROJECT_NAME}-server/target/
            cd ${WORKSPACE}/${CI_PROJECT_NAME}-server/
            cp -rf /usr/share/zoneinfo/Asia/Shanghai ./
            echo ${MIRRORING}/xiaozi-$ENVS/$CI_PROJECT_NAME:$CI_COMMIT_SHORT_SHA
            docker build --build-arg PPIP=$PINPOINT -t $MIRRORING/xiaozi-$ENVS/$CI_PROJECT_NAME:$CI_COMMIT_SHORT_SHA .
            if [[ "$PRO" =~ "app" ]];then docker login -u $HARBOR_CI_REGISTRY_USER -p $HARBOR_CI_REGISTRY_PASSWORD $HARBOR_CI_REGISTRY; fi
            docker push $MIRRORING/xiaozi-$ENVS/$CI_PROJECT_NAME:$CI_COMMIT_SHORT_SHA
            docker rmi ${MIRRORING}/xiaozi-$ENVS/$CI_PROJECT_NAME:$CI_COMMIT_SHORT_SHA
            """
          }
        }
      }
    }
    stage('Kubectl') {
      steps{
        script{
          container('kubectl') {
            sh 'pwd'
            sh 'kubectl get nodes'
            sh "kubectl set image deployment ${CI_PROJECT_NAME} ${CI_PROJECT_NAME}=${MIRRORING}/xiaozi-$ENVS/$CI_PROJECT_NAME:$CI_COMMIT_SHORT_SHA -n xiaozi-${PRO}"
          }
        }
      }
    }
  }
}
```
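The Docker stage derives the image tag by lower-casing the `rand` parameter and dropping its first four characters, then combines registry, environment, project, and tag into the image reference. The same logic can be tried in isolation with the sketch below; the function names `short_tag` and `image_ref` are hypothetical, and the sample values in the usage lines are only illustrations.

```shell
#!/bin/sh
# short_tag VALUE
# Lower-cases VALUE and keeps everything from the fifth character onward,
# mirroring the "tr 'A-Z' 'a-z' | cut -c5-" step in the pipeline.
short_tag() {
    printf '%s\n' "$1" | tr 'A-Z' 'a-z' | cut -c5-
}

# image_ref REGISTRY ENV PROJECT TAG
# Builds the reference in the form REGISTRY/xiaozi-ENV/PROJECT:TAG.
image_ref() {
    echo "$1/xiaozi-$2/$3:$4"
}

# Example usage:
#   tag=$(short_tag "ABCD1234EF")
#   image_ref 192.168.0.211:5000 dev xiaozi-gateway "$tag"
```

Docker tags and repository names are conventionally lower-case, which is presumably why the pipeline lower-cases the value before using it.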

Reference for common Jenkins errors

https://jiuaidu.com/jianzhan/1024917/ (thanks to the author)

Image: jdk-maven-docker-ansible-nodejs-python3.6

```dockerfile
FROM centos:7.9.2009
LABEL maintainer="shooter<tuobalongshen@126.com>"

# JDK and Maven
ADD jdk-8u231-linux-x64.tar.gz /usr/local
ADD apache-maven-3.8.1.tar.gz /usr/local
ENV JAVA_HOME=/usr/local/jdk1.8.0_231
ENV MAVEN_HOME=/usr/local/apache-maven-3.8.1
ENV CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar
ENV PATH=$JAVA_HOME/bin:$MAVEN_HOME/bin:$PATH
RUN ln -s $JAVA_HOME/bin/java /usr/bin/java
RUN ln -s $MAVEN_HOME/bin/mvn /usr/bin/mvn
# Create the directory used as the Maven local repository
RUN mkdir -p $MAVEN_HOME/maven-repertory

# Switch yum to the Aliyun mirror
RUN yum install -y wget
RUN mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo_bak
RUN wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
RUN yum makecache
RUN yum install -y iproute

# Docker and docker-compose
RUN yum install -y yum-utils device-mapper-persistent-data lvm2
RUN yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
RUN yum install -y docker-ce-20.10.9-3.el7
COPY ./docker-compose /usr/local/bin
RUN chmod +x /usr/local/bin/docker-compose
RUN systemctl enable docker

# Ansible, Node.js and Python 3.6
RUN yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm
RUN yum install ansible -y
RUN yum install -y nodejs
RUN node -v
RUN yum install -y python36
RUN pip3.6 install requests
RUN echo "export PYTHONIOENCODING=UTF-8" >> /etc/profile
WORKDIR /root
```

- Permanent link to this article: https://www.yoyoask.com/?p=9572

- When reposting, please credit: published by shooter on SHOOTER