Rocky Linux image download page. Installing the operating system on the nodes is omitted here and left to the reader.

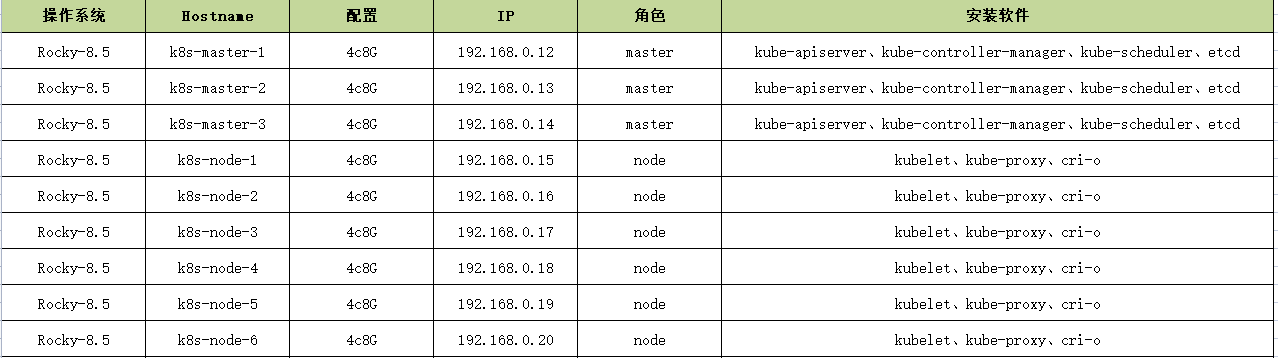

1. Cluster planning

Control node / configuration-generation node: qist: 192.168.0.151

Working directory: /opt

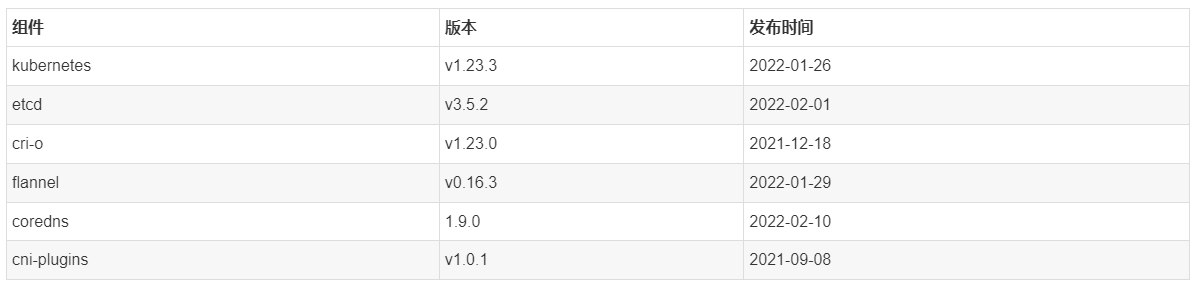

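1.1 Main component versions

1.2 Main configuration policies

kube-apiserver:

- High availability through a local nginx layer-4 transparent proxy on every node;

- Insecure port 8080 and anonymous access are disabled;

- HTTPS requests are accepted on secure port 5443;

- Strict authentication and authorization policies (x509, token, RBAC);

- Bootstrap token authentication is enabled to support kubelet TLS bootstrapping;

- kubelet and etcd are accessed over HTTPS, so the traffic is encrypted;

kube-controller-manager:

- 3-node high availability;

- The insecure port is disabled; HTTPS requests are accepted on secure port 10257;

- Uses a kubeconfig to access the secure port of the apiserver;

- Automatically approves kubelet certificate signing requests (CSR) and rotates certificates when they expire;

- Each controller accesses the apiserver with its own ServiceAccount;

kube-scheduler:

- 3-node high availability;

- Uses a kubeconfig to access the secure port of the apiserver;

- The insecure port is disabled; HTTPS requests are accepted on secure port 10259;

kubelet:

- Bootstrap tokens are created dynamically with kubeadm instead of being configured statically in the apiserver;

- Client and server certificates are generated automatically through TLS bootstrapping and rotated when they expire;

- The main parameters are set in a JSON file of type KubeletConfiguration;

- The read-only port is disabled; HTTPS requests are accepted on secure port 10250, every request is authenticated and authorized, and anonymous or unauthorized access is rejected;

- Uses a kubeconfig to access the secure port of the apiserver;

kube-proxy:

- Uses a kubeconfig to access the secure port of the apiserver;

- Uses the ipvs proxy mode;

Cluster add-ons:

- DNS: coredns, which offers better functionality and performance;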

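2. Initialize the system environment and global variables (all nodes)

2.1 Note: because resources are limited, this document uses the three machines 12, 13 and 14 to co-host the etcd cluster, the master cluster and the worker cluster.

Unless otherwise stated, the initialization steps in this document must be run on all nodes.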

2.2 kubelet and cri-o cgroup driver

Cgroup Driver: systemd

Both kubelet and cri-o are configured to use the systemd cgroup driver.

2.3 Set the hostname

Run the matching command on each node:

    hostnamectl set-hostname k8s-master-1
    hostnamectl set-hostname k8s-master-2
    hostnamectl set-hostname k8s-master-3
    ...

Do the same on the remaining nodes. Log out and log back in as root to see the new hostname take effect.

2.4 Set up SSH trust between nodes

    # Run this only on the qist node: set up passwordless root login to all nodes.
    ssh-keygen -t rsa
    # Copy the public key to each remote machine with ssh-copy-id.
    ssh-copy-id root@192.168.0.12
    ssh-copy-id root@192.168.0.13
    ssh-copy-id root@192.168.0.14
    ssh-copy-id root@192.168.0.x
    ssh-copy-id root@192.168.0.x
    ssh-copy-id root@192.168.0.x
    ssh-copy-id root@192.168.0.x

Note: ssh-copy-id writes the key to ~/.ssh/authorized_keys on the remote machine; the first connection requires password login.
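The repeated ssh-copy-id calls can be driven from a node list. The following is only a sketch: the `copy_keys` helper and the `DRY_RUN` switch are hypothetical names introduced here, and the list contains just the three node IPs used in this document. By default it prints the commands instead of running them.

```shell
# Hypothetical helper, not part of the original procedure.
# NODES holds the three node IPs used in this document; extend it as needed.
NODES="192.168.0.12 192.168.0.13 192.168.0.14"

copy_keys() {
  # With DRY_RUN=1 (the default) the commands are only printed.
  for node in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh-copy-id root@${node}"
    else
      ssh-copy-id "root@${node}"
    fi
  done
}

copy_keys $NODES
```

Setting `DRY_RUN=0` before calling `copy_keys` runs the real commands, each of which still prompts for the root password once.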

2.5 Install dependency packages

    yum install -y epel-release
    yum install -y chrony conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget socat git

2.6 Disable the firewall

Stop and disable firewalld, then flush the iptables rules:

    systemctl stop firewalld
    systemctl disable firewalld
    iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

2.7 Disable swap

Turn off swap, otherwise kubelet fails to start (alternatively, set the kubelet flag --fail-swap-on to false to skip the swap check):

    swapoff -a
    sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
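To see what the sed expression does before touching /etc/fstab, it can be tried on a throwaway file. This is a minimal sketch: the two fstab entries are made-up samples and `comment_out_swap` is a hypothetical wrapper that writes to stdout instead of editing in place.

```shell
# Demonstrate the sed expression on a temporary copy instead of /etc/fstab.
# The sample entries below are invented for illustration.
sample_fstab="$(mktemp)"
cat > "$sample_fstab" <<'EOF'
/dev/mapper/rl-root / xfs defaults 0 0
/dev/mapper/rl-swap none swap defaults 0 0
EOF

comment_out_swap() {
  # Prefix every line containing " swap " with '#'; other lines pass through unchanged.
  sed '/ swap / s/^\(.*\)$/#\1/' "$1"
}

comment_out_swap "$sample_fstab"
```

The pattern only matches when the word swap has a literal space on both sides, so an fstab whose fields are separated by tabs would be left unchanged.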

2.8 Disable SELinux

Disable SELinux, otherwise kubelet may report Permission denied when mounting directories:

    setenforce 0
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

2.9 Tune kernel parameters

    cat > /etc/sysctl.d/kubernetes.conf <<EOF
    net.ipv4.tcp_slow_start_after_idle=0
    net.core.rmem_max=16777216
    fs.inotify.max_user_watches=1048576
    kernel.softlockup_all_cpu_backtrace=1
    kernel.softlockup_panic=1
    fs.file-max=2097152
    fs.nr_open=2097152
    fs.inotify.max_user_instances=8192
    fs.inotify.max_queued_events=16384
    vm.max_map_count=262144
    net.core.netdev_max_backlog=16384
    net.ipv4.tcp_wmem=4096 12582912 16777216
    net.core.wmem_max=16777216
    net.core.somaxconn=32768
    net.ipv4.ip_forward=1
    net.ipv4.tcp_max_syn_backlog=8096
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    net.bridge.bridge-nf-call-arptables=1
    net.ipv4.tcp_rmem=4096 12582912 16777216
    vm.swappiness=0
    kernel.sysrq=1
    net.ipv4.neigh.default.gc_stale_time=120
    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0
    net.ipv4.conf.default.arp_announce=2
    net.ipv4.conf.lo.arp_announce=2
    net.ipv4.conf.all.arp_announce=2
    net.ipv4.tcp_max_tw_buckets=5000
    net.ipv4.tcp_syncookies=1
    net.ipv4.tcp_synack_retries=2
    net.ipv6.conf.lo.disable_ipv6=1
    net.ipv6.conf.all.disable_ipv6=1
    net.ipv6.conf.default.disable_ipv6=1
    net.ipv6.conf.all.forwarding=0
    net.ipv4.ip_local_port_range=1024 65535
    net.ipv4.tcp_keepalive_time=600
    net.ipv4.tcp_keepalive_probes=10
    net.ipv4.tcp_keepalive_intvl=30
    net.nf_conntrack_max=25000000
    net.netfilter.nf_conntrack_max=25000000
    net.netfilter.nf_conntrack_tcp_timeout_established=180
    net.netfilter.nf_conntrack_tcp_timeout_time_wait=120
    net.netfilter.nf_conntrack_tcp_timeout_close_wait=60
    net.netfilter.nf_conntrack_tcp_timeout_fin_wait=12
    net.ipv4.tcp_timestamps=0
    net.ipv4.tcp_orphan_retries=3
    fs.may_detach_mounts=1
    kernel.pid_max=4194303
    net.ipv4.tcp_tw_reuse=1
    net.ipv4.tcp_fin_timeout=1
    vm.min_free_kbytes=262144
    kernel.msgmnb=65535
    kernel.msgmax=65535
    kernel.shmmax=68719476736
    kernel.shmall=4294967296
    kernel.core_uses_pid=1
    net.ipv4.neigh.default.gc_thresh1=0
    net.ipv4.neigh.default.gc_thresh2=4096
    net.ipv4.neigh.default.gc_thresh3=8192
    net.netfilter.nf_conntrack_tcp_timeout_close=3
    net.ipv4.conf.all.route_localnet=1
    EOF

Apply the settings:

    sysctl -p /etc/sysctl.d/kubernetes.conf

Notes:

- Keep tcp_tw_recycle disabled; it conflicts with NAT and can make services unreachable.

- Add fs.may_detach_mounts=1 only on kernels older than version 4.

2.10 Open file limits

    cat >> /etc/security/limits.conf <<EOF
    * soft nofile 655350
    * hard nofile 655350
    * soft nproc 655350
    * hard nproc 655350
    * soft core unlimited
    * hard core unlimited
    EOF

On CentOS 7, the following change is also needed:

    sed -i 's/4096/655350/' /etc/security/limits.d/20-nproc.conf

2.11 Load kernel modules automatically at boot

Load the ipvs kernel modules:

    cat > /etc/modules-load.d/k8s-ipvs-modules.conf <<EOF
    ip_vs
    ip_vs_rr
    ip_vs_wrr
    ip_vs_sh
    EOF

Load netfilter and related modules.

On kernels older than version 4, use nf_conntrack_ipv4 instead of nf_conntrack.

    cat > /etc/modules-load.d/k8s-net-modules.conf <<EOF
    br_netfilter
    nf_conntrack
    EOF
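After the reboot at the end of this chapter it is worth checking that the modules are actually loaded. The sketch below is one way to do that; `missing_modules` is a hypothetical helper that reads a listing in /proc/modules format, where the module name is the first field.

```shell
# Modules listed in the two modules-load.d files of this section.
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh br_netfilter nf_conntrack"

missing_modules() {
  # $1: file in /proc/modules format; prints each required module that is absent.
  modules_file="$1"
  for mod in $REQUIRED_MODULES; do
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "$mod"
    fi
  done
}

# On a real node: missing_modules /proc/modules
```

An empty result means every module is present. Modules compiled directly into the kernel do not appear in /proc/modules, so they would be reported as missing by this check.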

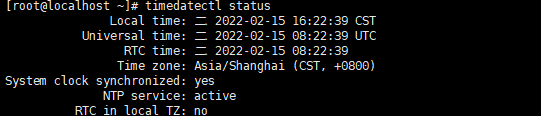

2.12 Set the system time zone

    timedatectl set-timezone Asia/Shanghai

2.13 Set up clock synchronization

    systemctl enable chronyd
    systemctl start chronyd

Check the synchronization status:

    timedatectl status

Output:

    System clock synchronized: yes
    NTP service: active
    RTC in local TZ: no

- System clock synchronized: yes means the clock is synchronized;

- NTP service: active means the time synchronization service is running;
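The status check can be scripted so that later steps only proceed once the clock is in sync. A minimal sketch, assuming the output format shown above; `clock_synced` is a hypothetical helper name.

```shell
clock_synced() {
  # Reads `timedatectl status` output on stdin; succeeds only if the clock is synchronized.
  grep -q '^ *System clock synchronized: yes'
}

# On a real node:
#   timedatectl status | clock_synced && echo "clock is synchronized"
```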

    # Write the current UTC time to the hardware clock
    timedatectl set-local-rtc 0
    # Restart services that depend on the system time
    systemctl restart rsyslog
    systemctl restart crond

2.14 Stop unneeded services

    systemctl stop postfix && systemctl disable postfix

2.15 Create the required directories

- Directories for the master components (create them on the three master nodes)

    # k8s directories
    mkdir -p /apps/k8s/{bin,log,conf,ssl,config}
    mkdir -p /apps/work/kubernetes/{manifests,kubelet}
    mkdir -p /var/lib/kubelet
    mkdir -p /usr/libexec/kubernetes/kubelet-plugins/volume/exec/
    mkdir -p /apps/k8s/ssl/{etcd,k8s}
    # etcd directories
    mkdir -p /apps/etcd/{bin,conf,data,ssl}
    # etcd data-dir
    mkdir -p /apps/etcd/data/default.etcd
    # etcd wal-dir
    mkdir -p /apps/etcd/data/default.etcd/wal

- Directories for the worker nodes (create them on the three node machines)

    mkdir -p /apps/k8s/{bin,log,conf,ssl}
    mkdir -p /apps/work/kubernetes/{manifests,kubelet}
    mkdir -p /var/lib/kubelet
    mkdir -p /usr/libexec/kubernetes/kubelet-plugins/volume/exec/

- cri-o directory layout (create it on the three node machines)

    mkdir -p /apps/crio/{run,etc,keys}
    mkdir -p /apps/crio/containers/oci/hooks.d
    mkdir -p /etc/containers
    mkdir -p /var/lib/containers/storage
    mkdir -p /run/containers/storage
    mkdir -p /apps/crio/lib/containers/storage
    mkdir -p /apps/crio/run/containers/storage

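2.16 Bind-mount the directories (run on the three node machines)

This mounts the directories created above onto the default storage paths of these components, which works much like a symlink.

- Mount the kubelet and cri-o data directories for maximum compatibility with dependent components such as CSI plugins

    cat >> /etc/fstab <<EOF
    /apps/work/kubernetes/kubelet /var/lib/kubelet none defaults,bind,nofail 0 0
    /apps/crio/lib/containers/storage /var/lib/containers/storage none defaults,bind,nofail 0 0
    /apps/crio/run/containers/storage /run/containers/storage none defaults,bind,nofail 0 0
    EOF

    # Note: if a larger partition is available, place the source directories on it

- Verify that the mounts are correct

    mount -a

Reboot the machine:

    sync
    reboot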

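3. Create the CA root certificates and keys

For security, the Kubernetes components use x509 certificates to encrypt and authenticate their communication.

The CA (Certificate Authority) is a self-signed root certificate that signs all certificates created later.

The CA certificate is shared by every node in the cluster; it is created once and then used to sign all other certificates.

This document uses cfssl, CloudFlare's PKI toolkit, to create all certificates.

Note: unless otherwise stated, all operations in this document are run on the qist node.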

3.1 Install the cfssl toolkit

    mkdir -p /opt/k8s/bin

    wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssl_1.4.1_linux_amd64
    mv cfssl_1.4.1_linux_amd64 /opt/k8s/bin/cfssl

    wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssljson_1.4.1_linux_amd64
    mv cfssljson_1.4.1_linux_amd64 /opt/k8s/bin/cfssljson

    wget https://github.com/cloudflare/cfssl/releases/download/v1.4.1/cfssl-certinfo_1.4.1_linux_amd64
    mv cfssl-certinfo_1.4.1_linux_amd64 /opt/k8s/bin/cfssl-certinfo

    chmod +x /opt/k8s/bin/*
    export PATH=/opt/k8s/bin:$PATH

3.2 Create the configuration file

The CA configuration file defines the usage scenarios (profiles) of the root certificate and their parameters (usages, expiry, server auth, client auth, encryption and so on).

Create the CA certificate directories for etcd and Kubernetes:

    mkdir -p /opt/k8s/cfssl/{etcd,k8s}
    mkdir -p /opt/k8s/cfssl/pki/{etcd,k8s}
    # Create the working directory
    mkdir -p /opt/k8s/work

Generate the global configuration file:

    cd /opt/k8s/work
    cat > /opt/k8s/cfssl/ca-config.json <<EOF
    {
      "signing": {
        "default": {
          "expiry": "876000h"
        },
        "profiles": {
          "kubernetes": {
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ],
            "expiry": "876000h"
          }
        }
      }
    }
    EOF

- signing: the certificate can be used to sign other certificates (the generated ca.pem has CA=TRUE);

- server auth: a client can use this certificate to verify the certificate presented by a server;

- client auth: a server can use this certificate to verify the certificate presented by a client;

- "expiry": "876000h": the certificate is valid for 100 years;
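The 100-year figure follows from simple arithmetic on the expiry value, counting 365-day years and ignoring leap days:

```shell
# 876000 hours / 24 hours per day / 365 days per year
expiry_hours=876000
echo "$(( expiry_hours / 24 / 365 )) years"
```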

3.3 Create the certificate signing request files

- etcd CA certificate signing request file

    cd /opt/k8s/work
    cat > /opt/k8s/cfssl/etcd/etcd-ca-csr.json <<EOF
    {
      "CN": "etcd",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ],
      "ca": {
        "expiry": "876000h"
      }
    }
    EOF

- Kubernetes CA certificate signing request file (ca-csr.json)

    cd /opt/k8s/work
    cat > /opt/k8s/cfssl/k8s/k8s-ca-csr.json <<EOF
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ],
      "ca": {
        "expiry": "876000h"
      }
    }
    EOF

- CN (Common Name): kube-apiserver extracts this field from the certificate as the user name of the request; browsers use it to check whether a website is legitimate;

- O (Organization): kube-apiserver extracts this field from the certificate as the group the requesting user belongs to;

- kube-apiserver uses the extracted User and Group as the identity for RBAC authorization;

Note:

- The combination of CN, C, ST, L, O and OU must differ between the CSR files of different certificates, otherwise a PEER'S CERTIFICATE HAS AN INVALID SIGNATURE error may occur;

- In the CSR files created later, the CN differs each time (C, ST, L, O and OU stay the same) so that the certificates can be told apart;

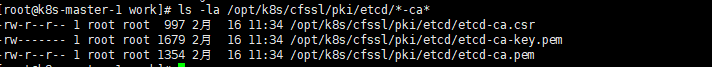

3.4 Generate the CA certificates and private keys

Generate the etcd CA certificate and private key:

    cd /opt/k8s/work
    cfssl gencert -initca /opt/k8s/cfssl/etcd/etcd-ca-csr.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd-ca

    ls -la /opt/k8s/cfssl/pki/etcd/*-ca*

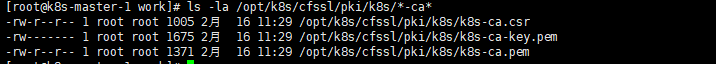

Generate the Kubernetes CA certificate and private key:

    cd /opt/k8s/work
    cfssl gencert -initca /opt/k8s/cfssl/k8s/k8s-ca-csr.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/k8s/k8s-ca

    ls -la /opt/k8s/cfssl/pki/k8s/*-ca*

3.5 Distribute the CA certificate files

Distribute the etcd CA certificate:

    cd /opt/k8s/work

    scp -r /opt/k8s/cfssl/pki/etcd/etcd-ca* root@192.168.0.12:/apps/etcd/ssl
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-ca* root@192.168.0.13:/apps/etcd/ssl
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-ca* root@192.168.0.14:/apps/etcd/ssl

Distribute the Kubernetes CA certificate:

    # CA certificate that Kubernetes uses to connect to etcd
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-ca.pem root@192.168.0.12:/apps/k8s/ssl/etcd/
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-ca.pem root@192.168.0.13:/apps/k8s/ssl/etcd/
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-ca.pem root@192.168.0.14:/apps/k8s/ssl/etcd/
    # Kubernetes cluster CA certificate
    scp -r /opt/k8s/cfssl/pki/k8s/k8s-ca* root@192.168.0.12:/apps/k8s/ssl/k8s
    scp -r /opt/k8s/cfssl/pki/k8s/k8s-ca* root@192.168.0.13:/apps/k8s/ssl/k8s
    scp -r /opt/k8s/cfssl/pki/k8s/k8s-ca* root@192.168.0.14:/apps/k8s/ssl/k8s

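4. Install and configure kubectl

This chapter describes how to install and configure kubectl, the Kubernetes command-line management tool.

Note:

- Unless otherwise stated, all operations in this document are run on the qist node;

- This step only needs to be done once; the generated kubeconfig file is generic and can be copied to ~/.kube/config on any machine that needs to run kubectl.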

4.1 Download and distribute the kubectl binary

    cd /root/ruanjian/
    wget https://dl.k8s.io/v1.23.3/kubernetes-client-linux-amd64.tar.gz   # use a proxy or mirror if the download is blocked
    tar -xzvf kubernetes-client-linux-amd64.tar.gz
    # Alternatively, download the package with all components (recommended)
    wget https://dl.k8s.io/v1.23.3/kubernetes-server-linux-amd64.tar.gz
    tar -xzvf kubernetes-server-linux-amd64.tar.gz

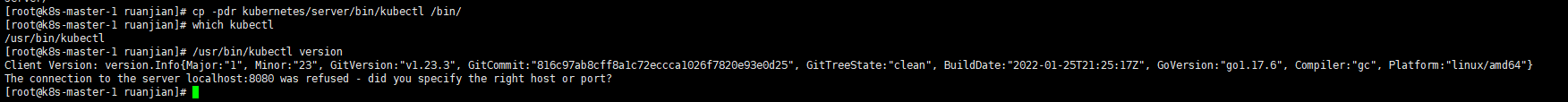

Distribute it to every node that will use kubectl:

    cd /root/ruanjian

    cp -pdr kubernetes/server/bin/kubectl /bin/

    which kubectl
    # expected output: /usr/bin/kubectl

    /usr/bin/kubectl version

    scp -r kubernetes/server/bin/kubectl root@192.168.0.12:/bin/
    scp -r kubernetes/server/bin/kubectl root@192.168.0.13:/bin/
    scp -r kubernetes/server/bin/kubectl root@192.168.0.14:/bin/

4.2 Create the admin certificate and private key

kubectl talks to kube-apiserver over HTTPS; kube-apiserver authenticates and authorizes the certificate contained in each kubectl request.

kubectl is used to administer the cluster later on, so an admin certificate with the highest privileges is created here.

Create the certificate signing request:

    cd /opt/k8s/work
    cat > /opt/k8s/cfssl/k8s/k8s-apiserver-admin.json << EOF
    {
      "CN": "admin",
      "hosts": [""],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "system:masters",
          "OU": "Kubernetes-manual"
        }
      ]
    }
    EOF

- O: system:masters: when kube-apiserver receives a client request that uses this certificate, it attaches the group identity system:masters to the request;

- The predefined ClusterRoleBinding cluster-admin binds the group system:masters to the ClusterRole cluster-admin, which grants the highest privileges needed to operate the cluster;

- This certificate is only used by kubectl as a client certificate, so the hosts field is empty;

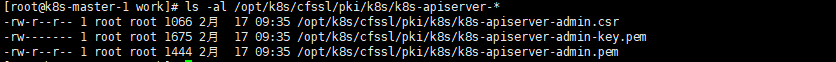

Generate the certificate and private key:

    cd /opt/k8s/work
    cfssl gencert -ca=/opt/k8s/cfssl/pki/k8s/k8s-ca.pem \
      -ca-key=/opt/k8s/cfssl/pki/k8s/k8s-ca-key.pem \
      -config=/opt/k8s/cfssl/ca-config.json \
      -profile=kubernetes \
      /opt/k8s/cfssl/k8s/k8s-apiserver-admin.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/k8s/k8s-apiserver-admin

    ls -al /opt/k8s/cfssl/pki/k8s/k8s-apiserver-*

4.3 Create the kubeconfig file

kubectl accesses the apiserver through a kubeconfig file, which contains the kube-apiserver address and the authentication data (CA certificate and client certificate):

    mkdir -p /opt/k8s/kubeconfig
    cd /opt/k8s/work

    # Set the cluster parameters
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/k8s/cfssl/pki/k8s/k8s-ca.pem \
      --embed-certs=true \
      --server=https://192.168.0.12:5443 \
      --kubeconfig=/opt/k8s/kubeconfig/admin.kubeconfig

    # Set the client credentials
    kubectl config set-credentials admin \
      --client-certificate=/opt/k8s/cfssl/pki/k8s/k8s-apiserver-admin.pem \
      --client-key=/opt/k8s/cfssl/pki/k8s/k8s-apiserver-admin-key.pem \
      --embed-certs=true \
      --kubeconfig=/opt/k8s/kubeconfig/admin.kubeconfig

    # Set the context parameters
    kubectl config set-context kubernetes \
      --cluster=kubernetes \
      --user=admin \
      --namespace=kube-system \
      --kubeconfig=/opt/k8s/kubeconfig/admin.kubeconfig

    # Set the default context
    kubectl config use-context kubernetes --kubeconfig=/opt/k8s/kubeconfig/admin.kubeconfig

- --certificate-authority: the root certificate used to verify the kube-apiserver certificate;

- --client-certificate, --client-key: the admin certificate and private key just generated, used for HTTPS communication with kube-apiserver;

- --embed-certs=true: embeds the contents of the CA and admin certificates into the generated admin.kubeconfig file (otherwise only the certificate file paths are written, and the certificate files have to be copied separately whenever the kubeconfig is copied to another machine);

- --server: the kube-apiserver address; here it points to the service on the first node;

4.4 Distribute the kubeconfig file

Distribute it to every node that will run kubectl commands:

    # Create the directories on every node that will use kubectl
    ssh root@192.168.0.12 mkdir -p ~/.kube
    ssh root@192.168.0.13 mkdir -p ~/.kube
    ssh root@192.168.0.14 mkdir -p ~/.kube

    ssh root@192.168.0.12 mkdir -p /opt/k8s/kubeconfig/
    ssh root@192.168.0.13 mkdir -p /opt/k8s/kubeconfig/
    ssh root@192.168.0.14 mkdir -p /opt/k8s/kubeconfig/

    cd /opt/k8s/work
    cp -pdr /opt/k8s/kubeconfig/admin.kubeconfig ~/.kube/config

    # Distribute as the default kubeconfig
    scp -r /opt/k8s/kubeconfig/admin.kubeconfig root@192.168.0.12:~/.kube/config
    scp -r /opt/k8s/kubeconfig/admin.kubeconfig root@192.168.0.13:~/.kube/config
    scp -r /opt/k8s/kubeconfig/admin.kubeconfig root@192.168.0.14:~/.kube/config

    # Distribute the configuration file
    scp -r /opt/k8s/kubeconfig/admin.kubeconfig root@192.168.0.12:/opt/k8s/kubeconfig/admin.kubeconfig
    scp -r /opt/k8s/kubeconfig/admin.kubeconfig root@192.168.0.13:/opt/k8s/kubeconfig/admin.kubeconfig
    scp -r /opt/k8s/kubeconfig/admin.kubeconfig root@192.168.0.14:/opt/k8s/kubeconfig/admin.kubeconfig

    # Alternatively, use an environment variable
    export KUBECONFIG=/opt/k8s/kubeconfig/admin.kubeconfig

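5. Deploy the etcd cluster

etcd is a Raft-based distributed key-value store developed by CoreOS. It is commonly used for service discovery, shared configuration and concurrency control (leader election, distributed locks and so on).

Kubernetes uses the etcd cluster to persist all API objects and runtime data.

This chapter describes how to deploy a three-node highly available etcd cluster:

- Download and distribute the etcd binaries;

- Create x509 certificates for each etcd node, used to encrypt traffic between clients (such as etcdctl) and the etcd cluster, and between etcd members;

- Create the etcd systemd unit file and configure the service parameters;

- Check that the cluster is working;

The etcd node names and IPs are:

- k8s-master-1: 192.168.0.12

- k8s-master-2: 192.168.0.13

- k8s-master-3: 192.168.0.14

Note: unless otherwise stated, all operations in this document are run on the qist node.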

5.1 Download and distribute the etcd binaries

Download the release package from the etcd release page:

    cd /opt/k8s/work
    wget https://github.com/etcd-io/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz
    tar -xvf etcd-v3.5.2-linux-amd64.tar.gz

Distribute the binaries to all cluster nodes:

    cd /opt/k8s/work
    scp -r etcd-v3.5.2-linux-amd64/etcd* root@192.168.0.12:/apps/etcd/bin
    scp -r etcd-v3.5.2-linux-amd64/etcd* root@192.168.0.13:/apps/etcd/bin
    scp -r etcd-v3.5.2-linux-amd64/etcd* root@192.168.0.14:/apps/etcd/bin

5.2 Create the etcd certificates and private keys

- Create the etcd server certificate

- Create the certificate signing request:

    cat > /opt/k8s/cfssl/etcd/etcd-server.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "127.0.0.1",
        "192.168.0.12","192.168.0.13","192.168.0.14",
        "k8s-master-1","k8s-master-2","k8s-master-3"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

Generate the certificate and private key:

    cfssl gencert \
      -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem \
      -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem \
      -config=/opt/k8s/cfssl/ca-config.json \
      -profile=kubernetes \
      /opt/k8s/cfssl/etcd/etcd-server.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd-server

Create the etcd member (peer) certificates

Node 192.168.0.12:

    cat > /opt/k8s/cfssl/etcd/k8s-master-1.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "127.0.0.1",
        "192.168.0.12",
        "k8s-master-1"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

Generate the certificate and private key:

    cfssl gencert \
      -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem \
      -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem \
      -config=/opt/k8s/cfssl/ca-config.json \
      -profile=kubernetes \
      /opt/k8s/cfssl/etcd/k8s-master-1.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd-member-k8s-master-1

Node 192.168.0.13:

    cat > /opt/k8s/cfssl/etcd/k8s-master-2.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "127.0.0.1",
        "192.168.0.13",
        "k8s-master-2"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

Generate the certificate and private key:

    cfssl gencert \
      -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem \
      -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem \
      -config=/opt/k8s/cfssl/ca-config.json \
      -profile=kubernetes \
      /opt/k8s/cfssl/etcd/k8s-master-2.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd-member-k8s-master-2

Node 192.168.0.14:

    cat > /opt/k8s/cfssl/etcd/k8s-master-3.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "127.0.0.1",
        "192.168.0.14",
        "k8s-master-3"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

Generate the certificate and private key:

    cfssl gencert \
      -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem \
      -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem \
      -config=/opt/k8s/cfssl/ca-config.json \
      -profile=kubernetes \
      /opt/k8s/cfssl/etcd/k8s-master-3.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd-member-k8s-master-3
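The three member CSR files differ only in node name and IP, so they can also be produced by a small generator. The following is a sketch under that assumption; `write_member_csr` is a hypothetical helper, and it writes to a temporary directory here rather than to /opt/k8s/cfssl/etcd.

```shell
# Hypothetical generator for the per-node etcd member CSR files.
write_member_csr() {
  # $1: node name, $2: node IP, $3: output directory
  cat > "$3/$1.json" <<EOF
{
  "CN": "etcd",
  "hosts": ["127.0.0.1", "$2", "$1"],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Shanghai", "L": "Shanghai", "O": "k8s", "OU": "Shooter" }
  ]
}
EOF
}

out_dir="$(mktemp -d)"   # on the qist node this would be /opt/k8s/cfssl/etcd
for entry in k8s-master-1=192.168.0.12 k8s-master-2=192.168.0.13 k8s-master-3=192.168.0.14; do
  # Split "name=ip" into its two halves with parameter expansion.
  write_member_csr "${entry%%=*}" "${entry#*=}" "$out_dir"
done
ls "$out_dir"
```

Each generated file can then be passed to the same cfssl gencert command shown above, with the matching etcd-member-<name> output prefix.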

- Create the etcd client certificate

- Create the certificate signing request:

    cat > /opt/k8s/cfssl/etcd/etcd-client.json << EOF
    {
      "CN": "client",
      "hosts": [""],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

Generate the certificate and private key:

    cfssl gencert \
      -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem \
      -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem \
      -config=/opt/k8s/cfssl/ca-config.json \
      -profile=kubernetes \
      /opt/k8s/cfssl/etcd/etcd-client.json | \
      cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd-client

Distribute the generated certificates and private keys to the etcd nodes:

    # Distribute the server certificate
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-server* root@192.168.0.12:/apps/etcd/ssl
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-server* root@192.168.0.13:/apps/etcd/ssl
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-server* root@192.168.0.14:/apps/etcd/ssl
    # Distribute the member certificate for 192.168.0.12
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-member-k8s-master-1* root@192.168.0.12:/apps/etcd/ssl
    # Distribute the member certificate for 192.168.0.13
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-member-k8s-master-2* root@192.168.0.13:/apps/etcd/ssl
    # Distribute the member certificate for 192.168.0.14
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-member-k8s-master-3* root@192.168.0.14:/apps/etcd/ssl

    # Distribute the client certificate to the master nodes; kube-apiserver uses it to connect to the etcd cluster
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-client* root@192.168.0.12:/apps/k8s/ssl/etcd/
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-client* root@192.168.0.13:/apps/k8s/ssl/etcd/
    scp -r /opt/k8s/cfssl/pki/etcd/etcd-client* root@192.168.0.14:/apps/k8s/ssl/etcd/

5.3 Create the etcd startup options file

Node 192.168.0.12: run on k8s-master-1

    cat > /apps/etcd/conf/etcd <<EOF
    ETCD_OPTS="--name=k8s-master-1 \
      --data-dir=/apps/etcd/data/default.etcd \
      --wal-dir=/apps/etcd/data/default.etcd/wal \
      --listen-peer-urls=https://192.168.0.12:2380 \
      --listen-client-urls=https://192.168.0.12:2379,https://127.0.0.1:2379 \
      --advertise-client-urls=https://192.168.0.12:2379 \
      --initial-advertise-peer-urls=https://192.168.0.12:2380 \
      --initial-cluster=k8s-master-1=https://192.168.0.12:2380,k8s-master-2=https://192.168.0.13:2380,k8s-master-3=https://192.168.0.14:2380 \
      --initial-cluster-token=k8s-cluster \
      --initial-cluster-state=new \
      --heartbeat-interval=6000 \
      --election-timeout=30000 \
      --snapshot-count=5000 \
      --auto-compaction-retention=1 \
      --max-request-bytes=33554432 \
      --quota-backend-bytes=107374182400 \
      --trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem \
      --cert-file=/apps/etcd/ssl/etcd-server.pem \
      --key-file=/apps/etcd/ssl/etcd-server-key.pem \
      --peer-cert-file=/apps/etcd/ssl/etcd-member-k8s-master-1.pem \
      --peer-key-file=/apps/etcd/ssl/etcd-member-k8s-master-1-key.pem \
      --peer-client-cert-auth \
      --cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 \
      --enable-v2=true \
      --peer-trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem"
    EOF

Node 192.168.0.13: run on k8s-master-2

    cat > /apps/etcd/conf/etcd <<EOF
    ETCD_OPTS="--name=k8s-master-2 \
      --data-dir=/apps/etcd/data/default.etcd \
      --wal-dir=/apps/etcd/data/default.etcd/wal \
      --listen-peer-urls=https://192.168.0.13:2380 \
      --listen-client-urls=https://192.168.0.13:2379,https://127.0.0.1:2379 \
      --advertise-client-urls=https://192.168.0.13:2379 \
      --initial-advertise-peer-urls=https://192.168.0.13:2380 \
      --initial-cluster=k8s-master-1=https://192.168.0.12:2380,k8s-master-2=https://192.168.0.13:2380,k8s-master-3=https://192.168.0.14:2380 \
      --initial-cluster-token=k8s-cluster \
      --initial-cluster-state=new \
      --heartbeat-interval=6000 \
      --election-timeout=30000 \
      --snapshot-count=5000 \
      --auto-compaction-retention=1 \
      --max-request-bytes=33554432 \
      --quota-backend-bytes=107374182400 \
      --trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem \
      --cert-file=/apps/etcd/ssl/etcd-server.pem \
      --key-file=/apps/etcd/ssl/etcd-server-key.pem \
      --peer-cert-file=/apps/etcd/ssl/etcd-member-k8s-master-2.pem \
      --peer-key-file=/apps/etcd/ssl/etcd-member-k8s-master-2-key.pem \
      --peer-client-cert-auth \
      --cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 \
      --enable-v2=true \
      --peer-trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem"
    EOF

Node 192.168.0.14: run on k8s-master-3

    cat > /apps/etcd/conf/etcd <<EOF
    ETCD_OPTS="--name=k8s-master-3 \
      --data-dir=/apps/etcd/data/default.etcd \
      --wal-dir=/apps/etcd/data/default.etcd/wal \
      --listen-peer-urls=https://192.168.0.14:2380 \
      --listen-client-urls=https://192.168.0.14:2379,https://127.0.0.1:2379 \
      --advertise-client-urls=https://192.168.0.14:2379 \
      --initial-advertise-peer-urls=https://192.168.0.14:2380 \
      --initial-cluster=k8s-master-1=https://192.168.0.12:2380,k8s-master-2=https://192.168.0.13:2380,k8s-master-3=https://192.168.0.14:2380 \
      --initial-cluster-token=k8s-cluster \
      --initial-cluster-state=new \
      --heartbeat-interval=6000 \
      --election-timeout=30000 \
      --snapshot-count=5000 \
      --auto-compaction-retention=1 \
      --max-request-bytes=33554432 \
      --quota-backend-bytes=107374182400 \
      --trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem \
      --cert-file=/apps/etcd/ssl/etcd-server.pem \
      --key-file=/apps/etcd/ssl/etcd-server-key.pem \
      --peer-cert-file=/apps/etcd/ssl/etcd-member-k8s-master-3.pem \
      --peer-key-file=/apps/etcd/ssl/etcd-member-k8s-master-3-key.pem \
      --peer-client-cert-auth \
      --cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 \
      --enable-v2=true \
      --peer-trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem"
    EOF
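The --initial-cluster value is identical on all three nodes and is built from the node names and peer URLs. As a small sketch, it can be assembled from name=ip pairs so it is typed only once; `initial_cluster` is a hypothetical helper, and port 2380 is the peer port used in the files above.

```shell
initial_cluster() {
  # Each argument is name=ip; prints the comma-separated --initial-cluster value.
  result=""
  for entry in "$@"; do
    name="${entry%%=*}"
    ip="${entry#*=}"
    # Append with a leading comma only when result is already non-empty.
    result="${result:+$result,}${name}=https://${ip}:2380"
  done
  echo "$result"
}

initial_cluster k8s-master-1=192.168.0.12 k8s-master-2=192.168.0.13 k8s-master-3=192.168.0.14
```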

5.4 Create the etcd systemd unit file

Run on each of k8s-master-1, k8s-master-2 and k8s-master-3:

    cat > /usr/lib/systemd/system/etcd.service <<EOF
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/etcd-io/etcd

    [Service]
    Type=notify
    LimitNOFILE=655350
    LimitNPROC=655350
    LimitCORE=infinity
    LimitMEMLOCK=infinity
    User=etcd
    Group=etcd
    WorkingDirectory=/apps/etcd/data/default.etcd
    EnvironmentFile=-/apps/etcd/conf/etcd
    ExecStart=/apps/etcd/bin/etcd \$ETCD_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

- WorkingDirectory, --data-dir: the working directory and the data directory (both /apps/etcd/data/default.etcd here); the directory must exist before the service starts;

- --wal-dir: the WAL directory; for better performance it usually sits on an SSD or on a different disk from --data-dir;

- --name: the node name; when --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list;

- --cert-file, --key-file: the certificate and private key used for communication between the etcd server and clients;

- --trusted-ca-file: the CA certificate that signed the client certificates, used to verify them;

- --peer-cert-file, --peer-key-file: the certificate and private key used for communication between etcd peers;

- --peer-trusted-ca-file: the CA certificate that signed the peer certificates, used to verify them;

5.5 Create the etcd service user

Run on each of k8s-master-1, k8s-master-2 and k8s-master-3.

Create the etcd user:

    useradd etcd -s /sbin/nologin -M

Give the user ownership of the etcd directory:

    chown -R etcd:etcd /apps/etcd

    [root@k8s-master-3 ~]# ls -la /apps/etcd/
    total 4
    drwxr-xr-x  7 etcd etcd   64 Feb 10 20:32 .
    drwxr-xr-x. 8 root root   85 Aug 26 18:54 ..
    drwxr-xr-x  3 etcd etcd  117 Feb 10 20:28 bin
    drwxr-xr-x  2 etcd etcd   18 Feb 10 20:33 conf
    drwxr-xr-x  3 etcd etcd   26 Aug 26 12:57 data
    drwxr-xr-x  2 etcd etcd 4096 Aug 26 12:58 ssl

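5.6 Start the etcd service

Run on each of k8s-master-1, k8s-master-2 and k8s-master-3:

    # Reload the systemd unit files
    systemctl daemon-reload
    # Enable etcd at boot
    systemctl enable etcd
    # Start etcd
    systemctl start etcd
    # Restart etcd
    systemctl restart etcd

- The etcd data directory and working directory must be created first;

- On first start, the etcd process waits for the other members to join the cluster, so systemctl start etcd hangs for a while; this is normal;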

5.7 Check the startup result

Run on each of k8s-master-1, k8s-master-2 and k8s-master-3:

    systemctl status etcd|grep Active

    [root@k8s-master-1 work]# systemctl status etcd|grep Active
       Active: active (running) since Thu 2022-02-17 10:41:07 CST; 13s ago

    [root@k8s-master-2 .kube]# systemctl status etcd|grep Active
       Active: active (running) since Thu 2022-02-17 10:41:07 CST; 13s ago

    [root@k8s-master-3 work]# systemctl status etcd|grep Active
       Active: active (running) since Thu 2022-02-17 10:41:07 CST; 13s ago

Make sure the state is active (running); otherwise check the logs to find the cause:

    journalctl -u etcd

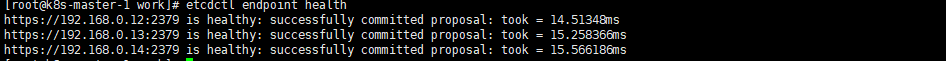

5.8 Verify the service status

After the etcd cluster is deployed, run the following on any etcd node:

    # Configure environment variables: add the lines below to /etc/profile
    vim /etc/profile

    export ETCDCTL_API=3
    export ENDPOINTS=https://192.168.0.12:2379,https://192.168.0.13:2379,https://192.168.0.14:2379

    alias etcdctl='/apps/etcd/bin/etcdctl --endpoints=${ENDPOINTS} --cacert=/apps/k8s/ssl/etcd/etcd-ca.pem --cert=/apps/k8s/ssl/etcd/etcd-client.pem --key=/apps/k8s/ssl/etcd/etcd-client-key.pem'

- etcd/etcdctl 3.5.2 enables the V3 API by default, so the environment variable ETCDCTL_API=3 is not strictly required when running etcdctl;

- Starting with Kubernetes 1.13, etcd v2 is no longer supported;

Expected output:

    [root@k8s-master-1 etcd]# etcdctl endpoint health
    https://192.168.0.12:2379 is healthy: successfully committed proposal: took = 14.51348ms
    https://192.168.0.13:2379 is healthy: successfully committed proposal: took = 15.258366ms
    https://192.168.0.14:2379 is healthy: successfully committed proposal: took = 15.566186ms

The cluster is working properly when every endpoint reports healthy.
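For scripted checks, the health output can be reduced to a count of healthy endpoints. This is only a sketch that assumes the output format shown above; `count_healthy` is a hypothetical helper.

```shell
count_healthy() {
  # Reads `etcdctl endpoint health` output on stdin and prints the number of healthy endpoints.
  grep -c 'is healthy'
}

# On an etcd node (aliases are not expanded in non-interactive scripts,
# so a script would need the full etcdctl command line from the alias):
#   etcdctl endpoint health 2>&1 | count_healthy
```

A result equal to the number of cluster members (three in this document) corresponds to the fully healthy case.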

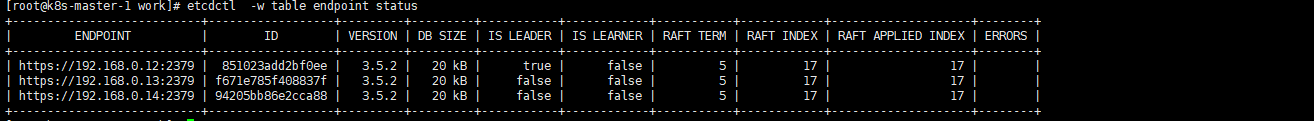

5.9 Check the current leader

    etcdctl -w table endpoint status

- In this run, the current leader is 192.168.0.12

6. Download and distribute the Kubernetes server binaries

Download the binary tarball from the CHANGELOG page and extract it:

    cd /opt/k8s/work
    wget https://dl.k8s.io/v1.23.3/kubernetes-server-linux-amd64.tar.gz   # use a proxy or mirror if the download is blocked
    tar -xzvf kubernetes-server-linux-amd64.tar.gz

Copy the binaries to all master nodes:

    cd /opt/k8s/work
    scp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubelet} root@192.168.0.12:/apps/k8s/bin/
    scp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubelet} root@192.168.0.13:/apps/k8s/bin/
    scp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubelet} root@192.168.0.14:/apps/k8s/bin/

Copy the binaries to all worker nodes:

    cd /opt/k8s/work
    scp -r kubernetes/server/bin/{kube-proxy,kubelet} root@192.168.2.187:/apps/k8s/bin/
    scp -r kubernetes/server/bin/{kube-proxy,kubelet} root@192.168.2.185:/apps/k8s/bin/
    scp -r kubernetes/server/bin/{kube-proxy,kubelet} root@192.168.3.62:/apps/k8s/bin/
    scp -r kubernetes/server/bin/{kube-proxy,kubelet} root@192.168.3.70:/apps/k8s/bin/

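7. apiserver high availability

Note: unless otherwise stated, all operations in this document are run on the qist node.

7.1 High-availability options

- ipvs + keepalived

- nginx + keepalived

- haproxy + keepalived

- A static nginx or haproxy pod started by the kubelet on every node

This document uses the last option: a static nginx or haproxy pod started by the kubelet on every node.

7.2 Build the nginx or haproxy image

- nginx dockerfile

- haproxy dockerfile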

7.3 Generate the kubelet static pod YAML

    mkdir -p /opt/k8s/work/yaml
    cd /opt/k8s/work/yaml
    cat >/opt/k8s/work/yaml/kube-ha-proxy.yaml <<EOF
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-apiserver-ha-proxy
        tier: control-plane
      annotations:
        prometheus.io/port: "8404"
        prometheus.io/scrape: "true"
      name: kube-apiserver-ha-proxy
      namespace: kube-system
    spec:
      containers:
      - args:
        - "CP_HOSTS=192.168.0.12,192.168.0.13,192.168.0.14"
        image: docker.io/juestnow/nginx-proxy:1.21.0
        imagePullPolicy: IfNotPresent
        name: kube-apiserver-ha-proxy
        env:
        - name: CPU_NUM
          value: "4"
        - name: BACKEND_PORT
          value: "5443"
        - name: HOST_PORT
          value: "6443"
        - name: CP_HOSTS
          value: "192.168.0.12,192.168.0.13,192.168.0.14"
      hostNetwork: true
      priorityClassName: system-cluster-critical
    status: {}
    EOF

Parameters:

- CPU_NUM: number of CPU cores nginx uses

- BACKEND_PORT: port the backend kube-apiserver listens on

- HOST_PORT: port the load balancer listens on

- CP_HOSTS: list of kube-apiserver IP addresses

- Metrics port: 8404, scraped by Prometheus
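How the image consumes these variables internally is not shown here. As a purely illustrative assumption, the sketch below combines CP_HOSTS and BACKEND_PORT into the backend addresses that the local proxy on HOST_PORT would forward to; `backend_addresses` is a hypothetical helper and not part of the image.

```shell
backend_addresses() {
  # $1: comma-separated CP_HOSTS value, $2: BACKEND_PORT
  old_ifs="$IFS"
  IFS=','
  # With IFS set to a comma, the unquoted $1 splits into one host per iteration.
  for host in $1; do
    echo "${host}:$2"
  done
  IFS="$old_ifs"
}

backend_addresses "192.168.0.12,192.168.0.13,192.168.0.14" 5443
```

With the values from the manifest, clients on each node would connect to the local port 6443 and be forwarded to one of these three 5443 backends.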

Prebuilt images:

- nginx image: docker.io/juestnow/nginx-proxy:1.21.0

- haproxy image: docker.io/juestnow/haproxy-proxy:2.4.0

Distribute kube-ha-proxy.yaml to all nodes:

    cd /opt/k8s/work/yaml/
    # master nodes
    scp kube-ha-proxy.yaml root@192.168.0.12:/apps/work/kubernetes/manifests/
    scp kube-ha-proxy.yaml root@192.168.0.13:/apps/work/kubernetes/manifests/
    scp kube-ha-proxy.yaml root@192.168.0.14:/apps/work/kubernetes/manifests/
    # worker nodes
    scp kube-ha-proxy.yaml root@192.168.0.x:/apps/work/kubernetes/manifests/
    scp kube-ha-proxy.yaml root@192.168.0.x:/apps/work/kubernetes/manifests/
    scp kube-ha-proxy.yaml root@192.168.0.x:/apps/work/kubernetes/manifests/
    scp kube-ha-proxy.yaml root@192.168.0.x:/apps/work/kubernetes/manifests/

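8. Container runtime

- docker

- containerd

- cri-o [this document uses cri-o as the runtime]

8.1 Deploy cri-o

cri-o implements the Kubernetes Container Runtime Interface (CRI) and provides the core container runtime functions such as image management and container management. Compared with docker it is simpler, more robust, and more portable.

With containerd, the cAdvisor interface does not expose pod network statistics, so pod network usage cannot be monitored directly. For that reason this document uses cri-o.

Note: unless stated otherwise, all operations in this document are run on the qist node.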

8.2 Download the binaries

    cd /opt/k8s/work
    wget https://storage.googleapis.com/cri-o/artifacts/cri-o.amd64.9b7f5ae815c22a1d754abfbc2890d8d4c10e240d.tar.gz

    tar -xvf cri-o.amd64.9b7f5ae815c22a1d754abfbc2890d8d4c10e240d.tar.gz

8.3 Edit the configuration files

Generate the cri-o configuration file:

    cd cri-o/etc
    cat > crio.conf <<EOF
    [crio]
    root = "/var/lib/containers/storage"
    runroot = "/var/run/containers/storage"
    log_dir = "/var/log/crio/pods"
    version_file = "/var/run/crio/version"
    version_file_persist = "/var/lib/crio/version"

    [crio.api]
    listen = "/var/run/crio/crio.sock"
    stream_address = "127.0.0.1"
    stream_port = "0"
    stream_enable_tls = false
    stream_tls_cert = ""
    stream_tls_key = ""
    stream_tls_ca = ""
    grpc_max_send_msg_size = 16777216
    grpc_max_recv_msg_size = 16777216

    [crio.runtime]
    default_ulimits = [
      "nofile=65535:65535",
      "nproc=65535:65535",
      "core=-1:-1"
    ]
    default_runtime = "crun"
    no_pivot = false
    decryption_keys_path = "/apps/crio/keys/"
    conmon = "/apps/crio/bin/conmon"
    conmon_cgroup = "system.slice"
    conmon_env = [
      "PATH=/apps/crio/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
    ]
    default_env = [
    ]
    selinux = false
    seccomp_profile = ""
    apparmor_profile = "crio-default"
    cgroup_manager = "systemd"
    default_capabilities = [
      "CHOWN",
      "MKNOD",
      "DAC_OVERRIDE",
      "NET_ADMIN",
      "NET_RAW",
      "SYS_CHROOT",
      "FSETID",
      "FOWNER",
      "SETGID",
      "SETUID",
      "SETPCAP",
      "NET_BIND_SERVICE",
      "KILL",
    ]
    default_sysctls = [
    ]
    additional_devices = [
    ]
    hooks_dir = [
      "/apps/crio/containers/oci/hooks.d",
    ]
    default_mounts = [
    ]
    pids_limit = 102400
    log_size_max = -1
    log_to_journald = false
    container_exits_dir = "/apps/crio/run/crio/exits"
    container_attach_socket_dir = "/var/run/crio"
    bind_mount_prefix = ""
    read_only = false
    log_level = "info"
    log_filter = ""
    uid_mappings = ""
    gid_mappings = ""
    ctr_stop_timeout = 30
    manage_ns_lifecycle = true
    namespaces_dir = "/apps/crio/run"
    pinns_path = "/apps/crio/bin/pinns"

    [crio.runtime.runtimes.crun]
    runtime_path = "/apps/crio/bin/crun"
    runtime_type = "oci"
    runtime_root = "/apps/crio/run/crun"
    allowed_annotations = [
      "io.containers.trace-syscall",
    ]

    [crio.image]
    default_transport = "docker://"
    global_auth_file = ""
    pause_image = "docker.io/juestnow/pause:3.5"
    pause_image_auth_file = ""
    pause_command = "/pause"
    signature_policy = ""
    image_volumes = "mkdir"

    [crio.network]
    network_dir = "/etc/cni/net.d"
    plugin_dirs = [
      "/opt/cni/bin",
    ]

    [crio.metrics]
    enable_metrics = false
    metrics_port = 9090
    EOF

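Parameters:

- root: directory where container images are stored;

- runroot: directory for container runtime state;

- log_dir: default directory for container logs; if the kubelet specifies a log directory, logs are written there instead;

- default_runtime: the default runtime;

- conmon: path to the conmon binary, which monitors the OCI runtime;

- conmon_env: environment variables passed to conmon;

- hooks_dir: OCI hooks directories;

- container_exits_dir: directory where conmon writes container exit files;

- namespaces_dir: directory where the state of managed namespaces is tracked. Only used when manage_ns_lifecycle is true;

- pinns_path: path to the pinns binary, which is required to manage the namespace lifecycle;

- runtime_path: absolute path to the runtime executable;

- runtime_root: root directory where the runtime stores its containers;

- pause_image: the pause image to use;

- network_dir: directory of CNI configuration files;

- plugin_dirs: directories containing the CNI plugin binaries;

- Default runtime: crun

- Install path: /apps/crio; adjust it to your environment

- Official documentation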
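Several of these keys point at binaries that must exist on every node before crio starts. As a hedged sketch, the helpers below read simple key = "value" lines from a crio.conf and report paths that are missing or not executable. crio_conf_get and check_crio_paths are hypothetical names, and the sed-based parsing assumes the one-value-per-line layout used above; it is not a TOML parser.

```shell
# Print the quoted value of the first `key = "value"` line in a crio.conf-style file.
crio_conf_get() {
  sed -n "s/^[[:space:]]*$1[[:space:]]*=[[:space:]]*\"\(.*\)\"[[:space:]]*$/\1/p" "$2" | head -n 1
}

# Report binary paths from the config that are missing or not executable.
check_crio_paths() {
  conf=$1
  for key in conmon pinns_path runtime_path; do
    path=$(crio_conf_get "$key" "$conf")
    [ -x "$path" ] || echo "missing or not executable: $key=$path"
  done
}
```

For example, running check_crio_paths /apps/crio/etc/crio.conf on a node after the files have been distributed prints nothing when conmon, pinns, and crun are all in place.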

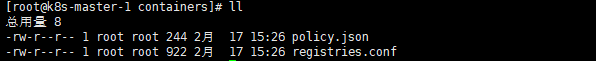

Generate the other configuration files cri-o needs at startup:

    cd /opt/k8s/work/cri-o
    mkdir containers
    cd containers
    cat > policy.json <<EOF
    {
        "default": [
            {
                "type": "insecureAcceptAnything"
            }
        ],
        "transports":
            {
                "docker-daemon":
                    {
                        "": [{"type":"insecureAcceptAnything"}]
                    }
            }
    }
    EOF

    cat >registries.conf <<EOF
    # This is a system-wide configuration file used to
    # keep track of registries for various container backends.
    # It adheres to TOML format and does not support recursive
    # lists of registries.

    # The default location for this configuration file is /etc/containers/registries.conf.

    # The only valid categories are: 'registries.search', 'registries.insecure',
    # and 'registries.block'.

    [registries.search]
    registries = ['registry.access.redhat.com', 'docker.io', 'registry.fedoraproject.org', 'quay.io', 'registry.centos.org']

    # If you need to access insecure registries, add the registry's fully-qualified name.
    # An insecure registry is one that does not have a valid SSL certificate or only does HTTP.
    [registries.insecure]
    registries = []

    # If you need to block pull access from a registry, uncomment the section below
    # and add the registries fully-qualified name.
    #
    # Docker only
    [registries.block]
    registries = []
    EOF

8.4 Create the cri-o systemd unit file

    cd /opt/k8s/work
    cat >crio.service <<EOF
    [Unit]
    Description=OCI-based implementation of Kubernetes Container Runtime Interface
    Documentation=https://github.com/cri-o/cri-o

    [Service]
    Type=notify
    ExecStartPre=-/sbin/modprobe br_netfilter
    ExecStartPre=-/sbin/modprobe overlay
    ExecStart=/apps/crio/bin/crio --config /apps/crio/etc/crio.conf --log-level info
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=655350
    LimitNPROC=655350
    LimitCORE=infinity
    LimitMEMLOCK=infinity
    TasksMax=infinity
    Delegate=yes
    KillMode=process

    [Install]
    WantedBy=multi-user.target
    EOF

8.5 Distribute the files

Distribute the binaries and configuration files:

    cd /opt/k8s/work/cri-o
    scp -r {bin,etc} root@192.168.0.12:/apps/crio
    scp -r {bin,etc} root@192.168.0.13:/apps/crio
    scp -r {bin,etc} root@192.168.0.14:/apps/crio
    # worker (node) machines
    scp -r {bin,etc} root@192.168.0.x:/apps/crio
    scp -r {bin,etc} root@192.168.0.x:/apps/crio
    scp -r {bin,etc} root@192.168.3.x:/apps/crio
    scp -r {bin,etc} root@192.168.3.x:/apps/crio

Distribute the other configuration files:

    cd /opt/k8s/work/cri-o
    scp -r containers root@192.168.0.12:/etc/
    scp -r containers root@192.168.0.13:/etc/
    scp -r containers root@192.168.0.14:/etc/
    scp -r containers root@192.168.2.x:/etc/
    scp -r containers root@192.168.2.x:/etc/
    scp -r containers root@192.168.3.x:/etc/
    scp -r containers root@192.168.3.x:/etc/

Distribute the systemd unit file:

    cd /opt/k8s/work
    scp crio.service root@192.168.0.12:/usr/lib/systemd/system/crio.service
    scp crio.service root@192.168.0.13:/usr/lib/systemd/system/crio.service
    scp crio.service root@192.168.0.14:/usr/lib/systemd/system/crio.service
    scp crio.service root@192.168.0.185:/usr/lib/systemd/system/crio.service
    scp crio.service root@192.168.0.187:/usr/lib/systemd/system/crio.service
    scp crio.service root@192.168.3.62:/usr/lib/systemd/system/crio.service
    scp crio.service root@192.168.3.70:/usr/lib/systemd/system/crio.service

8.6 Start the cri-o service

    # Reload systemd unit files
    systemctl daemon-reload
    # Enable cri-o at boot
    systemctl enable crio
    # Start cri-o
    systemctl start crio
    # Restart cri-o
    systemctl restart crio

8.7 Check the service status (run on every node)

    [root@k8s-master-1 work]# systemctl status crio|grep Active
       Active: active (running) since Thu 2022-02-17 15:29:49 CST; 6s ago
    [root@k8s-master-3 work]# systemctl status crio|grep Active
       Active: active (running) since Thu 2022-02-17 15:29:49 CST; 6s ago
    [root@k8s-master-2 ~]# systemctl status crio|grep Active
       Active: active (running) since Thu 2022-02-17 15:29:50 CST; 6s ago

    # Check the remaining nodes yourself

Make sure the state is active (running). If it is not, check the logs to find the cause:

    journalctl -u crio
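Checking every node by hand gets tedious as the cluster grows. The sketch below collects the state over SSH and lists only the hosts that need attention. collect_crio_state and not_active are hypothetical helper names, and the SSH step assumes the passwordless root login configured earlier in this document.

```shell
# Print "ip state" for each host, using `systemctl is-active` on the remote side.
# An unreachable host yields an empty state.
collect_crio_state() {
  for ip in "$@"; do
    printf '%s %s\n' "$ip" "$(ssh "root@$ip" systemctl is-active crio 2>/dev/null)"
  done
}

# Read "ip state" pairs on stdin and print the hosts whose state is not "active".
not_active() {
  awk '$2 != "active" { print $1 }'
}
```

A call such as collect_crio_state 192.168.0.12 192.168.0.13 192.168.0.14 | not_active prints nothing when all three services are running; any host it does print is a candidate for journalctl -u crio.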

8.8 Create and distribute the crictl configuration file

crictl is a command-line tool for CRI-compatible container runtimes and offers functionality similar to the docker command. See the official documentation for details.

    cd /opt/k8s/work
    cat << EOF | sudo tee crictl.yaml
    runtime-endpoint: "unix:///var/run/crio/crio.sock"
    image-endpoint: "unix:///var/run/crio/crio.sock"
    timeout: 10
    debug: false
    pull-image-on-create: true
    disable-pull-on-run: false
    EOF

Distribute it to all nodes:

    cd /opt/k8s/work
    scp crictl.yaml root@192.168.0.12:/etc/crictl.yaml
    scp crictl.yaml root@192.168.0.13:/etc/crictl.yaml
    scp crictl.yaml root@192.168.0.14:/etc/crictl.yaml
    # worker (node) machines
    scp crictl.yaml root@192.168.0.x:/etc/crictl.yaml
    scp crictl.yaml root@192.168.0.x:/etc/crictl.yaml
    scp crictl.yaml root@192.168.3.62:/etc/crictl.yaml
    scp crictl.yaml root@192.168.3.70:/etc/crictl.yaml

8.9 Verify that cri-o is reachable

    # Add the cri-o binaries to PATH: append the export line below to /etc/profile
    vim /etc/profile
    export PATH=/apps/crio/bin:$PATH

    # List images
    /apps/crio/bin/crictl images
    crictl images

    # Pull an image
    crictl pull docker.io/library/busybox:1.24

    # List all containers and their state
    crictl ps -a
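Editing /etc/profile by hand on every node is easy to get wrong or to repeat. As a small sketch, the hypothetical helper ensure_line below appends a line to a file only when it is not already there, so it is safe to run more than once.

```shell
# Append a line to a file only if an identical line is not already present.
ensure_line() {
  line=$1
  file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

On a node this could be called as ensure_line 'export PATH=/apps/crio/bin:$PATH' /etc/profile; the single quotes keep $PATH unexpanded so that it is evaluated at login time.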

9. Deploy the CNI plugins (required for container runtime networking)

9.1 Download the binaries

    cd /opt/k8s/work

    mkdir -p cni/bin

    wget https://github.com/containernetworking/plugins/releases/download/v1.0.1/cni-plugins-linux-amd64-v1.0.1.tgz

9.2 Extract and distribute the binaries

    cd /opt/k8s/work

    tar -xvf cni-plugins-linux-amd64-v1.0.1.tgz -C cni/bin

Distribute the files to all nodes:

    cd /opt/k8s/work
    scp -r cni root@192.168.0.12:/opt/
    scp -r cni root@192.168.0.13:/opt/
    scp -r cni root@192.168.0.14:/opt/
    scp -r cni root@192.168.0.15:/opt/
    scp -r cni root@192.168.0.16:/opt/
    scp -r cni root@192.168.0.x:/opt/
    scp -r cni root@192.168.0.x:/opt/

Create the CNI configuration directory:

    ssh root@192.168.0.12 mkdir -p /etc/cni/net.d
    ssh root@192.168.0.13 mkdir -p /etc/cni/net.d
    ssh root@192.168.0.14 mkdir -p /etc/cni/net.d
    ssh root@192.168.0.185 mkdir -p /etc/cni/net.d
    ssh root@192.168.0.187 mkdir -p /etc/cni/net.d
    ssh root@192.168.3.62 mkdir -p /etc/cni/net.d
    ssh root@192.168.3.70 mkdir -p /etc/cni/net.d

10. Deploy the kube-apiserver cluster

This section walks through deploying a three-instance kube-apiserver cluster.

Cluster plan:

- Service CIDR: 10.66.0.0/16

- Pod CIDR: 10.80.0.0/12

- Cluster domain: cluster.local

Note: unless stated otherwise, all operations in this document are run on the qist node.

10.1 Create the kube-apiserver certificate

Create the certificate signing request:

    cd /opt/k8s/work
    cat > /opt/k8s/cfssl/k8s/k8s-apiserver.json << EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "192.168.0.12","192.168.0.13","192.168.0.14",
        "10.66.0.1",
        "192.168.0.12","127.0.0.1",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

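The hosts field lists the IPs and domain names authorized to use this certificate. Here it contains the master node IPs plus the IP and domain names of the kubernetes Service.

Explanation:

- 10.66.0.1: the Service IP of kube-apiserver, normally the first address of the range set by the --service-cluster-ip-range parameter;

- "192.168.0.12","192.168.0.13","192.168.0.14": the master node IPs;

- "192.168.0.12","127.0.0.1": 192.168.0.12 acts as the VIP for client access, and the local 127.0.0.1 address is what clients use to reach the apiserver through kube-ha-proxy;

- "kubernetes.default.svc.cluster.local": the fully qualified domain name; cluster.local can be replaced by another cluster domain.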
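If a different Service CIDR is used, the Service IP in the hosts list has to change with it. The sketch below derives the first address of a CIDR with plain shell arithmetic; first_service_ip is a hypothetical helper name, and it only handles IPv4.

```shell
# Print the first address after the network address of an IPv4 CIDR.
first_service_ip() {
  cidr=$1
  net=${cidr%/*}
  bits=${cidr#*/}
  # Split the dotted quad into the positional parameters $1..$4.
  old_ifs=$IFS
  IFS=.
  set -- $net
  IFS=$old_ifs
  ip=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  first=$(( (ip & mask) + 1 ))
  printf '%d.%d.%d.%d\n' \
    $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
    $(( (first >> 8) & 255 )) $(( first & 255 ))
}

first_service_ip 10.66.0.0/16   # prints 10.66.0.1
```

The result is the address that belongs in the certificate's hosts list for the given --service-cluster-ip-range value.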

Generate the Kubernetes API Server certificate and private key:

    cfssl gencert \
        -ca=/opt/k8s/cfssl/pki/k8s/k8s-ca.pem \
        -ca-key=/opt/k8s/cfssl/pki/k8s/k8s-ca-key.pem \
        -config=/opt/k8s/cfssl/ca-config.json \
        -profile=kubernetes \
        /opt/k8s/cfssl/k8s/k8s-apiserver.json | \
        cfssljson -bare /opt/k8s/cfssl/pki/k8s/k8s-server

10.2 Create the encryption configuration file

    # Generate the encryption key required by the EncryptionConfig
    export ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

    cd /opt/k8s/work
    mkdir config
    cat > config/encryption-config.yaml << EOF
    kind: EncryptionConfig
    apiVersion: v1
    resources:
      - resources:
          - secrets
        providers:
          - aescbc:
              keys:
                - name: key1
                  secret: ${ENCRYPTION_KEY}
          - identity: {}
    EOF
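Because the heredoc expands ENCRYPTION_KEY, an unset or truncated variable would silently produce an unusable file. A quick sanity check, sketched here with a hypothetical helper is_32_byte_key and assuming GNU coreutils base64, confirms that the value decodes to the 32 bytes generated above.

```shell
# Succeed only if the argument is base64 that decodes to exactly 32 bytes.
is_32_byte_key() {
  n=$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c | tr -d ' ')
  [ "$n" -eq 32 ]
}
```

For example, is_32_byte_key "$ENCRYPTION_KEY" || echo "ENCRYPTION_KEY is missing or malformed" can be run before the file is distributed.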

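10.3 Create the Kubernetes webhook certificate

This is the aggregation-layer certificate. Besides direct access, kube-apiserver can be reached through a proxy such as kubectl proxy, and this certificate supports that TLS-proxied access. In this mode the client sends a plain HTTP request to the proxy. The proxy forwards the request to kube-apiserver and, before doing so, adds its certificate information to the request headers.

    client ---request---> proxy ---add header, forward request---> kube-apiserver
                          (client certificate)                     (server certificate)

The proxy layer (for example the aggregation layer, aggregator) uses this proxy certificate to authenticate to kube-apiserver.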

Create the certificate signing request:

    cd /opt/k8s/work
    cat > /opt/k8s/cfssl/k8s/aggregator.json << EOF
    {
      "CN": "aggregator",
      "hosts": [""],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "Shanghai",
          "L": "Shanghai",
          "O": "k8s",
          "OU": "Shooter"
        }
      ]
    }
    EOF

Note: the CN must appear in the kube-apiserver --requestheader-allowed-names parameter; otherwise later requests for metrics fail with an insufficient-permissions error.

Generate the Kubernetes webhook certificate and private key:

    cfssl gencert \
        -ca=/opt/k8s/cfssl/pki/k8s/k8s-ca.pem \
        -ca-key=/opt/k8s/cfssl/pki/k8s/k8s-ca-key.pem \
        -config=/opt/k8s/cfssl/ca-config.json \
        -profile=kubernetes \
        /opt/k8s/cfssl/k8s/aggregator.json | \
        cfssljson -bare /opt/k8s/cfssl/pki/k8s/aggregator

10.4 Create the kube-apiserver configuration file

Node 192.168.0.12: run on k8s-master-1

    cat >/apps/k8s/conf/kube-apiserver <<EOF
    KUBE_APISERVER_OPTS="--logtostderr=true \
      --bind-address=192.168.0.12 \
      --advertise-address=192.168.0.12 \
      --secure-port=5443 \
      --insecure-port=0 \
      --service-cluster-ip-range=10.66.0.0/16 \
      --service-node-port-range=30000-65535 \
      --etcd-cafile=/apps/k8s/ssl/etcd/etcd-ca.pem \
      --etcd-certfile=/apps/k8s/ssl/etcd/etcd-client.pem \
      --etcd-keyfile=/apps/k8s/ssl/etcd/etcd-client-key.pem \
      --etcd-prefix=/registry \
      --etcd-servers=https://192.168.0.12:2379,https://192.168.0.13:2379,https://192.168.0.14:2379 \
      --client-ca-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --tls-cert-file=/apps/k8s/ssl/k8s/k8s-server.pem \
      --tls-private-key-file=/apps/k8s/ssl/k8s/k8s-server-key.pem \
      --kubelet-client-certificate=/apps/k8s/ssl/k8s/k8s-server.pem \
      --kubelet-client-key=/apps/k8s/ssl/k8s/k8s-server-key.pem \
      --service-account-key-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --requestheader-client-ca-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --proxy-client-cert-file=/apps/k8s/ssl/k8s/aggregator.pem \
      --proxy-client-key-file=/apps/k8s/ssl/k8s/aggregator-key.pem \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --service-account-signing-key-file=/apps/k8s/ssl/k8s/k8s-ca-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true \
      --anonymous-auth=false \
      --experimental-encryption-provider-config=/apps/k8s/config/encryption-config.yaml \
      --enable-admission-plugins=DefaultStorageClass,DefaultTolerationSeconds,LimitRanger,NamespaceExists,NamespaceLifecycle,NodeRestriction,PodNodeSelector,PersistentVolumeClaimResize,PodTolerationRestriction,ResourceQuota,ServiceAccount,StorageObjectInUseProtection,MutatingAdmissionWebhook,ValidatingAdmissionWebhook \
      --disable-admission-plugins=ExtendedResourceToleration,ImagePolicyWebhook,LimitPodHardAntiAffinityTopology,NamespaceAutoProvision,Priority,EventRateLimit,PodSecurityPolicy \
      --cors-allowed-origins=.* \
      --enable-swagger-ui \
      --runtime-config=api/all=true \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --authorization-mode=Node,RBAC \
      --allow-privileged=true \
      --apiserver-count=3 \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=100 \
      --default-not-ready-toleration-seconds=30 \
      --default-unreachable-toleration-seconds=30 \
      --audit-log-truncate-enabled \
      --audit-log-path=/apps/k8s/log/api-server-audit.log \
      --profiling \
      --http2-max-streams-per-connection=10000 \
      --event-ttl=1h \
      --enable-bootstrap-token-auth=true \
      --alsologtostderr=true \
      --log-dir=/apps/k8s/log \
      --v=2 \
      --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 \
      --endpoint-reconciler-type=lease \
      --max-mutating-requests-inflight=500 \
      --max-requests-inflight=1500 \
      --target-ram-mb=300"
    EOF

Node 192.168.0.13: run on k8s-master-2

    cat >/apps/k8s/conf/kube-apiserver <<EOF
    KUBE_APISERVER_OPTS="--logtostderr=true \
      --bind-address=192.168.0.13 \
      --advertise-address=192.168.0.13 \
      --secure-port=5443 \
      --insecure-port=0 \
      --service-cluster-ip-range=10.66.0.0/16 \
      --service-node-port-range=30000-65535 \
      --etcd-cafile=/apps/k8s/ssl/etcd/etcd-ca.pem \
      --etcd-certfile=/apps/k8s/ssl/etcd/etcd-client.pem \
      --etcd-keyfile=/apps/k8s/ssl/etcd/etcd-client-key.pem \
      --etcd-prefix=/registry \
      --etcd-servers=https://192.168.0.12:2379,https://192.168.0.13:2379,https://192.168.0.14:2379 \
      --client-ca-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --tls-cert-file=/apps/k8s/ssl/k8s/k8s-server.pem \
      --tls-private-key-file=/apps/k8s/ssl/k8s/k8s-server-key.pem \
      --kubelet-client-certificate=/apps/k8s/ssl/k8s/k8s-server.pem \
      --kubelet-client-key=/apps/k8s/ssl/k8s/k8s-server-key.pem \
      --service-account-key-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --requestheader-client-ca-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --proxy-client-cert-file=/apps/k8s/ssl/k8s/aggregator.pem \
      --proxy-client-key-file=/apps/k8s/ssl/k8s/aggregator-key.pem \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --service-account-signing-key-file=/apps/k8s/ssl/k8s/k8s-ca-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true \
      --anonymous-auth=false \
      --experimental-encryption-provider-config=/apps/k8s/config/encryption-config.yaml \
      --enable-admission-plugins=DefaultStorageClass,DefaultTolerationSeconds,LimitRanger,NamespaceExists,NamespaceLifecycle,NodeRestriction,PodNodeSelector,PersistentVolumeClaimResize,PodTolerationRestriction,ResourceQuota,ServiceAccount,StorageObjectInUseProtection,MutatingAdmissionWebhook,ValidatingAdmissionWebhook \
      --disable-admission-plugins=ExtendedResourceToleration,ImagePolicyWebhook,LimitPodHardAntiAffinityTopology,NamespaceAutoProvision,Priority,EventRateLimit,PodSecurityPolicy \
      --cors-allowed-origins=.* \
      --enable-swagger-ui \
      --runtime-config=api/all=true \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --authorization-mode=Node,RBAC \
      --allow-privileged=true \
      --apiserver-count=3 \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=100 \
      --default-not-ready-toleration-seconds=30 \
      --default-unreachable-toleration-seconds=30 \
      --audit-log-truncate-enabled \
      --audit-log-path=/apps/k8s/log/api-server-audit.log \
      --profiling \
      --http2-max-streams-per-connection=10000 \
      --event-ttl=1h \
      --enable-bootstrap-token-auth=true \
      --alsologtostderr=true \
      --log-dir=/apps/k8s/log \
      --v=2 \
      --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 \
      --endpoint-reconciler-type=lease \
      --max-mutating-requests-inflight=500 \
      --max-requests-inflight=1500 \
      --target-ram-mb=300"
    EOF

Node 192.168.0.14: run on k8s-master-3

    cat >/apps/k8s/conf/kube-apiserver <<EOF
    KUBE_APISERVER_OPTS="--logtostderr=true \
      --bind-address=192.168.0.14 \
      --advertise-address=192.168.0.14 \
      --secure-port=5443 \
      --insecure-port=0 \
      --service-cluster-ip-range=10.66.0.0/16 \
      --service-node-port-range=30000-65535 \
      --etcd-cafile=/apps/k8s/ssl/etcd/etcd-ca.pem \
      --etcd-certfile=/apps/k8s/ssl/etcd/etcd-client.pem \
      --etcd-keyfile=/apps/k8s/ssl/etcd/etcd-client-key.pem \
      --etcd-prefix=/registry \
      --etcd-servers=https://192.168.0.12:2379,https://192.168.0.13:2379,https://192.168.0.14:2379 \
      --client-ca-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --tls-cert-file=/apps/k8s/ssl/k8s/k8s-server.pem \
      --tls-private-key-file=/apps/k8s/ssl/k8s/k8s-server-key.pem \
      --kubelet-client-certificate=/apps/k8s/ssl/k8s/k8s-server.pem \
      --kubelet-client-key=/apps/k8s/ssl/k8s/k8s-server-key.pem \
      --service-account-key-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --requestheader-client-ca-file=/apps/k8s/ssl/k8s/k8s-ca.pem \
      --proxy-client-cert-file=/apps/k8s/ssl/k8s/aggregator.pem \
      --proxy-client-key-file=/apps/k8s/ssl/k8s/aggregator-key.pem \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --service-account-signing-key-file=/apps/k8s/ssl/k8s/k8s-ca-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true \
      --anonymous-auth=false \
      --experimental-encryption-provider-config=/apps/k8s/config/encryption-config.yaml \
      --enable-admission-plugins=DefaultStorageClass,DefaultTolerationSeconds,LimitRanger,NamespaceExists,NamespaceLifecycle,NodeRestriction,PodNodeSelector,PersistentVolumeClaimResize,PodTolerationRestriction,ResourceQuota,ServiceAccount,StorageObjectInUseProtection,MutatingAdmissionWebhook,ValidatingAdmissionWebhook \
      --disable-admission-plugins=ExtendedResourceToleration,ImagePolicyWebhook,LimitPodHardAntiAffinityTopology,NamespaceAutoProvision,Priority,EventRateLimit,PodSecurityPolicy \
      --cors-allowed-origins=.* \
      --enable-swagger-ui \
      --runtime-config=api/all=true \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --authorization-mode=Node,RBAC \
      --allow-privileged=true \
      --apiserver-count=3 \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=100 \
      --default-not-ready-toleration-seconds=30 \
      --default-unreachable-toleration-seconds=30 \
      --audit-log-truncate-enabled \
      --audit-log-path=/apps/k8s/log/api-server-audit.log \
      --profiling \
      --http2-max-streams-per-connection=10000 \
      --event-ttl=1h \
      --enable-bootstrap-token-auth=true \
      --alsologtostderr=true \
      --log-dir=/apps/k8s/log \
      --v=2 \
      --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 \
      --endpoint-reconciler-type=lease \
      --max-mutating-requests-inflight=500 \
      --max-requests-inflight=1500 \
      --target-ram-mb=300"
    EOF
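The three files differ only in --bind-address and --advertise-address, so they can also be rendered from one template. This is a sketch rather than the method used above: render_for_node and push_apiserver_conf are hypothetical helpers, ##NODE_IP## is a made-up placeholder token, and the push step assumes the passwordless SSH login set up earlier.

```shell
# Substitute a node IP for the ##NODE_IP## placeholder in a shared template file.
render_for_node() {
  template=$1
  node_ip=$2
  sed "s/##NODE_IP##/$node_ip/g" "$template"
}

# Render the template for each master and write it to the node over SSH.
# Defined here for illustration; it is not invoked by this block.
push_apiserver_conf() {
  template=$1
  shift
  for ip in "$@"; do
    render_for_node "$template" "$ip" | ssh "root@$ip" 'cat > /apps/k8s/conf/kube-apiserver'
  done
}
```

With a template whose two address flags read --bind-address=##NODE_IP## and --advertise-address=##NODE_IP##, a call like push_apiserver_conf kube-apiserver.tpl 192.168.0.12 192.168.0.13 192.168.0.14 (the template file name is also an assumption) would install one file per master from the qist node.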

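Parameter notes:

- --advertise-address: the IP the apiserver advertises (the backend node IP of the kubernetes Service);

- --default-*-toleration-seconds: thresholds related to node failures;

- --max-*-requests-inflight: maximum number of in-flight requests;

- --etcd-*: certificates for accessing etcd and the etcd server addresses;

- --bind-address: the IP that HTTPS listens on. It must not be 127.0.0.1, otherwise the secure port 5443 is unreachable from outside;

- --secure-port: the HTTPS listening port;

- --insecure-port=0: disable the insecure HTTP port (8080);

- --tls-*-file: the certificate, private key, and CA file used by the apiserver;

- --audit-*: audit policy and audit log file settings;

- --client-ca-file: CA used to verify the certificates presented by clients (kube-controller-manager, kube-scheduler, kubelet, kube-proxy, and so on);

- --enable-bootstrap-token-auth: enable token authentication for kubelet bootstrap;

- --requestheader-*: settings for the kube-apiserver aggregator layer, needed by proxy-client and HPA;

- --requestheader-client-ca-file: the CA that signed the certificate given by --proxy-client-cert-file and --proxy-client-key-file; used when the metrics aggregator is enabled;

- --requestheader-allowed-names: must not be empty; a comma-separated list of CN names of the --proxy-client-cert-file certificate, set to "aggregator" here;

- --service-account-key-file: public key file used to verify ServiceAccount tokens. The matching private key is passed to kube-controller-manager with --service-account-private-key-file, and the two must be used as a pair;

- --runtime-config=api/all=true: enable all API versions, for example autoscaling/v2alpha1;

- --authorization-mode=Node,RBAC and --anonymous-auth=false: enable the Node and RBAC authorization modes and reject unauthorized requests;

- --enable-admission-plugins: enable some plugins that are off by default;

- --allow-privileged: allow containers to run in privileged mode;

- --apiserver-count=3: the number of apiserver instances;

- --event-ttl: how long events are retained;

- --kubelet-*: if set, the apiserver accesses the kubelet APIs over HTTPS. RBAC rules must be defined for the user in the certificate (the user of the certificate above is kubernetes), otherwise access to the kubelet API is rejected as unauthorized;

- --proxy-client-*: the certificate the apiserver uses to access metrics-server;

- --service-cluster-ip-range: the Service cluster IP range;

- --service-node-port-range: the NodePort port range.

Note: if the kube-apiserver machines do not run kube-proxy, the --enable-aggregator-routing=true parameter must also be added.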

10.5 Distribute the kube-apiserver certificates and configuration

Distribute the certificates:

    # Distribute the server certificate
    scp -r /opt/k8s/cfssl/pki/k8s/k8s-server* root@192.168.0.12:/apps/k8s/ssl/k8s
    scp -r /opt/k8s/cfssl/pki/k8s/k8s-server* root@192.168.0.13:/apps/k8s/ssl/k8s
    scp -r /opt/k8s/cfssl/pki/k8s/k8s-server* root@192.168.0.14:/apps/k8s/ssl/k8s
    # Distribute the webhook certificate
    scp -r /opt/k8s/cfssl/pki/k8s/aggregator* root@192.168.0.12:/apps/k8s/ssl/k8s
    scp -r /opt/k8s/cfssl/pki/k8s/aggregator* root@192.168.0.13:/apps/k8s/ssl/k8s
    scp -r /opt/k8s/cfssl/pki/k8s/aggregator* root@192.168.0.14:/apps/k8s/ssl/k8s

Distribute the configuration:

    cd /opt/k8s/work
    scp -r config root@192.168.0.12:/apps/k8s/
    scp -r config root@192.168.0.13:/apps/k8s/
    scp -r config root@192.168.0.14:/apps/k8s/

10.6 Create the kube-apiserver systemd unit file

Run on each of k8s-master-1, k8s-master-2, and k8s-master-3:

    cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    Type=notify
    LimitNOFILE=655350
    LimitNPROC=655350
    LimitCORE=infinity
    LimitMEMLOCK=infinity

    EnvironmentFile=-/apps/k8s/conf/kube-apiserver
    ExecStart=/apps/k8s/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure
    RestartSec=5

    [Install]
    WantedBy=multi-user.target
    EOF

10.7 Start the kube-apiserver service

Run on each of k8s-master-1, k8s-master-2, and k8s-master-3:

    # Reload systemd unit files
    systemctl daemon-reload
    # Enable kube-apiserver at boot
    systemctl enable kube-apiserver
    # Start kube-apiserver
    systemctl start kube-apiserver
    # Restart kube-apiserver
    systemctl restart kube-apiserver

10.8 Check the service status

Run on each of k8s-master-1, k8s-master-2, and k8s-master-3:

    [root@k8s-master-1 work]# systemctl status kube-apiserver|grep Active
       Active: active (running) since Thu 2022-02-17 16:15:12 CST; 10s ago

    [root@k8s-master-2 ~]# systemctl status kube-apiserver|grep Active
       Active: active (running) since Thu 2022-02-17 16:15:12 CST; 11s ago

    [root@k8s-master-3 work]# systemctl status kube-apiserver|grep Active
       Active: active (running) since Thu 2022-02-17 16:15:13 CST; 10s ago

Make sure the state is active (running). If it is not, check the logs to find the cause:

    journalctl -u kube-apiserver

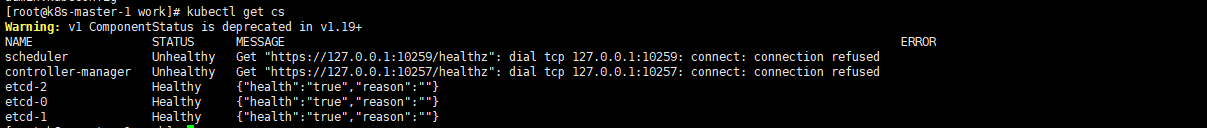

10.9 Verify the service status

After the kube-apiserver cluster is deployed, run the following on the qist node:

    # Set the environment variable
    export KUBECONFIG=/opt/k8s/kubeconfig/admin.kubeconfig

    [root@k8s-master-1 work]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS      MESSAGE                                                                                        ERROR
    scheduler            Unhealthy   Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused
    controller-manager   Unhealthy   Get "https://127.0.0.1:10257/healthz": dial tcp 127.0.0.1:10257: connect: connection refused
    etcd-2               Healthy     {"health":"true","reason":""}
    etcd-0               Healthy     {"health":"true","reason":""}
    etcd-1               Healthy     {"health":"true","reason":""}

scheduler and controller-manager report errors because they have not been deployed yet.

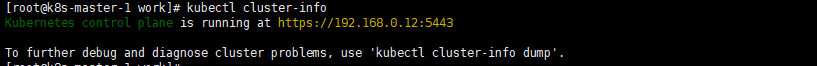

    kubectl cluster-info

Expected output:

    [root@k8s-master-1 work]# kubectl cluster-info
    Kubernetes control plane is running at https://192.168.0.12:5443

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Output like the above means the cluster is working.

Continue with the second article in this series.

- Permalink: https://www.yoyoask.com/?p=7581

- When reposting, please credit: shooter, published on SHOOTER