Installation order

    # Create the namespace
    kubectl create namespace monitoring

    # custom-metrics-api must be deployed before prometheus-adapter.
    # The full order is:
    #   custom-metrics-api
    #   prometheus-adapter
    #   kube-state-metrics
    #   prometheus
    #   process-exporter
    #   node-exporter
    #   alertmanager
    #   blackbox-exporter
    #   grafana
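The order above can be scripted. The following is only a sketch: it assumes each component sits in a directory named after it under one base directory, which may not match your checkout. The function name `deploy_in_order` and the `COMPONENTS` variable are made up for this illustration. Steps that the later sections describe (the etcd secret, the rules configmap, editing ingress hosts) still have to be done by hand before the corresponding component is applied.

```shell
# Components in the order listed above.
COMPONENTS="custom-metrics-api prometheus-adapter kube-state-metrics prometheus process-exporter node-exporter alertmanager blackbox-exporter grafana"

# deploy_in_order [BASE_DIR]
# Applies each component directory in order and stops at the first failure.
# Assumption: every component lives in BASE_DIR/<component-name>.
deploy_in_order() {
  base="${1:-.}"
  for component in $COMPONENTS; do
    if [ ! -d "$base/$component" ]; then
      echo "missing directory: $base/$component" >&2
      return 1
    fi
    echo "applying $component"
    kubectl apply -f "$base/$component" || return 1
  done
}
```

Calling `deploy_in_order .` from the directory that holds the component folders would apply them one after another.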

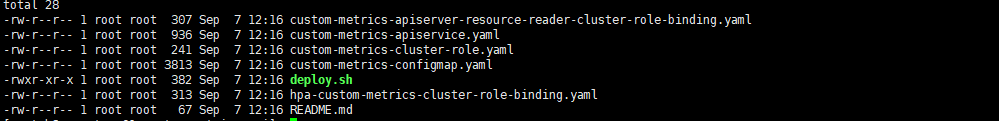

I. Deploy custom-metrics-api

    chmod a+x deploy.sh
    ./deploy.sh          # deploy with the script
    # or apply the manifests directly
    kubectl apply -f .

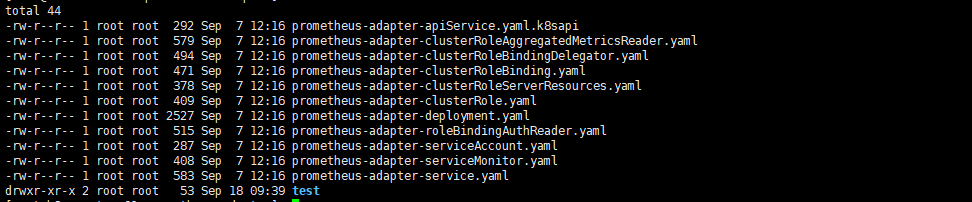

II. Deploy prometheus-adapter (exposes Prometheus metrics through the Kubernetes custom metrics API)

    cd prometheus-adapter
    kubectl apply -f .
    # To test it, apply the files in the test folder

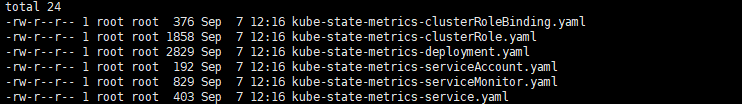

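III. Deploy kube-state-metrics

While services are running we want to know their state: whether pods have restarted, whether scaling succeeded, what state each pod is in, and so on. That is the job of kube-state-metrics, which focuses on the state of internal objects such as deployments, nodes, and pods. metrics-server, by contrast, mainly reports resource usage such as CPU and memory for nodes and pods.

    # Apply directly
    kubectl apply -f .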
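Before moving on to the next component it can help to confirm that the deployments applied so far are actually available. A minimal sketch using `kubectl wait`; the helper name `wait_for_deployments` and the default timeout are choices made for this example, not part of the original manifests.

```shell
# wait_for_deployments [NAMESPACE] [TIMEOUT]
# Blocks until every Deployment in the namespace reports the Available
# condition, or fails once the timeout expires.
wait_for_deployments() {
  ns="${1:-monitoring}"
  timeout="${2:-120s}"
  kubectl -n "$ns" wait --for=condition=Available deployment --all --timeout="$timeout"
}
```

For example, `wait_for_deployments monitoring 180s` returns non-zero if any deployment in `monitoring` is still unavailable after three minutes.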

IV. Deploy prometheus

    cd prometheus/

4.1 Create the etcd certificate secret

Copy the etcd certificates that were generated when the cluster was installed into /opt/etcd/cfssl/pki/etcd/, then run the command below. Create the directory first if it does not exist.

    kubectl -n monitoring create secret generic etcd-certs \
      --from-file=/opt/etcd/cfssl/pki/etcd/etcd-ca.pem \
      --from-file=/opt/etcd/cfssl/pki/etcd/etcd-client.pem \
      --from-file=/opt/etcd/cfssl/pki/etcd/etcd-client-key.pem

    ## etcd certificate paths for a kubeadm install; remember to update the etcd certificate paths in prometheus-configmap.yaml
    kubectl -n monitoring create secret generic etcd-certs \
      --from-file=/etc/kubernetes/pki/etcd/ca.crt \
      --from-file=/etc/kubernetes/pki/apiserver-etcd-client.crt \
      --from-file=/etc/kubernetes/pki/apiserver-etcd-client.key
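A missing certificate file makes `kubectl create secret` fail partway through the procedure, so it can be worth checking the files first. The sketch below wraps the non-kubeadm variant of the command; the function name `create_etcd_secret` is hypothetical, and the file names are the ones used in the command above.

```shell
# create_etcd_secret [CERT_DIR]
# Verifies that the three certificate files exist and are readable,
# then creates the etcd-certs secret in the monitoring namespace.
create_etcd_secret() {
  cert_dir="${1:-/opt/etcd/cfssl/pki/etcd}"
  for f in etcd-ca.pem etcd-client.pem etcd-client-key.pem; do
    if [ ! -r "$cert_dir/$f" ]; then
      echo "missing or unreadable: $cert_dir/$f" >&2
      return 1
    fi
  done
  kubectl -n monitoring create secret generic etcd-certs \
    --from-file="$cert_dir/etcd-ca.pem" \
    --from-file="$cert_dir/etcd-client.pem" \
    --from-file="$cert_dir/etcd-client-key.pem"
}
```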

4.2 Create the monitoring rules

    # rule is the folder that holds the rule files
    kubectl -n monitoring create configmap prometheus-k8s-rulefiles --from-file rule
    ## Updating the alerting rules
    # Delete the existing rules configmap
    kubectl -n monitoring delete configmap prometheus-k8s-rulefiles
    # Create it again so the updated rules take effect
    kubectl -n monitoring create configmap prometheus-k8s-rulefiles --from-file rule
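Deleting and recreating the configmap leaves a short window in which it does not exist. One commonly used alternative is to render the configmap client-side and pipe it into `kubectl apply`, which creates or updates it in a single step. This is a sketch, not the original author's method; it assumes a kubectl version that supports `--dry-run=client` (1.18 or later), and the function name `update_rules` is made up here.

```shell
# update_rules [RULE_DIR]
# Renders the rules configmap locally and applies it, so the same
# command works for both the first creation and later updates.
update_rules() {
  rule_dir="${1:-rule}"
  kubectl -n monitoring create configmap prometheus-k8s-rulefiles \
    --from-file "$rule_dir" --dry-run=client -o yaml |
    kubectl -n monitoring apply -f -
}
```

If the configmap was first created with plain `kubectl create`, the first `apply` may print a warning about a missing last-applied annotation; later runs should not.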

4.3 Deploy prometheus

    # Change into the prometheus directory
    # blackbox-exporter-files-discover.yaml configures monitoring of external sites
    # rule is the directory that holds the rule files

Changing Prometheus storage: the manifests currently use ephemeral storage. For production, remember to edit prometheus.yaml as follows:

    volumeClaimTemplates:
    - metadata:
        name: prometheus-k8s-db
        annotations:
          volume.beta.kubernetes.io/storage-class: "nfs-csi"
      spec:
        accessModes: [ "ReadWriteMany" ]
        storageClassName: nfs-csi
        resources:
          requests:
            storage: 200Gi
        volumeMode: Filesystem

    # Note
    prometheus-Ingress.yaml   # change the host to your own domain so it can be reached from outside

    kubectl apply -f .

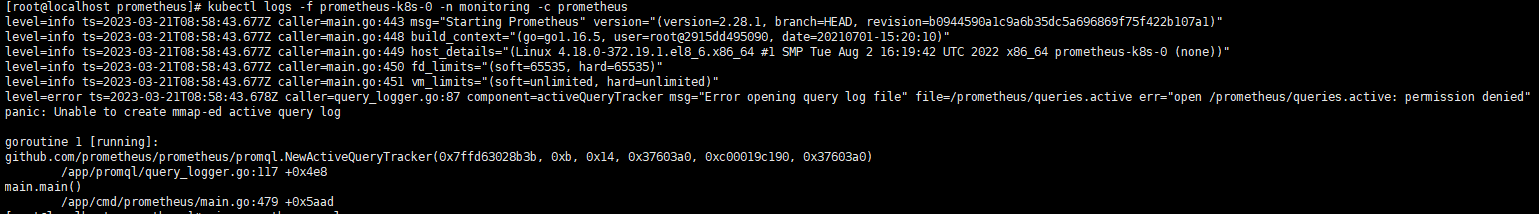

存储可能会遇到的错误:

|

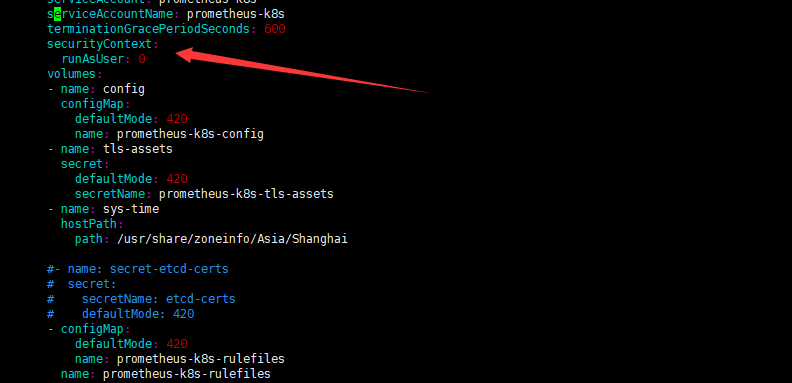

1 2 3 4 5 6 7 8 9 |

打开查询日志文件“file=/prometeus/queries.active err=”打开/prometeus/queries.aactive:拒绝权限“时出错 panic:无法创建mmap-ed活动查询日志 解决办法:通过 securityContext 来为 Pod 设置下 volumes 的权限,通过设置 runAsUser=0 指定运行的用户为 root: 在修改prometheus.yaml中添加: securityContext: runAsUser: 0 |
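To tell this failure apart from other crash causes, the container log can be scanned for its signature. The helper below is a small sketch: it looks for `queries.active` followed by `permission denied`, which is how Prometheus words the error in English. The function name is hypothetical, and the pod name in the usage note is a placeholder you need to fill in.

```shell
# has_query_log_permission_error
# Reads log text on stdin and succeeds if it contains the
# "queries.active ... permission denied" error.
has_query_log_permission_error() {
  grep -q 'queries\.active.*permission denied'
}
```

Usage might look like `kubectl -n monitoring logs <prometheus-pod> | has_query_log_permission_error && echo "storage permission problem"`.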

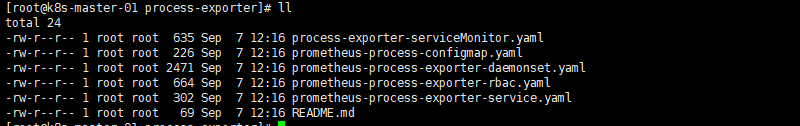

V. Deploy process-exporter (process monitoring)

    # Apply directly
    kubectl apply -f .

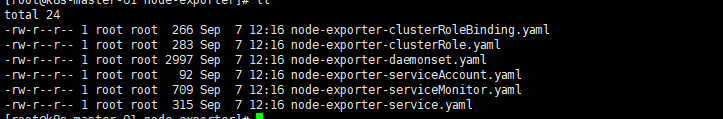

VI. Deploy node-exporter (key metrics and status data for each node)

    # Apply directly
    kubectl apply -f .

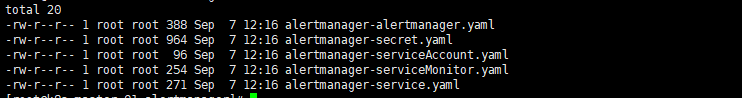

VII. Deploy alertmanager (alerting component)

    # Apply directly
    kubectl apply -f .

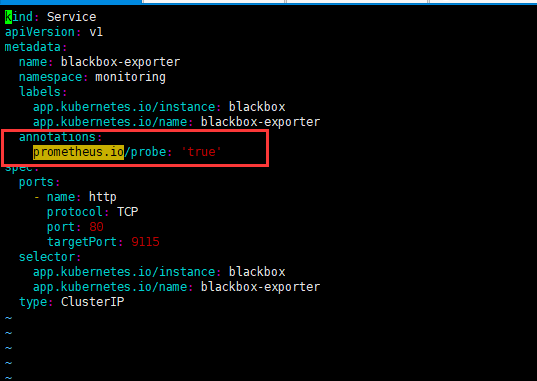

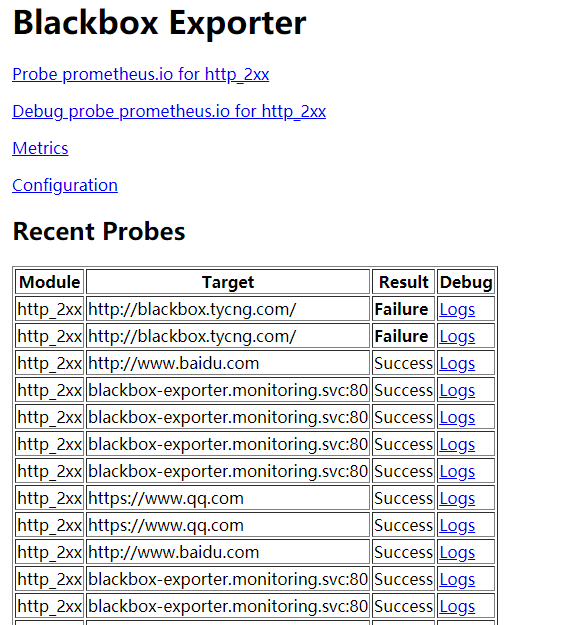

VIII. Deploy blackbox-exporter (black-box probing)

blackbox-exporter probes the cluster's services, ingresses, and similar resources, which it finds through automatic discovery.

8.1 Before deploying, edit blackbox-exporter-service.yaml

    vim blackbox-exporter-service.yaml

Add this annotation:

    annotations:
      prometheus.io/probe: 'true'

After the change the file looks like this:

    kind: Service
    apiVersion: v1
    metadata:
      name: blackbox-exporter
      namespace: monitoring
      labels:
        app.kubernetes.io/instance: blackbox
        app.kubernetes.io/name: blackbox-exporter
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/scheme: 'https'
        prometheus.io/port: '9115'
        prometheus.io/web: 'true'
        prometheus.io/tls: 'https'
        prometheus.io/tcp: 'true'
        prometheus.io/icmp: 'true'
        prometheus.io/probe: 'true'
    spec:
      ports:
        - name: https
          port: 9115
          targetPort: https
        - name: http
          port: 19115
          targetPort: http
      selector:
        app.kubernetes.io/instance: blackbox
        app.kubernetes.io/name: blackbox-exporter
      type: ClusterIP

8.2 Then edit blackbox-exporter-Ingress.yaml

    vim blackbox-exporter-Ingress.yaml

Ingresses need an annotation as well:

    annotations:
      prometheus.io/probed: 'true'

After the change the file looks like this:

    kind: Ingress
    apiVersion: networking.k8s.io/v1
    metadata:
      name: blackbox-exporter
      namespace: monitoring
      annotations:
        prometheus.io/probed: 'true'
    spec:
      rules:
        - host: blackbox.shooter.com
          http:
            paths:
              - pathType: ImplementationSpecific
                path: /
                backend:
                  service:
                    name: blackbox-exporter
                    port:
                      name: http
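The annotations from 8.1 and 8.2 can also be set on objects that already exist in the cluster with `kubectl annotate`. The sketch below maps the resource kind to the annotation key used in this guide (`prometheus.io/probe` for services, `prometheus.io/probed` for ingresses). The function name `enable_probe` is made up for this example. Editing the manifest is still the better choice if you want the change to live alongside the rest of your YAML.

```shell
# enable_probe KIND NAME [NAMESPACE]
# Adds the blackbox probe annotation used in this guide to a live object.
enable_probe() {
  kind="$1"
  name="$2"
  ns="${3:-monitoring}"
  case "$kind" in
    service|svc) key="prometheus.io/probe" ;;
    ingress|ing) key="prometheus.io/probed" ;;
    *) echo "unsupported kind: $kind" >&2; return 1 ;;
  esac
  kubectl -n "$ns" annotate "$kind" "$name" "$key=true" --overwrite
}
```

For instance, `enable_probe ingress blackbox-exporter` would annotate the ingress shown above.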

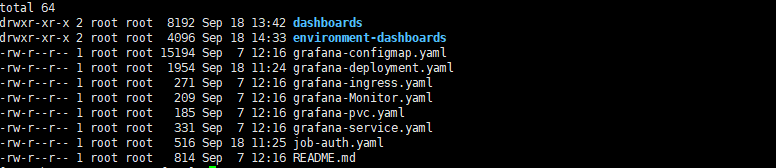

IX. Deploy grafana

    # To expose grafana externally, edit grafana-ingress.yaml and change the host to your own domain
    # Apply directly
    kubectl apply -f .

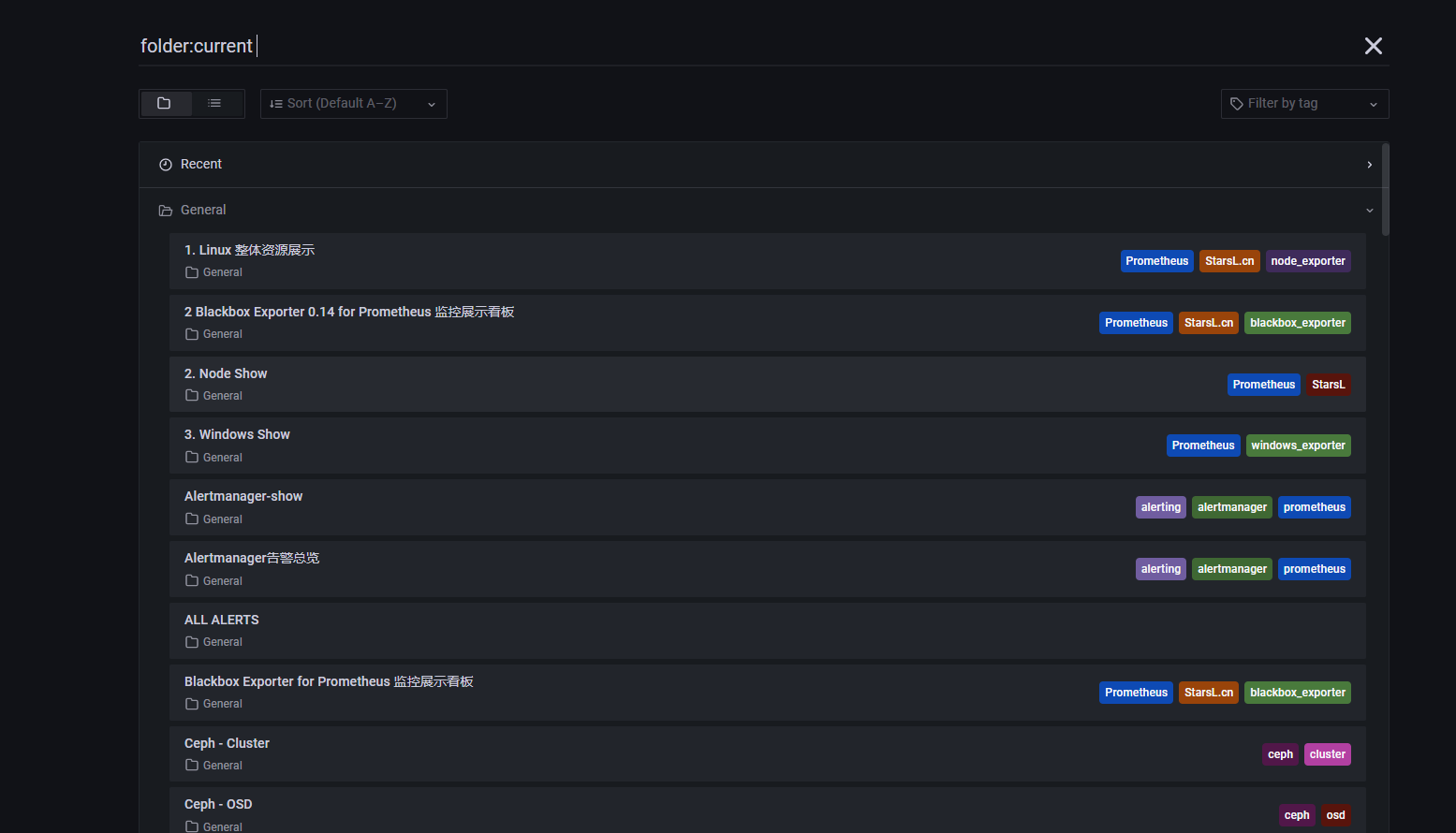

9.1 Import the dashboards

    cd dashboards
    chmod a+x import-dashboards.sh
    vim import-dashboards.sh
    # Edit import-dashboards.sh and set your own domain or IP:port.
    # The script fails if this is left unchanged.
    # Run it
    ./import-dashboards.sh
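The import script talks to Grafana over HTTP, so it fails if Grafana is not reachable yet. A minimal sketch that polls Grafana's `/api/health` endpoint before running the import; the function name `wait_for_grafana`, the retry count, and the delay are assumptions for illustration, and the URL you pass must be whatever you configured in import-dashboards.sh.

```shell
# wait_for_grafana URL [TRIES] [DELAY_SECONDS]
# Polls URL/api/health until it answers successfully or the tries run out.
wait_for_grafana() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    if curl -fsS -o /dev/null "$url/api/health"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "Grafana not reachable at $url" >&2
  return 1
}
```

A possible invocation is `wait_for_grafana http://<your-grafana-host> && ./import-dashboards.sh`.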

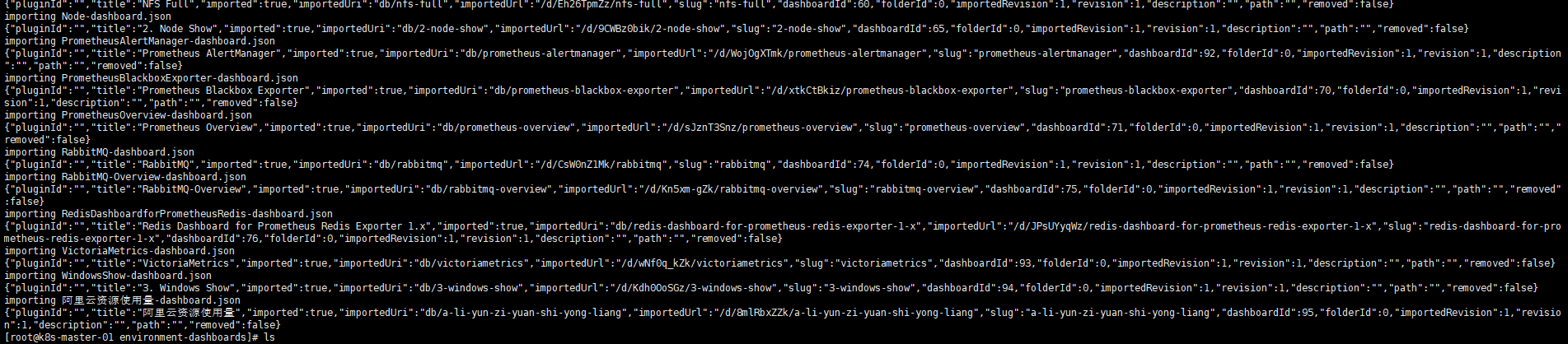

9.2 Import the dashboards in environment-dashboards

    cd environment-dashboards
    chmod a+x import-dashboards.sh
    vim import-dashboards.sh
    # Edit import-dashboards.sh and set your own domain or IP:port.
    # The script fails if this is left unchanged.
    # Run it
    ./import-dashboards.sh

Notes:

    # If any dashboards fail to import, import the failed files manually
    kubectl get pod -n monitoring | grep grafana
    kubectl -n monitoring exec -ti grafana-84668b789-v5slh -- /bin/sh
    # Install the plugin
    grafana-cli plugins install grafana-piechart-panel
    # For multiple environments, use the dashboards in the environment-dashboards directory
    # Kill PID 1 to restart grafana
    # For MySQL monitoring, add these flags:
    #   --collect.perf_schema.file_events
    #   --collect.perf_schema.eventswaits
    #   --collect.perf_schema.indexiowaits
    #   --collect.perf_schema.tableiowaits
    #   --collect.perf_schema.tablelocks
    #   --collect.info_schema.processlist
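The pod name in the notes above changes with every rollout, so hard-coding it is fragile. The following sketch looks the pod up by name pattern and runs the plugin install non-interactively. Both function names are hypothetical, and it assumes exactly one relevant pod whose name contains `grafana` in the `monitoring` namespace.

```shell
# grafana_pod
# Prints the first pod in the monitoring namespace whose name contains "grafana".
grafana_pod() {
  kubectl -n monitoring get pod -o name | grep grafana | head -n 1
}

# install_grafana_plugin [PLUGIN]
# Runs grafana-cli inside the grafana pod to install a plugin.
install_grafana_plugin() {
  plugin="${1:-grafana-piechart-panel}"
  pod="$(grafana_pod)"
  if [ -z "$pod" ]; then
    echo "no grafana pod found in namespace monitoring" >&2
    return 1
  fi
  kubectl -n monitoring exec "$pod" -- grafana-cli plugins install "$plugin"
}
```

The plugin only becomes active after Grafana restarts, which the notes above do by killing PID 1 inside the container.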

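An error to watch for:

    Error: ✗ Get "https://grafana.com/api/plugins/repo/grafana-clock-panel": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

This error means the plugin repository could not be reached (the request to the external network timed out). If you hit it, comment out the env section.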

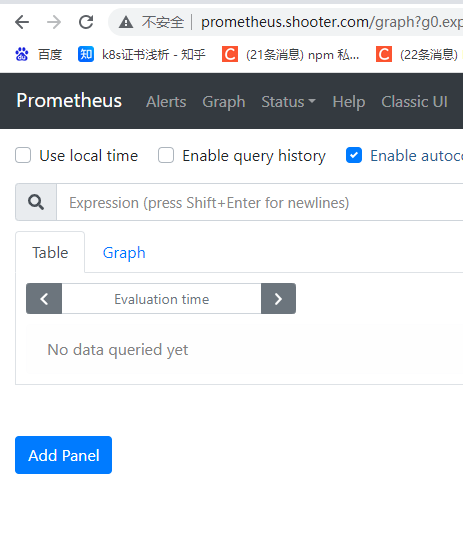

After installation, add the host mappings

    192.168.0.177 prometheus.shooter.com
    192.168.0.177 monitor.shooter.com
    192.168.0.177 blackbox.shooter.com
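If you script this step, appending blindly adds duplicate lines on every run. A small sketch that only appends a mapping when the hostname is not already present; the function name `add_host_entry` is invented here, and writing to the real /etc/hosts requires root.

```shell
# add_host_entry IP HOSTNAME [HOSTS_FILE]
# Appends "IP HOSTNAME" unless HOSTNAME already appears as a name
# on a non-comment line of the hosts file.
add_host_entry() {
  ip="$1"
  host="$2"
  hosts_file="${3:-/etc/hosts}"
  if awk -v h="$host" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }
     ' "$hosts_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$host" >> "$hosts_file"
}
```

For example, `add_host_entry 192.168.0.177 prometheus.shooter.com` can be run repeatedly without growing the file.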

Access

Grafana

BlackBox

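Grafana opens with the dashboards already in place. Hats off to the original author's import scripts: even the data sources are added automatically.

Original source: https://github.com/qist/k8s/tree/main/k8s-yaml/kube-prometheus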

X. Writing Service monitoring annotations

10.1 Monitoring a Service inside the cluster

    cat << EOF | kubectl apply -f -
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: prometheus-k8s
      name: prometheus-k8s
      annotations:
        prometheus.io/scrape: 'true'
        # With multiple ports, pick the one to scrape: prometheus.io/port: '9090'
        # Metrics path: prometheus.io/path: '/metrics'
        # Scheme, http or https: prometheus.io/scheme: 'http'
      namespace: monitoring
    spec:
      ports:
      - name: web
        port: 9090
        targetPort: web
      selector:
        app: prometheus-k8s
      sessionAffinity: ClientIP
    EOF

10.2 Monitoring targets outside the cluster through a Service

    cat << EOF | kubectl apply -f -
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/port: "102"
        prometheus.io/scrape: "true"
        prometheus.io/scheme: "https" # or http
      labels:
        k8s-app: kube
      name: kube
      namespace: monitoring
    spec:
      clusterIP: None
      ports:
      - name: https-metrics
        port: 102
        protocol: TCP
        targetPort: 102
      sessionAffinity: None
      type: ClusterIP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: kube
      name: kube
      namespace: monitoring
    subsets:
    - addresses:
      - ip: 192.168.2.175
      - ip: 192.168.2.176
      - ip: 192.168.2.177
      ports:
      - name: https-metrics
        port: 102
        protocol: TCP
    EOF
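The Endpoints object in 10.2 is where the external IPs are listed, and it has to be rewritten whenever the set of hosts changes. The sketch below generates that object from a list of IPs so it can be piped into `kubectl apply -f -`. The function name `make_external_endpoints` is hypothetical; the YAML layout, the `https-metrics` port name, and the `monitoring` namespace follow the example above.

```shell
# make_external_endpoints NAME PORT IP...
# Prints an Endpoints manifest for the given external IPs.
make_external_endpoints() {
  if [ "$#" -lt 3 ]; then
    echo "usage: make_external_endpoints NAME PORT IP..." >&2
    return 1
  fi
  name="$1"
  port="$2"
  shift 2
  cat << EOF
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: $name
  name: $name
  namespace: monitoring
subsets:
- addresses:
EOF
  for ip in "$@"; do
    printf '  - ip: %s\n' "$ip"
  done
  cat << EOF
  ports:
  - name: https-metrics
    port: $port
    protocol: TCP
EOF
}
```

Something like `make_external_endpoints kube 102 192.168.2.175 192.168.2.176 | kubectl apply -f -` would then update the address list; the matching Service still has to exist with the same name.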

10.3 Monitoring etcd with its own TLS certificates

    # Monitoring etcd with its own TLS certificates
    cat << EOF | kubectl apply -f -
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: etcd
      name: etcd
      namespace: monitoring
    spec:
      clusterIP: None
      ports:
      - name: https-metrics
        port: 2379
        protocol: TCP
        targetPort: 2379
      sessionAffinity: None
      type: ClusterIP
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: etcd
      name: etcd
      namespace: monitoring
    subsets:
    - addresses:
      - ip: 192.168.2.175
      - ip: 192.168.2.176
      - ip: 192.168.2.177
      ports:
      - name: https-metrics
        port: 2379
        protocol: TCP
    EOF

10.4 Pod monitoring annotations

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-apiserver-ha-proxy
        tier: control-plane
      annotations:
        prometheus.io/port: "8404"
        # With multiple ports, pick the one to scrape: prometheus.io/port: '8084'
        # Metrics path: prometheus.io/path: '/metrics'
        # Scheme, http or https: prometheus.io/scheme: 'http'
        prometheus.io/scrape: "true"
      name: kube-apiserver-ha-proxy
      namespace: kube-system
    spec:
      containers:
      - args:
        - "CP_HOSTS=192.168.2.175,192.168.2.176,192.168.2.177"
        image: docker.io/juestnow/nginx-proxy:1.21.0
        imagePullPolicy: IfNotPresent
        name: kube-apiserver-ha-proxy
        env:
        - name: CPU_NUM
          value: "4"
        - name: BACKEND_PORT
          value: "5443"
        - name: HOST_PORT
          value: "6443"
        - name: CP_HOSTS
          value: "192.168.2.175,192.168.2.176,192.168.2.177"
      hostNetwork: true
      priorityClassName: system-cluster-critical
    status: {}

    ### For daemonsets, deployments, and statefulsets, annotate the pod template
    spec:
      selector:
        matchLabels:
          k8s-app: traefik
      template:
        metadata:
          creationTimestamp: null
          labels:
            k8s-app: traefik
          annotations:
            prometheus.io/port: '8080'
            # With multiple ports, pick the one to scrape: prometheus.io/port: '8080'
            # Metrics path: prometheus.io/path: '/metrics'
            # Scheme, http or https: prometheus.io/scheme: 'http'
            prometheus.io/scrape: 'true'
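After adding annotations like the ones in this section, one way to check that Prometheus picked the targets up is to ask its HTTP API. The sketch below counts active targets whose health is `up` by reading `/api/v1/targets`. The function name `count_up_targets` is made up, and the default URL assumes Prometheus is reachable at the `prometheus.shooter.com` host from the hosts mapping, which may not hold in your setup.

```shell
# count_up_targets [PROMETHEUS_URL]
# Prints the number of targets that Prometheus currently reports as healthy.
# Prints 0 if the API cannot be reached.
count_up_targets() {
  prom_url="${1:-http://prometheus.shooter.com}"
  curl -fsS "$prom_url/api/v1/targets" | grep -o '"health":"up"' | wc -l | tr -d ' '
}
```

Comparing the number before and after applying an annotated Service or Pod gives a quick signal that discovery worked; the Targets page in the Prometheus UI shows the same information in more detail.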

XI. blackbox-exporter: annotations for in-cluster monitoring

11.1 Service annotations for HTTP probing

    cat << EOF | kubectl apply -f -
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: prometheus-k8s
      name: prometheus-k8s
      annotations:
        prometheus.io/web: 'true' # enable HTTP probing
        # prometheus.io/tls: 'https' # the default is http
        # With multiple ports, pick the one to probe: prometheus.io/port: '9090'
        prometheus.io/healthz: "/-/healthy" # URL to probe
      namespace: monitoring
    spec:
      ports:
      - name: web
        port: 9090
        targetPort: web
      selector:
        app: prometheus-k8s
      sessionAffinity: ClientIP
    EOF

11.2 Service annotations for TCP probing

    cat << EOF | kubectl apply -f -
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: prometheus-k8s
      name: prometheus-k8s
      annotations:
        prometheus.io/tcp: 'true' # enable TCP probing; all ports are probed
      namespace: monitoring
    spec:
      ports:
      - name: web
        port: 9090
        targetPort: web
      selector:
        app: prometheus-k8s
      sessionAffinity: ClientIP
    EOF

11.3 Service annotations for ICMP probing

    cat << EOF | kubectl apply -f -
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: prometheus-k8s
      name: prometheus-k8s
      annotations:
        prometheus.io/icmp: 'true' # ping the service name
      namespace: monitoring
    spec:
      ports:
      - name: web
        port: 9090
        targetPort: web
      selector:
        app: prometheus-k8s
      sessionAffinity: ClientIP
    EOF

11.4 blackbox-exporter: annotations for in-cluster Ingress monitoring

    cat << EOF | kubectl apply -f -
    kind: Ingress
    apiVersion: networking.k8s.io/v1
    metadata:
      name: prometheus-dashboard
      annotations:
        prometheus.io/probed: 'true' # enable Ingress probing
      namespace: monitoring
    spec:
      rules:
        - host: prometheus.tycng.com
          http:
            paths:
              - pathType: ImplementationSpecific
                path: /
                backend:
                  service:
                    name: prometheus-k8s
                    port:
                      number: 9090
    EOF

11.5 blackbox-exporter: monitoring external domains and other resources outside the cluster

    # Edit blackbox-exporter-files-discover.yaml
    cd prometheus
    kubectl apply -f blackbox-exporter-files-discover.yaml
    # The targets refresh automatically

11.6 master

When kube-controller-manager and kube-scheduler are deployed on the same machines, they are discovered and monitored automatically. Neither needs any additional monitoring configuration.

- Permalink: https://www.yoyoask.com/?p=10150

- When reposting, please credit: published by shooter on SHOOTER